Fusion Encoder Networks

Mar 04, 2024

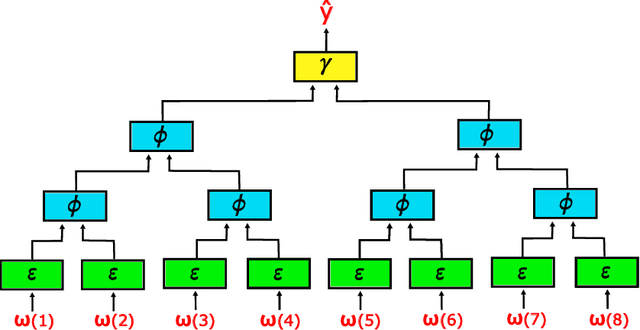

In this paper, we present fusion encoder networks (FENs): a class of algorithms for creating neural networks that map sequences to outputs. The resulting neural network has only logarithmic depth (alleviating the degradation of data as it propagates through the network) and can process sequences in linear time (or in logarithmic time with a linear number of processors). The crucial property of FENs is that they learn by training a quasi-linear number of constant-depth feed-forward neural networks in parallel. Because these networks have constant depth, backpropagation works well. We note that the performance of FENs is currently only conjectured, as we have yet to implement them.
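The depth and running-time claims can be made concrete with a toy example. The sketch below is not the FEN construction, which the abstract does not specify. It only assumes that a sequence is combined by fusing adjacent pairs level by level in a balanced binary tree. The names `tree_fuse` and `fuse` are hypothetical and chosen for illustration. Under that assumption, a sequence of length n needs n - 1 fusions in total (linear work) and about log2(n) levels (logarithmic depth), and the fusions within one level are independent of each other.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def tree_fuse(items: Sequence[T], fuse: Callable[[T, T], T]) -> Tuple[T, int, int]:
    """Combine `items` pairwise, level by level (illustrative only).

    Returns (result, depth, fuse_calls), where depth is the number of
    levels and fuse_calls is the total number of pairwise fusions.
    """
    if not items:
        raise ValueError("items must be non-empty")
    level: List[T] = list(items)
    depth = 0
    fuse_calls = 0
    while len(level) > 1:
        next_level: List[T] = []
        # Pairs within a level do not depend on each other, so with
        # enough processors a whole level could be computed at once.
        for i in range(0, len(level) - 1, 2):
            next_level.append(fuse(level[i], level[i + 1]))
            fuse_calls += 1
        if len(level) % 2 == 1:
            # An unpaired last element is carried up unchanged.
            next_level.append(level[-1])
        level = next_level
        depth += 1
    return level[0], depth, fuse_calls


# Hypothetical usage: string concatenation stands in for a learned fusion step.
result, depth, fuse_calls = tree_fuse(list("abcdefgh"), lambda a, b: a + b)
```

For the eight-element input above, the reduction takes three levels and seven fusions. In a learned setting, `fuse` would be replaced by a small network; how FENs define and train that step is left to the paper.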
