Fusing Laser Scanner and Stereo Camera in Evidential Grid Maps

Paper and Code

Feb 22, 2019

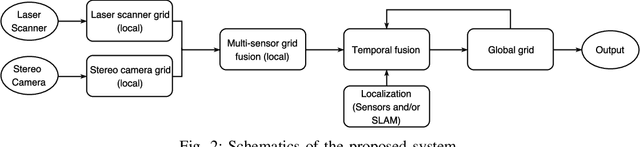

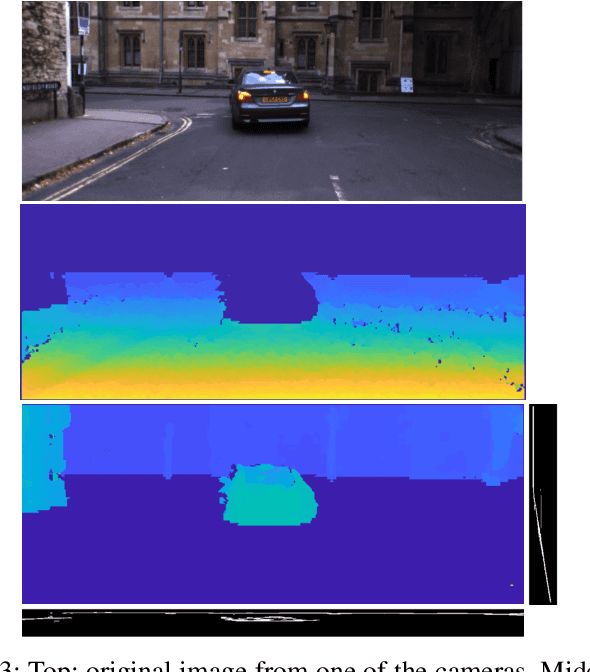

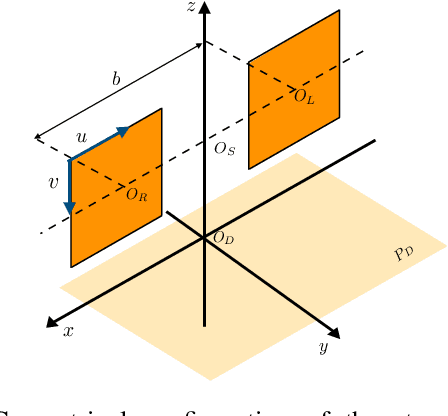

Automated driving techniques have made tremendous progress in recent years, largely thanks to better perception of the environment. To drive safely yet not too conservatively in complex urban environments, data fusion should not only exploit redundant sensing to characterize the surrounding obstacles, but also describe uncertainties and errors beyond presence/absence (be it binary or probabilistic). This paper introduces an enriched representation of the world, more precisely of the potential existence of obstacles, through an evidential grid map. A method to create this representation from two very different sensors, a laser scanner and a stereo camera, is presented along with algorithms for data fusion and temporal updates. This work allows better handling of the dynamic aspects of the urban environment and proper management of errors in order to create a more reliable map. We use the evidential framework based on Dempster-Shafer theory to model how the sensors perceive the environment. A new combination operator is proposed to merge the different sensor grids while accounting for their distinct uncertainties. In addition, we introduce a new long-life layer with high-level states that maintains a global map over the vehicle's entire trajectory and distinguishes between static and dynamic obstacles. Results on a real road dataset show that the environment map can be improved by adding relevant information that would be missed without the proposed approach.
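To make the evidential idea concrete, here is a minimal sketch of fusing one grid cell from two sensors with the classical Dempster rule of combination. It is not the new combination operator the paper proposes, which the abstract does not specify. The frame of discernment {F, O} (free, occupied), the function name `dempster_combine`, and the mass values are all illustrative assumptions.

```python
from itertools import product

# Assumed frame of discernment for one grid cell: Free or Occupied.
FREE = frozenset({"F"})
OCC = frozenset({"O"})
UNKNOWN = frozenset({"F", "O"})  # mass on the whole frame = ignorance


def dempster_combine(m1, m2):
    """Combine two mass functions with the classical Dempster rule.

    Returns the normalized combined masses and the conflict mass K.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            # Empty intersection: the two sources contradict each other.
            conflict += wa * wb
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    norm = 1.0 - conflict
    return {k: v / norm for k, v in combined.items()}, conflict


# Hypothetical masses for the same cell seen by each sensor.
laser = {OCC: 0.7, UNKNOWN: 0.3}
stereo = {FREE: 0.2, OCC: 0.4, UNKNOWN: 0.4}

fused, conflict = dempster_combine(laser, stereo)
for subset, mass in fused.items():
    print(sorted(subset), round(mass, 3))
print("conflict:", round(conflict, 3))
```

Unlike a single occupancy probability, the result keeps separate masses for "occupied", "free", and "unknown", and the conflict mass shows how strongly the two sensors disagree about the cell.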
