Fundamental Performance Limits on Terahertz Wireless Links Imposed by Group Velocity Dispersion

Apr 26, 2021

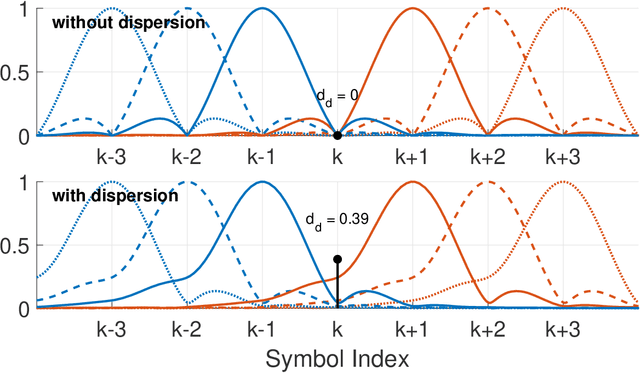

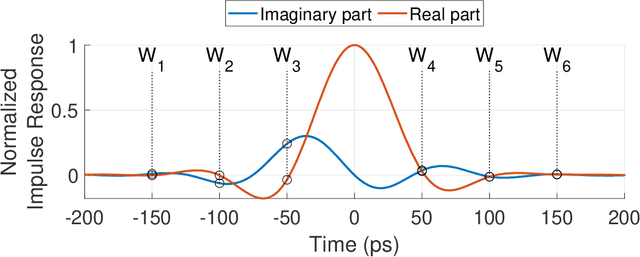

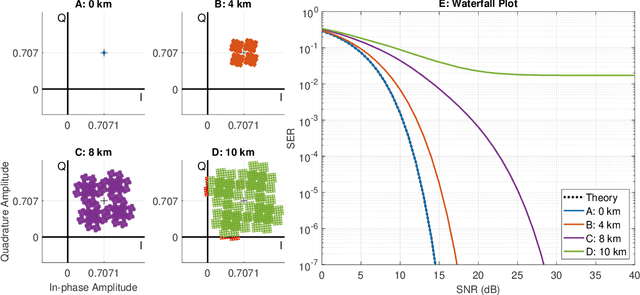

We develop a theoretical framework and numerical simulations, grounded in experimental work, that quantify how atmospheric group velocity dispersion affects the error rate of wireless terahertz communication links. We present, for the first time, predictions of symbol error rate as a function of link distance, signal bandwidth, signal-to-noise ratio, and atmospheric conditions. These predictions reveal that long-distance, broadband terahertz links may be limited by inter-symbol interference arising from group velocity dispersion rather than by attenuation. In such dispersion-limited links, increasing signal strength does not improve the symbol error rate; consequently, symbol error rate predictions based only on signal-to-noise ratio are invalid in the broadband case. This work establishes a new and necessary foundation for link budget analysis in future long-distance terahertz communication systems, one that accounts for the non-negligible effects of both attenuation and dispersion.
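The abstract's central claim, that dispersion-induced inter-symbol interference can impose an error floor that added signal power cannot remove, can be illustrated with a toy simulation. The Python sketch below is not the authors' framework. It assumes a purely quadratic spectral phase (one constant GVD coefficient β₂, ignoring attenuation and the frequency-dependent, higher-order dispersion of real atmospheric absorption lines), BPSK symbols carried by Gaussian pulses, normalized time units with symbol period Ts = 1, a receiver with no equalization, and an SNR referenced to the peak of the undispersed pulse. All function names and parameter values (`broadened_width`, `disperse`, `bpsk_ser`, T0 = 0.25, and so on) are hypothetical. `broadened_width` uses the standard result that an unchirped Gaussian pulse broadens as T1 = T0·sqrt(1 + (z/L_D)²), with dispersion length L_D = T0²/|β₂|.

```python
# Toy model with hypothetical names and parameters: constant beta2 only,
# no attenuation, BPSK Gaussian pulses, symbol period Ts = 1, no equalizer.
import numpy as np


def broadened_width(t0, beta2_z):
    """1/e half-width of an initially unchirped Gaussian pulse (input
    half-width t0) after accumulated dispersion beta2_z = beta2 * z."""
    return t0 * np.sqrt(1.0 + (beta2_z / t0**2) ** 2)


def disperse(field, dt, beta2_z):
    """Apply the quadratic-phase GVD transfer function exp(i*beta2*z*w^2/2)
    to a sampled baseband field (w = angular offset from the carrier)."""
    w = 2 * np.pi * np.fft.fftfreq(field.size, d=dt)
    return np.fft.ifft(np.fft.fft(field) * np.exp(0.5j * beta2_z * w**2))


def circular_time(n, dt):
    """Time axis in FFT order [0, dt, ..., -dt], so sample 0 sits at t = 0."""
    idx = np.arange(n)
    return np.where(idx < n // 2, idx, idx - n) * dt


def rms_width(field, t):
    """RMS duration of the intensity |field|^2 along time axis t."""
    p = np.abs(field) ** 2
    p = p / p.sum()
    mean = np.sum(t * p)
    return np.sqrt(np.sum((t - mean) ** 2 * p))


def bpsk_ser(beta2_z, snr_db, n_sym=2000, sps=16, t0=0.25, seed=0):
    """Monte Carlo symbol error rate of a hypothetical BPSK link that sends
    one Gaussian pulse per unit time and does no equalization."""
    rng = np.random.default_rng(seed)
    dt = 1.0 / sps
    n = n_sym * sps
    symbols = rng.choice([-1.0, 1.0], size=n_sym)

    # Impulse train circularly convolved (via FFT) with one Gaussian pulse.
    impulses = np.zeros(n)
    impulses[::sps] = symbols
    pulse = np.exp(-circular_time(n, dt) ** 2 / (2 * t0**2))
    tx = np.fft.ifft(np.fft.fft(impulses) * np.fft.fft(pulse))

    rx = disperse(tx, dt, beta2_z)
    # Phase reference: an isolated dispersed pulse sampled at its centre.
    h0 = disperse(pulse, dt, beta2_z)[0]

    # Complex AWGN; SNR is referenced to the unit peak of the undispersed pulse.
    sigma = 10 ** (-snr_db / 20)
    noise = sigma / np.sqrt(2) * (rng.standard_normal(n_sym)
                                  + 1j * rng.standard_normal(n_sym))
    # Symbol-by-symbol sign decision on the phase-aligned sample.
    decisions = np.sign(np.real((rx[::sps] + noise) * np.conj(h0)))
    return np.mean(decisions != symbols)
```

With `beta2_z = 0.75` (that is, z = 12 L_D for T0 = 0.25), each pulse spreads over several symbol periods, so in this toy model `bpsk_ser(0.75, snr_db)` levels off at a nonzero floor as `snr_db` grows, whereas `bpsk_ser(0.0, snr_db)` keeps falling toward zero. Raising the SNR alone does not remove that floor, which mirrors the dispersion-limited behavior the abstract describes.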