From Gameplay to Symbolic Reasoning: Learning SAT Solver Heuristics in the Style of Alpha(Go) Zero

Paper and Code

Feb 14, 2018

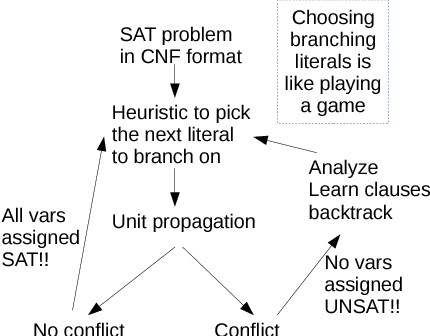

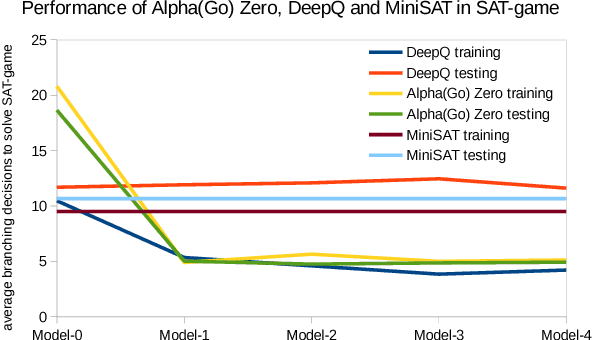

Despite the recent successes of deep neural networks in various fields such as image and speech recognition, natural language processing, and reinforcement learning, we still face big challenges in bringing the power of numeric optimization to symbolic reasoning. Researchers have proposed different avenues such as neural machine translation for proof synthesis, vectorization of symbols and expressions for representing symbolic patterns, and coupling of neural back-ends for dimensionality reduction with symbolic front-ends for decision making. However, these initial explorations are still only point solutions, and bear other shortcomings such as lack of correctness guarantees. In this paper, we present our approach of casting symbolic reasoning as games, and directly harnessing the power of deep reinforcement learning in the style of Alpha(Go) Zero on symbolic problems. Using the Boolean Satisfiability (SAT) problem as showcase, we demonstrate the feasibility of our method, and the advantages of modularity, efficiency, and correctness guarantees.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge