From Checking to Inference: Actual Causality Computations as Optimization Problems

Paper and Code

Jul 06, 2020

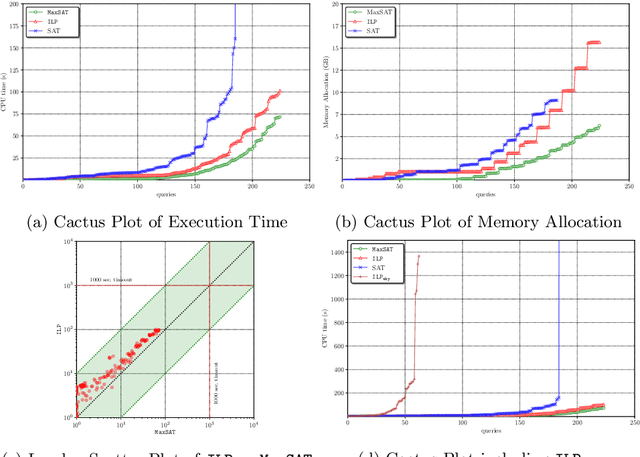

Actual causality is increasingly well understood. Recent formal approaches, proposed by Halpern and Pearl, have made this concept mature enough to be amenable to automated reasoning. Actual causality is especially vital for building accountable, explainable systems. Among other reasons, causality reasoning is computationally hard due to the requirements of counterfactuality and the minimality of causes. Previous approaches presented either inefficient or restricted, and domain-specific, solutions to the problem of automating causality reasoning. In this paper, we present a novel approach to formulate different notions of causal reasoning, over binary acyclic models, as optimization problems, based on quantifiable notions within counterfactual computations. We contribute and compare two compact, non-trivial, and sound integer linear programming (ILP) and Maximum Satisfiability (MaxSAT) encodings to check causality. Given a candidate cause, both approaches identify what a minimal cause is. Also, we present an ILP encoding to infer causality without requiring a candidate cause. We show that both notions are efficiently automated. Using models with more than $8000$ variables, checking is computed in a matter of seconds, with MaxSAT outperforming ILP in many cases. In contrast, inference is computed in a matter of minutes.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge