Forced to Learn: Discovering Disentangled Representations Without Exhaustive Labels


May 01, 2017

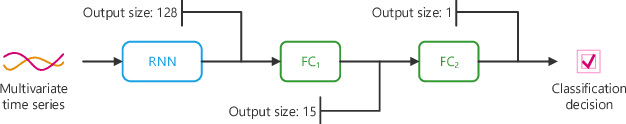

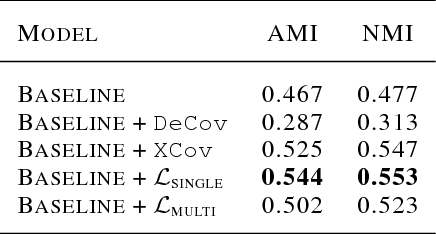

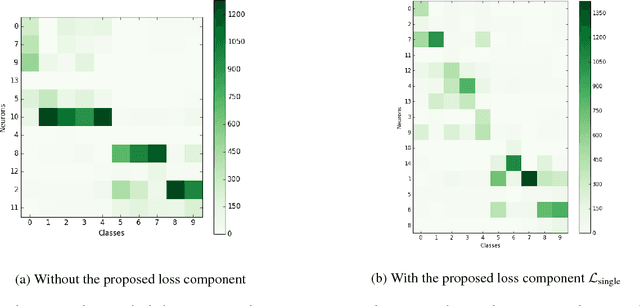

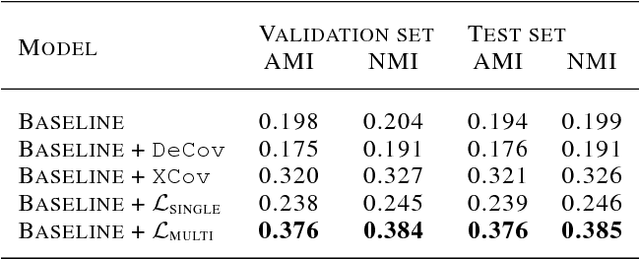

Learning better representations with neural networks is a challenging problem that has been tackled extensively from different perspectives in the past few years. In this work, we focus on learning a representation that can be used for a clustering task and introduce two novel loss components that substantially improve the quality of the resulting clusters, are simple to apply to an arbitrary model and cost function, and do not require a complicated training procedure. We evaluate them on the two most common types of models, Recurrent Neural Networks and Convolutional Neural Networks, and show that the proposed approach consistently improves the quality of k-means clustering, as measured by the Adjusted Mutual Information (AMI) score, and outperforms previously proposed methods.
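The abstract does not spell out the two loss components, so the sketch below illustrates only the evaluation protocol it names: cluster the learned representations with k-means, then score the clusters against ground-truth labels with Adjusted Mutual Information. This is a minimal, standard-library-only sketch under stated assumptions, not the authors' code. The toy 2-D "embeddings", the farthest-point initialization (used here instead of k-means++ so the run is deterministic), and all function names are hypothetical. AMI is computed with arithmetic-mean normalization.

```python
import math
import random
from collections import Counter


def entropy(labels):
    """Shannon entropy (natural log) of a labeling."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def mutual_info(u, v):
    """Mutual information (natural log) between two labelings of the same items."""
    n = len(u)
    a, b = Counter(u), Counter(v)
    return sum((nij / n) * math.log(n * nij / (a[i] * b[j]))
               for (i, j), nij in Counter(zip(u, v)).items())


def expected_mutual_info(u, v):
    """Expected MI of two labelings under the hypergeometric (permutation) model."""
    n = len(u)
    lg = math.lgamma  # lgamma(x + 1) == log(x!)
    emi = 0.0
    for ai in Counter(u).values():
        for bj in Counter(v).values():
            for nij in range(max(1, ai + bj - n), min(ai, bj) + 1):
                # log of the hypergeometric probability of a cell count nij
                log_p = (lg(ai + 1) + lg(bj + 1) + lg(n - ai + 1) + lg(n - bj + 1)
                         - lg(n + 1) - lg(nij + 1) - lg(ai - nij + 1)
                         - lg(bj - nij + 1) - lg(n - ai - bj + nij + 1))
                emi += (nij / n) * math.log(n * nij / (ai * bj)) * math.exp(log_p)
    return emi


def ami(u, v):
    """Adjusted Mutual Information with arithmetic-mean normalization."""
    mi, emi = mutual_info(u, v), expected_mutual_info(u, v)
    denom = (entropy(u) + entropy(v)) / 2 - emi
    return 1.0 if denom == 0 else (mi - emi) / denom


def sq_dist(x, y):
    return sum((p - q) ** 2 for p, q in zip(x, y))


def farthest_first_init(points, k):
    """Deterministic seeding: start at points[0], then repeatedly add the farthest point."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda x: min(sq_dist(x, c) for c in centers)))
    return centers


def kmeans(points, k, iters=100):
    """Plain Lloyd's k-means on a list of equal-length tuples; returns cluster labels."""
    centers = farthest_first_init(points, k)
    for _ in range(iters):
        # Assignment step: each point goes to its nearest center.
        labels = [min(range(k), key=lambda c: sq_dist(x, centers[c])) for x in points]
        # Update step: move each center to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [x for x, lab in zip(points, labels) if lab == c]
            new_centers.append(tuple(sum(col) / len(members) for col in zip(*members))
                               if members else centers[c])  # keep an empty cluster's center
        if new_centers == centers:
            break
        centers = new_centers
    return labels


def make_toy_embeddings(n_per_class=20, seed=0):
    """Hypothetical stand-in for learned embeddings: three well-separated 2-D blobs."""
    rng = random.Random(seed)
    points, labels = [], []
    for label, (mx, my) in enumerate([(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]):
        for _ in range(n_per_class):
            points.append((rng.gauss(mx, 0.5), rng.gauss(my, 0.5)))
            labels.append(label)
    return points, labels


if __name__ == "__main__":
    emb, y_true = make_toy_embeddings()
    y_pred = kmeans(emb, k=3)
    print(f"AMI = {ami(y_true, y_pred):.3f}")
```

AMI is invariant to how cluster ids are numbered, so k-means labels need not match the ground-truth ids. It is also corrected for chance: a clustering no better than random scores about 0, while a perfect match scores 1. In practice, scikit-learn's `KMeans` and `adjusted_mutual_info_score` would be the usual choice; the hand-rolled versions here only keep the sketch dependency-free.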
