FMCode: A 3D In-the-Air Finger Motion Based User Login Framework for Gesture Interface

Aug 01, 2018

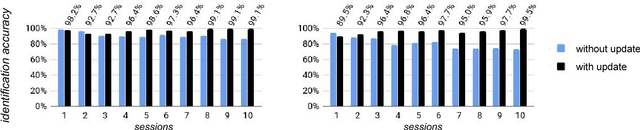

Applications using a gesture-based human-computer interface require a new gesture-based user login method because they lack a traditional input method for typing a password. However, due to various challenges, existing gesture-based authentication systems are generally considered too weak to be useful in practice. In this paper, we propose a unified user login framework using 3D in-air-handwriting, called FMCode. We define new types of features critical to distinguishing legitimate users from attackers and utilize a Support Vector Machine (SVM) for user authentication. The features and data-driven models are specially designed to accommodate minor behavior variations that existing gesture authentication methods neglect. In addition, we use deep neural network approaches to efficiently identify the user based on his or her in-air-handwriting, which avoids the expensive account database search methods employed by existing work. On a dataset we collected from over 100 users, our prototype system achieves 0.1% and 0.5% best Equal Error Rate (EER) for user authentication, as well as 96.7% and 94.3% accuracy for user identification, using two types of gesture input devices. Compared to existing behavioral biometric systems using gesture and in-air-handwriting, our framework achieves state-of-the-art performance. In addition, our experimental results show that FMCode is capable of defending against client-side spoofing attacks and that it performs consistently over the long run. These results and discoveries pave the way to practical usage of gesture-based user login over the gesture interface.
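For readers unfamiliar with the EER metric quoted in the abstract, the following is a minimal sketch of how an Equal Error Rate can be approximated from two sets of matching scores. It is not the authors' evaluation code. The function name `equal_error_rate`, the use of distance-like scores (lower means a closer match), and the sample values are all assumptions made for illustration.

```python
def equal_error_rate(genuine_scores, impostor_scores):
    """Approximate the EER by sweeping a threshold over all observed scores.

    Assumption: scores are distances, so a login attempt is accepted
    when its score is less than or equal to the threshold.
    """
    thresholds = sorted(set(genuine_scores) | set(impostor_scores))
    best_gap, best_eer = None, None
    for t in thresholds:
        # False Reject Rate: legitimate attempts that would be rejected at t.
        frr = sum(s > t for s in genuine_scores) / len(genuine_scores)
        # False Accept Rate: attacker attempts that would be accepted at t.
        far = sum(s <= t for s in impostor_scores) / len(impostor_scores)
        gap = abs(far - frr)
        # Keep the threshold where the two error rates are closest.
        if best_gap is None or gap < best_gap:
            best_gap, best_eer = gap, (far + frr) / 2
    return best_eer


# Hypothetical scores, for illustration only.
genuine = [0.1, 0.2, 0.3, 0.9]
impostor = [0.8, 1.0, 1.2, 1.5]
print(equal_error_rate(genuine, impostor))
```

The EER is the operating point where the false accept rate and the false reject rate are equal, so a lower value indicates better separation between legitimate users and attackers. With a finite set of scores the two rates rarely coincide exactly, which is why this sketch reports the average of the two at the threshold where they are closest.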
