Flow for Meta Control

Jul 17, 2014

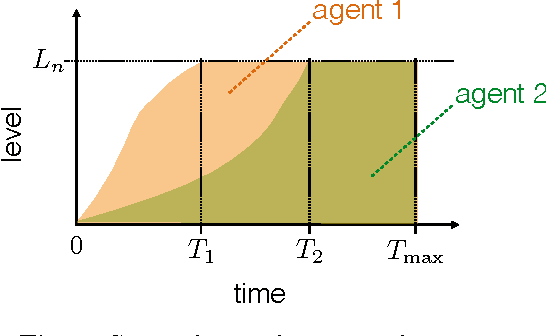

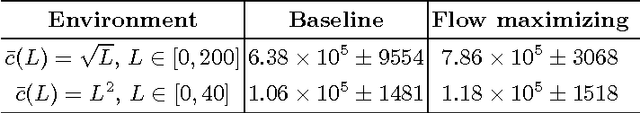

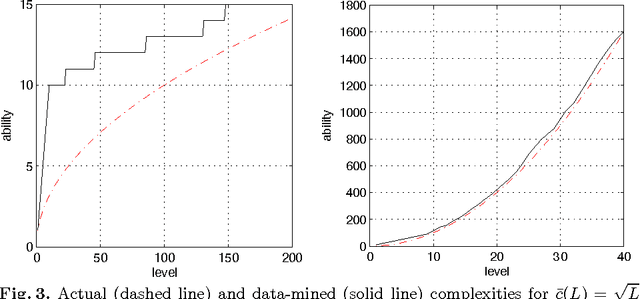

The psychological state of flow has been linked to optimized human performance. A key condition for flow to emerge is a match between a person's abilities and the complexity of the task. We propose a simple computational model of flow for Artificial Intelligence (AI) agents. The model factors the standard agent-environment state into a self-reflective set of the agent's abilities and a socially learned set of the environment's complexity. Maximizing flow serves as a meta-control for the agent. We show how to apply the meta-control policy to a broad class of AI control policies and illustrate our approach with a specific implementation. Results in a synthetic testbed are promising and open interesting directions for future work.
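The abstract does not give the functional form of flow, so the following is only an illustrative sketch of the idea that flow peaks when abilities match complexity and that a meta-controller picks the option with the highest flow. The names `flow` and `choose_task`, the vector representation, and the `1 / (1 + distance)` score are all assumptions made here for illustration and are not taken from the paper.

```python
import math


def flow(abilities, complexity):
    """Hypothetical flow score in (0, 1]; equals 1 when abilities match complexity.

    Assumption: abilities and complexity are equal-length numeric vectors and
    flow decays with their Euclidean distance. The paper may define it differently.
    """
    distance = math.sqrt(sum((a - c) ** 2 for a, c in zip(abilities, complexity)))
    return 1.0 / (1.0 + distance)


def choose_task(abilities, tasks):
    """Illustrative meta-control step: return the name of the task with maximal flow."""
    return max(tasks, key=lambda name: flow(abilities, tasks[name]))


# Made-up example values: one agent and three candidate tasks.
abilities = [3.0, 2.0]
tasks = {
    "easy": [1.0, 1.0],
    "matched": [3.0, 2.0],
    "hard": [6.0, 5.0],
}
best = choose_task(abilities, tasks)
```

With these made-up values, the task whose complexity equals the agent's abilities receives the highest score, so the meta-controller would select it over tasks that are too easy or too hard.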
