Fixed points of monotonic and scalable neural networks


Jul 01, 2021

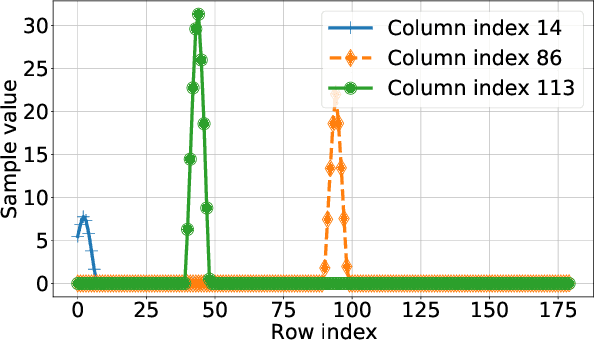

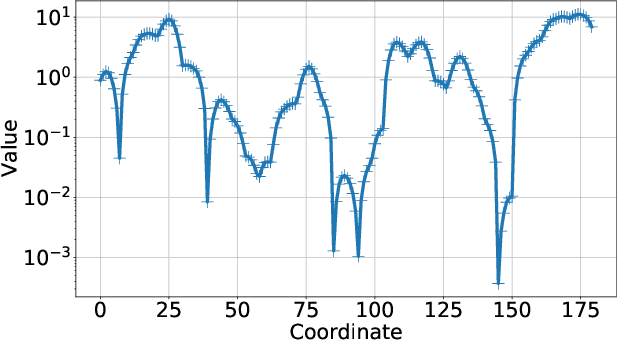

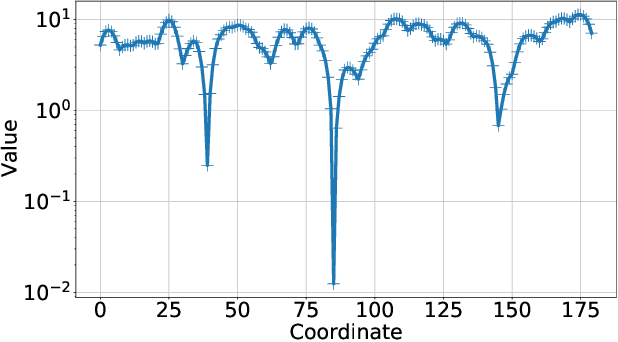

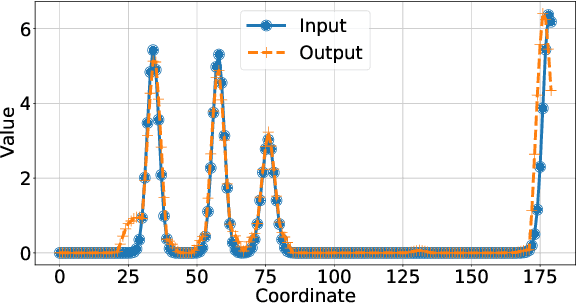

We derive conditions for the existence of fixed points of neural networks, an important step toward understanding their behavior in modern applications involving autoencoders and loop unrolling techniques, among others. In particular, we focus on networks with nonnegative inputs and nonnegative network parameters, as often considered in the literature. We show that such networks can be recognized as monotonic and (weakly) scalable functions within the framework of nonlinear Perron-Frobenius theory. This fact enables us to derive conditions under which the fixed point set of the neural networks is nonempty. These conditions are weaker than those obtained recently using arguments in convex analysis, which are typically based on the assumption that the activation functions are nonexpansive. Furthermore, we prove that the fixed point set of monotonic and weakly scalable neural networks is often an interval, which degenerates to a point in the case of scalable networks. The main results of this paper are verified in numerical simulations, where we consider an autoencoder-type network that first compresses angular power spectra in massive MIMO systems and then reconstructs the input spectra from the compressed signal.
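As a rough illustration of the scalable case described in the abstract, the following minimal sketch iterates a one-layer network with nonnegative weights and biases until it reaches a fixed point. Everything in it is an assumption made for illustration and is not taken from the paper: the weights `W`, the biases `b`, the activation `sigma(z) = z / (1 + z)`, and the helper names `layer` and `fixed_point`. The sketch also assumes the usual definitions from the interference-function literature, namely that a map `T` is monotonic if `x <= y` implies `T(x) <= T(y)`, and scalable if `T(a * x) < a * T(x)` for every `a > 1`; the paper's exact definitions may differ.

```python
def layer(x, W, b):
    """One layer: elementwise sigma(W x + b), with sigma(z) = z / (1 + z).

    W and b are nonnegative (illustrative values, not from the paper).
    sigma is nonnegative, nondecreasing, and concave on z >= 0.
    """
    out = []
    for row, bias in zip(W, b):
        z = sum(w * xi for w, xi in zip(row, x)) + bias
        out.append(z / (1.0 + z))
    return out


def fixed_point(T, x0, tol=1e-12, max_iter=10_000):
    """Plain fixed point iteration x <- T(x), stopped on a sup-norm tolerance."""
    x = list(x0)
    for _ in range(max_iter):
        x_next = T(x)
        if max(abs(a - c) for a, c in zip(x_next, x)) < tol:
            return x_next
        x = x_next
    raise RuntimeError("fixed point iteration did not converge")


# Hypothetical nonnegative parameters chosen for illustration only.
W = [[0.5, 0.2], [0.1, 0.7]]
b = [0.3, 0.1]


def T(x):
    return layer(x, W, b)


# Start from two very different nonnegative inputs.
x_low = fixed_point(T, [0.0, 0.0])
x_high = fixed_point(T, [10.0, 10.0])

print("fixed point from zero start: ", x_low)
print("fixed point from large start:", x_high)
```

In this toy example both starting points lead to the same vector, which is consistent with the claim that the fixed point set of a scalable network degenerates to a single point. Convergence here also follows from the map being a contraction for these particular parameters, so the sketch does not by itself demonstrate the weaker conditions derived in the paper.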
