Finite Dictionary Variants of the Diffusion KLMS Algorithm


Sep 09, 2015

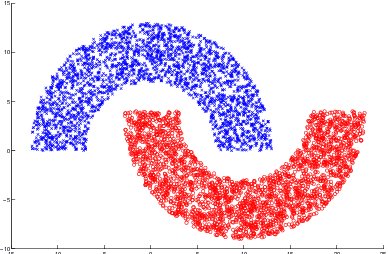

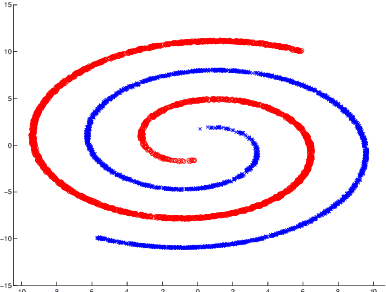

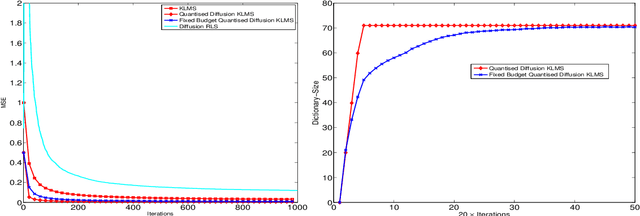

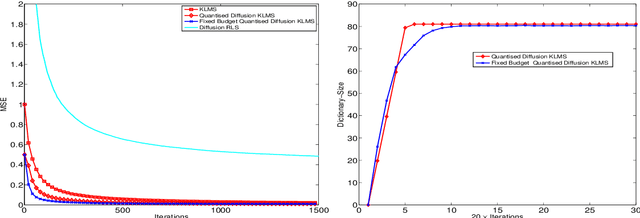

Diffusion-based distributed learning has proved to be a viable approach for learning over a network when the data are linearly separable. Existing approaches, however, are largely limited to that linear setting and need to be extended to scenarios in which a nonlinearity must be learned. In such scenarios, the recently proposed diffusion kernel least mean squares (KLMS) algorithm has been found to outperform diffusion least mean squares (LMS). The drawback of diffusion KLMS is that it requires unbounded storage for past observations (the dictionary). This paper formulates diffusion KLMS in a fixed-budget setting, so that the storage requirement is curtailed while appreciable convergence performance is maintained. Two new algorithms are proposed: quantised diffusion KLMS (QDKLMS) and fixed budget diffusion KLMS (FBDKLMS). Simulations validating them against KLMS indicate that both deliver better performance than KLMS while reducing the dictionary storage requirement.
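To make the dictionary-growth problem concrete, the following is a minimal single-node sketch of a quantised KLMS update in Python. It is not the paper's QDKLMS: the abstract does not give the update equations, so the diffusion (neighbour-combination) step is omitted, and the Gaussian kernel, the function names `qklms_fit` and `qklms_predict`, and the parameters `step`, `sigma` and `quant_radius` are all assumptions made for illustration. The idea it shows is that a new input is added to the dictionary only if it lies farther than `quant_radius` from every stored centre; otherwise the coefficient of the nearest centre absorbs the update.

```python
import numpy as np


def gaussian_kernel(centers, u, sigma):
    """Gaussian kernel between each row of `centers` and the vector `u`."""
    diff = centers - u
    return np.exp(-np.sum(diff ** 2, axis=1) / (2.0 * sigma ** 2))


def qklms_fit(inputs, targets, step=0.5, sigma=1.0, quant_radius=0.3):
    """Single-node quantised KLMS sketch (hypothetical helper, not from the paper).

    Returns the dictionary centres and their expansion coefficients.
    """
    inputs = np.asarray(inputs, dtype=float)
    inputs = inputs.reshape(len(inputs), -1)  # one row per sample
    targets = np.asarray(targets, dtype=float)

    # Initialise the dictionary with the first sample (prediction starts at 0).
    centers = [inputs[0]]
    coeffs = [step * targets[0]]

    for u, d in zip(inputs[1:], targets[1:]):
        c = np.array(centers)
        # Prediction error of the current kernel expansion.
        err = d - np.dot(coeffs, gaussian_kernel(c, u, sigma))
        # Distance from the new input to every stored centre.
        dists = np.linalg.norm(c - u, axis=1)
        nearest = int(np.argmin(dists))
        if dists[nearest] <= quant_radius:
            # Quantise: merge the update into the nearest existing centre.
            coeffs[nearest] += step * err
        else:
            # Input is novel enough: grow the dictionary by one entry.
            centers.append(u)
            coeffs.append(step * err)

    return np.array(centers), np.array(coeffs)


def qklms_predict(centers, coeffs, u, sigma=1.0):
    """Evaluate the learned kernel expansion at a single input `u`."""
    u = np.asarray(u, dtype=float).reshape(-1)
    return float(np.dot(coeffs, gaussian_kernel(centers, u, sigma)))
```

With `quant_radius=0`, only exact repeats of a stored centre are merged, so on typical data the dictionary grows with the number of samples, as in plain KLMS. A larger radius caps the dictionary size at the number of points that can be packed into the input region more than `quant_radius` apart, at some cost in approximation accuracy.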
