Fine color guidance in diffusion models and its application to image compression at extremely low bitrates

Paper and Code

Apr 10, 2024

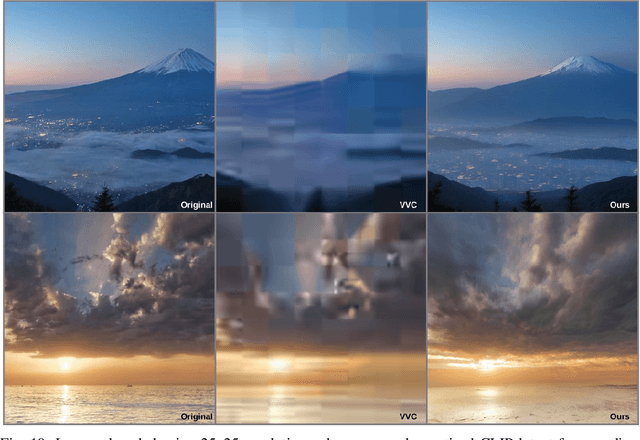

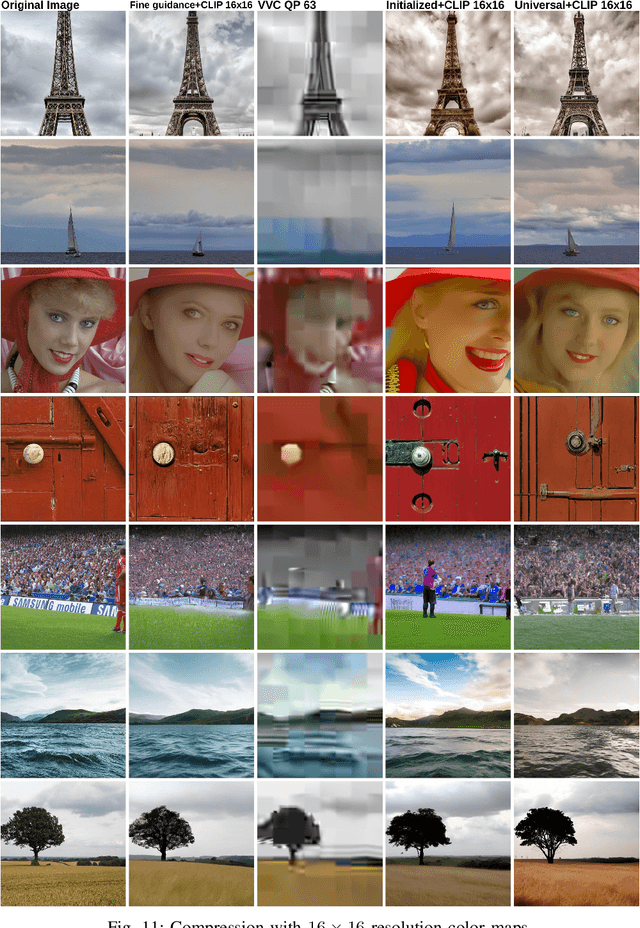

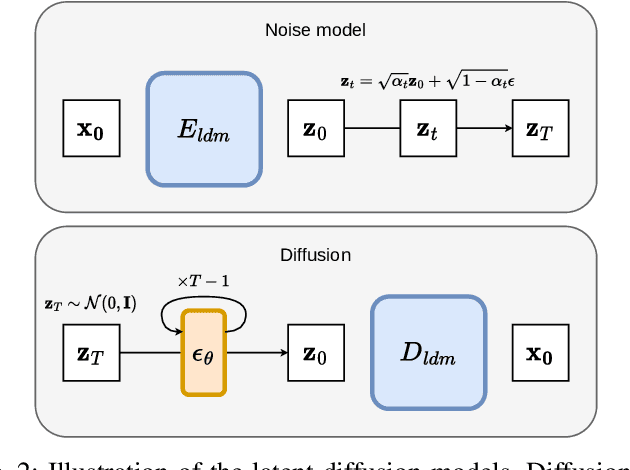

This study addresses the challenge of, without training or fine-tuning, controlling the global color aspect of images generated with a diffusion model. We rewrite the guidance equations to ensure that the outputs are closer to a known color map, and this without hindering the quality of the generation. Our method leads to new guidance equations. We show in the color guidance context that, the scaling of the guidance should not decrease but remains high throughout the diffusion process. In a second contribution, our guidance is applied in a compression framework, we combine both semantic and general color information on the image to decode the images at low cost. We show that our method is effective at improving fidelity and realism of compressed images at extremely low bit rates, when compared to other classical or more semantic oriented approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge