Finding hidden-feature depending laws inside a data set and classifying it using Neural Network


Jan 25, 2021

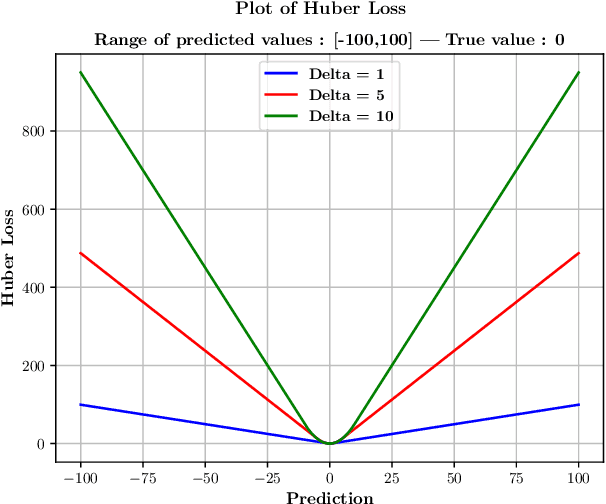

The logcosh loss function for neural networks was developed to combine two advantages. Like the absolute-error loss, it does not overweight outliers. Like the mean-square-error loss, it has a continuous derivative near the minimum, which makes the final phase of learning easier. As one soon experiences in practice, when the data are clustered, an artificial neural network (ANN) trained with logcosh loss learns the value of the larger cluster rather than the mean of the two. Moreover, when such an ANN is used to regress a set-valued function, it learns a value close to one of the choices, that is, one branch of the set-valued function, whereas a network trained with mean-square error learns a value in between. This work proposes a method that uses ANNs with logcosh loss to find the branches of set-valued mappings in parameter-outcome sample sets and to classify the samples according to those branches.
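The branch-selecting behaviour described above can be seen without training a network. The loss log(cosh(r)) behaves like r²/2 for small residuals and like |r| − log 2 for large ones, so its optimum acts like a mean locally and like a median globally. The sketch below is an illustration, not the paper's method. It replaces the ANN with the simplest possible model, a single constant prediction, and finds the best constant by grid search on hypothetical two-branch data: 70 samples on the branch y = 0 and 30 on the branch y = 10. The names `logcosh` and `best_constant` are illustrative, and the data are invented for the example.

```python
import numpy as np


def logcosh(residual):
    """Numerically stable log(cosh(r)) = |r| + log1p(exp(-2|r|)) - log(2)."""
    a = np.abs(residual)
    return a + np.log1p(np.exp(-2.0 * a)) - np.log(2.0)


def best_constant(targets, loss, grid):
    """Return the constant prediction on `grid` that minimizes the mean loss."""
    # residuals[i, j] = grid[j] - targets[i]
    residuals = grid[None, :] - targets[:, None]
    mean_loss = loss(residuals).mean(axis=0)
    return grid[np.argmin(mean_loss)]


# Hypothetical set-valued data: for the same input, 70 samples lie on the
# branch y = 0 and 30 samples lie on the branch y = 10.
targets = np.concatenate([np.zeros(70), np.full(30, 10.0)])
grid = np.linspace(-2.0, 12.0, 14001)  # step size 0.001

c_mse = best_constant(targets, lambda r: r ** 2, grid)
c_logcosh = best_constant(targets, logcosh, grid)

print(f"MSE optimum:     {c_mse:.3f}")
print(f"logcosh optimum: {c_logcosh:.3f}")
```

The mean-square-error optimum is the mean, 3.0, which lies on neither branch. The logcosh optimum satisfies 70·tanh(c) + 30·tanh(c − 10) = 0. This gives c ≈ atanh(3/7) ≈ 0.46, close to the larger branch. The small offset from 0 matches the abstract's wording that the network learns a value *close to* one of the choices.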
