Filter design for small target detection on infrared imagery using normalized-cross-correlation layer

Paper and Code

Jun 15, 2020

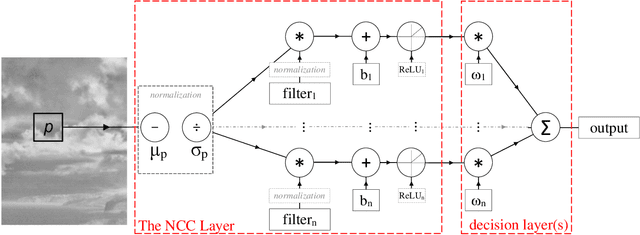

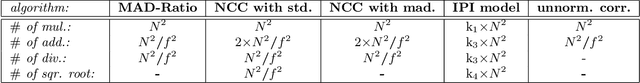

In this paper, we introduce a machine learning approach to the problem of infrared small target detection filter design. For this purpose, similarly to a convolutional layer of a neural network, the normalized-cross-correlational (NCC) layer, which we utilize for designing a target detection/recognition filter bank, is proposed. By employing the NCC layer in a neural network structure, we introduce a framework, in which supervised training is used to calculate the optimal filter shape and the optimum number of filters required for a specific target detection/recognition task on infrared images. We also propose the mean-absolute-deviation NCC (MAD-NCC) layer, an efficient implementation of the proposed NCC layer, designed especially for FPGA systems, in which square root operations are avoided for real-time computation. As a case study we work on dim-target detection on mid-wave infrared imagery and obtain the filters that can discriminate a dim target from various types of background clutter, specific to our operational concept.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge