Fighting Contextual Bandits with Stochastic Smoothing

Paper and Code

Oct 11, 2018

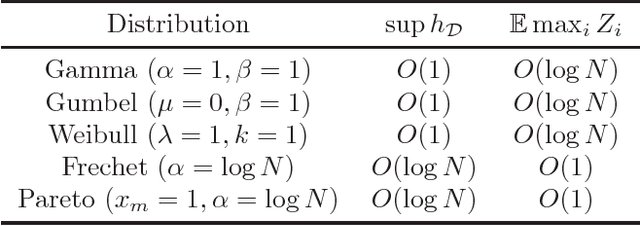

We introduce a new stochastic smoothing perspective to study adversarial contextual bandit problems. We propose a general algorithm template that represents random-perturbation-based algorithms and identify several perturbation distributions that lead to strong regret bounds. Using the idea of smoothness, we provide an $O(\sqrt{T})$ zero-order bound for the vanilla algorithm and an $O((L_T^{*})^{2/3})$ first-order bound for the clipped version. These bounds hold when the algorithms are used with a variety of distributions that have a bounded hazard rate. Our algorithm template includes EXP4 as a special case corresponding to the Gumbel perturbation. Our regret bounds match existing results for EXP4 without relying on the specific properties of the algorithm.
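The link between the Gumbel perturbation and EXP4 can be illustrated with the Gumbel-max trick: adding independent standard Gumbel noise to the scores $-\eta L_i$ and taking the argmax selects expert $i$ with probability proportional to $\exp(-\eta L_i)$, which is the exponential-weights distribution that EXP4 maintains over experts. The following is a minimal sketch of that identity only, not the paper's algorithm: the function names, the learning rate `eta`, and the example loss values are assumptions made for illustration, and the cumulative losses are taken as given rather than estimated from bandit feedback.

```python
import numpy as np


def exp_weights_probs(cum_losses, eta):
    """Exponential-weights distribution: p_i proportional to exp(-eta * L_i)."""
    scores = -eta * np.asarray(cum_losses, dtype=float)
    scores -= scores.max()  # shift for numerical stability; ratios are unchanged
    weights = np.exp(scores)
    return weights / weights.sum()


def gumbel_perturbed_leader_probs(cum_losses, eta, n_samples=200_000, seed=0):
    """Monte Carlo estimate of how often each expert is the perturbed leader.

    Each sample draws i.i.d. standard Gumbel noise, adds it to the scores
    -eta * L_i, and records which expert attains the maximum.
    """
    rng = np.random.default_rng(seed)
    scores = -eta * np.asarray(cum_losses, dtype=float)
    noise = rng.gumbel(size=(n_samples, scores.size))
    leaders = np.argmax(scores + noise, axis=1)
    return np.bincount(leaders, minlength=scores.size) / n_samples


if __name__ == "__main__":
    losses = [3.0, 1.0, 2.0]  # hypothetical cumulative losses of three experts
    print(exp_weights_probs(losses, eta=0.5))
    print(gumbel_perturbed_leader_probs(losses, eta=0.5))
```

The two printed vectors should agree up to sampling error. Replacing `rng.gumbel` with another noise source is one way to experiment with other perturbation distributions, although the resulting selection probabilities then generally have no closed form.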
