Fibonacci and k-Subsecting Recursive Feature Elimination

Paper and Code

Jul 29, 2020

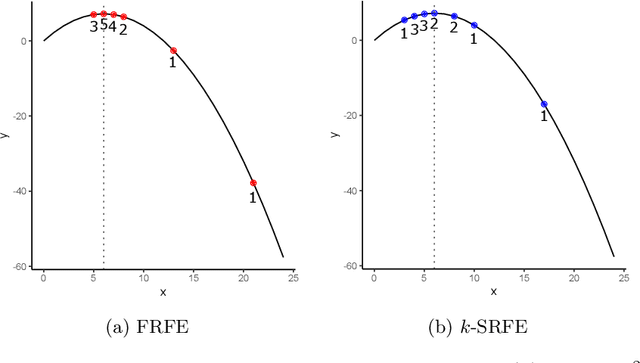

Feature selection is a data mining task with the potential of speeding up classification algorithms, enhancing model comprehensibility, and improving learning accuracy. However, finding a subset of features that is optimal in terms of predictive accuracy is usually computationally intractable. Out of several heuristic approaches to dealing with this problem, the Recursive Feature Elimination (RFE) algorithm has received considerable interest from data mining practitioners. In this paper, we propose two novel algorithms inspired by RFE, called Fibonacci- and k-Subsecting Recursive Feature Elimination, which remove features in logarithmic steps, probing the wrapped classifier more densely for the more promising feature subsets. The proposed algorithms are experimentally compared against RFE on 28 highly multidimensional datasets and evaluated in a practical case study involving 3D electron density maps from the Protein Data Bank. The results show that Fibonacci and k-Subsecting Recursive Feature Elimination are capable of selecting a smaller subset of features much faster than standard RFE, while achieving comparable predictive performance.
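The idea of removing features in growing steps, rather than one at a time, can be illustrated with a minimal sketch. The abstract does not spell out the elimination rule, so the schedule below is an assumption made purely for illustration: the number of features dropped at each step follows the Fibonacci sequence (1, 2, 3, 5, 8, ...). The function names `fibonacci_elimination_schedule` and `rfe_schedule` are hypothetical and do not come from the paper or its code.

```python
def fibonacci_elimination_schedule(n_features, min_features=1):
    """List the feature-subset sizes visited when the number of removed
    features grows along the Fibonacci sequence (1, 2, 3, 5, 8, ...).

    Illustrative assumption only, not the authors' exact procedure.
    """
    sizes = [n_features]
    step, next_step = 1, 2
    remaining = n_features
    # Stop once the next removal would leave fewer than min_features.
    while remaining - step >= min_features:
        remaining -= step
        sizes.append(remaining)
        step, next_step = next_step, step + next_step
    return sizes


def rfe_schedule(n_features, min_features=1):
    """Subset sizes visited by one-feature-at-a-time elimination."""
    return list(range(n_features, min_features - 1, -1))


if __name__ == "__main__":
    fib_sizes = fibonacci_elimination_schedule(100)
    print(fib_sizes)
    print(len(fib_sizes), "subset sizes vs", len(rfe_schedule(100)))
```

Under this assumed schedule, each subset size corresponds to one fit of the wrapped classifier, so the number of fits grows roughly logarithmically with the number of features instead of linearly. The schedule is also densest near the full feature set and sparser later; how the proposed algorithms decide where to probe more densely is not described in the abstract and is not modeled here.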
