FedQV: Leveraging Quadratic Voting in Federated Learning

Paper and Code

Jan 02, 2024

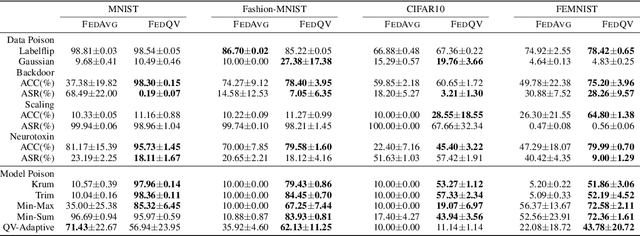

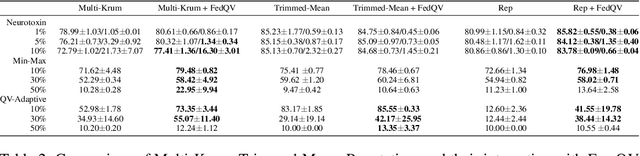

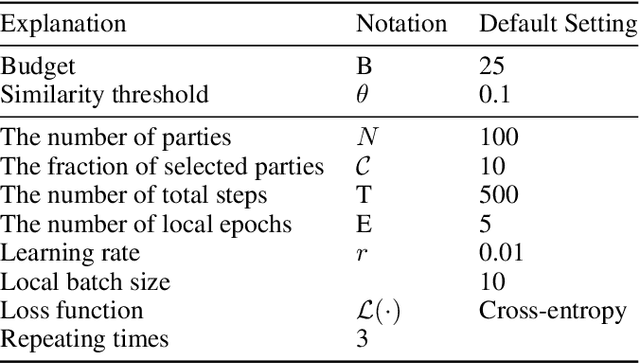

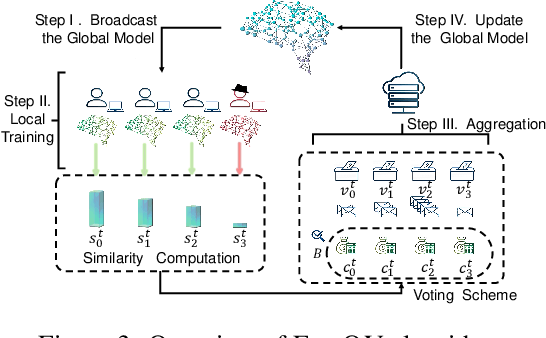

Federated Learning (FL) permits different parties to collaboratively train a global model without disclosing their respective local labels. A crucial step of FL, that of aggregating local models to produce the global one, shares many similarities with public decision-making, and with elections in particular. In that context, a major weakness of FL, namely its vulnerability to poisoning attacks, can be interpreted as a consequence of the "one person, one vote" (henceforth 1p1v) principle underpinning most contemporary aggregation rules. In this paper, we propose FedQV, a novel aggregation algorithm built upon the quadratic voting scheme, recently proposed as a better alternative to 1p1v-based elections. Our theoretical analysis establishes that FedQV is a truthful mechanism, in which bidding according to one's true valuation is a dominant strategy, and that it achieves a convergence rate matching those of state-of-the-art methods. Furthermore, our empirical analysis using multiple real-world datasets validates the superior performance of FedQV against poisoning attacks. It also shows that combining FedQV with unequal voting "budgets" assigned according to a reputation score increases its performance benefits even further. Finally, we show that FedQV can be easily combined with Byzantine-robust privacy-preserving mechanisms to enhance its robustness against both poisoning and privacy attacks.
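To make the voting analogy concrete, the following is a minimal sketch of the generic quadratic voting rule applied to model aggregation. It is not the FedQV algorithm itself, whose details the abstract does not give. It assumes only the standard quadratic voting cost, where casting `v` votes costs `v**2` credits, so a party that spends `c` credits obtains `sqrt(c)` votes. The names `quadratic_votes` and `aggregate`, the per-party credit values, and the representation of updates as flat lists of floats are all hypothetical choices made for illustration.

```python
import math


def quadratic_votes(credits):
    """Votes bought with `credits` when casting v votes costs v**2 credits."""
    if credits < 0:
        raise ValueError("credits must be non-negative")
    return math.sqrt(credits)


def aggregate(updates, credits):
    """Average the local updates, weighting each by its quadratic vote count.

    `updates` is a list of equal-length lists of floats (one per party);
    `credits` holds the (hypothetical) credits each party spends.
    """
    if len(updates) != len(credits) or not updates:
        raise ValueError("need one credit value per update")
    votes = [quadratic_votes(c) for c in credits]
    total = sum(votes)
    if total == 0:
        raise ValueError("at least one party must spend credits")
    dim = len(updates[0])
    return [
        sum(v * u[i] for v, u in zip(votes, updates)) / total
        for i in range(dim)
    ]


# Two parties: the first spends 9 credits, the second spends 1.
updates = [[1.0, 0.0], [0.0, 1.0]]
credits = [9.0, 1.0]
global_update = aggregate(updates, credits)
```

In this toy setting the first party holds 3 of the 4 votes, so its weight is 0.75 rather than the 0.9 it would get if weight were linear in credits. This dampening of any single party's influence is the property of quadratic voting that the sketch is meant to illustrate.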
