Features Reconstruction Disentanglement Cloth-Changing Person Re-Identification

Paper and Code

Jul 15, 2024

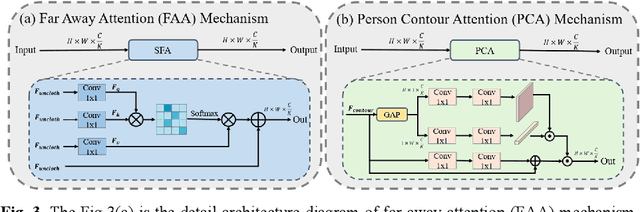

Cloth-changing person re-identification (CC-ReID) aims to retrieve specific pedestrians in scenarios where they change clothes. Its main challenge is to disentangle clothing-related features from clothing-unrelated features. Most existing approaches force the model to learn clothing-unrelated features by changing the color of the clothes. However, because no ground truth is available, these methods inevitably introduce noise, which destroys discriminative features and leads to an uncontrollable disentanglement process. In this paper, we propose a new person re-identification network called features reconstruction disentanglement ReID (FRD-ReID), which can controllably decouple the clothing-unrelated and clothing-related features. Specifically, we first introduce the human parsing mask as the ground truth of the reconstruction process. At the same time, we propose the far away attention (FAA) mechanism and the person contour attention (PCA) mechanism for clothing-unrelated features and pedestrian contour features, respectively, to improve the feature reconstruction efficiency. In the testing phase, we directly discard the clothing-related features for inference, which leads to a controllable disentanglement process. We conducted extensive experiments on the PRCC, LTCC, and VC-Clothes datasets and demonstrated that our method outperforms existing state-of-the-art methods.
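The inference step described in the abstract (discarding clothing-related features before matching) can be illustrated with a small sketch. This is not the authors' implementation: the feature layout (the first `split` dimensions holding clothing-unrelated features), the helper names `identity_part` and `rank_gallery`, and the use of cosine similarity for retrieval are all assumptions made only for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def identity_part(feature, split):
    # Assumed layout (hypothetical): the first `split` dimensions are
    # clothing-unrelated, the remaining ones are clothing-related.
    return feature[:split]


def rank_gallery(query, gallery, split):
    """Rank gallery indices by similarity, using only clothing-unrelated dimensions."""
    q = identity_part(query, split)
    scores = [(cosine(q, identity_part(g, split)), i) for i, g in enumerate(gallery)]
    # Highest similarity first; the sort is stable, so ties keep gallery order.
    return [i for _, i in sorted(scores, key=lambda pair: -pair[0])]


# Toy data: gallery[0] shares the query's clothing dimensions but not its
# identity dimensions; gallery[1] is the opposite.
query = [1.0, 0.0, 5.0, 5.0]
gallery = [
    [0.0, 1.0, 5.0, 5.0],
    [1.0, 0.1, -5.0, 0.0],
]
ranking = rank_gallery(query, gallery, split=2)
```

In this toy case, matching on the full vectors would favor `gallery[0]` because of the shared clothing dimensions, while matching on the clothing-unrelated part alone ranks `gallery[1]` first.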
