Feature Products Yield Efficient Networks

Paper and Code

Aug 18, 2020

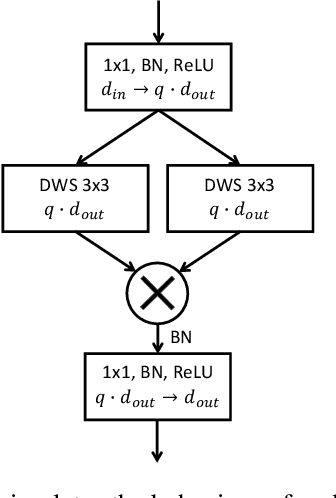

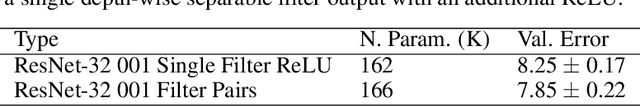

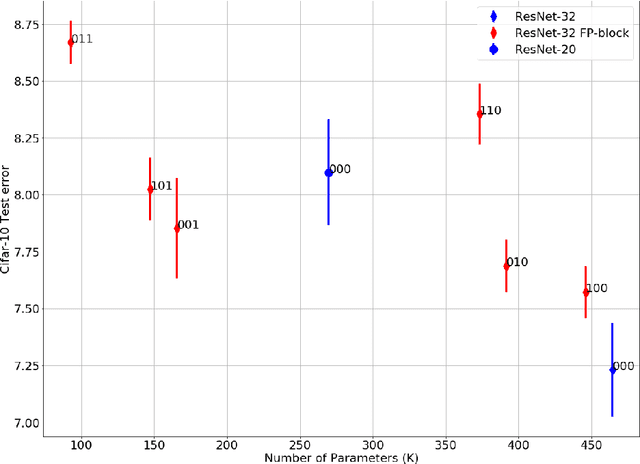

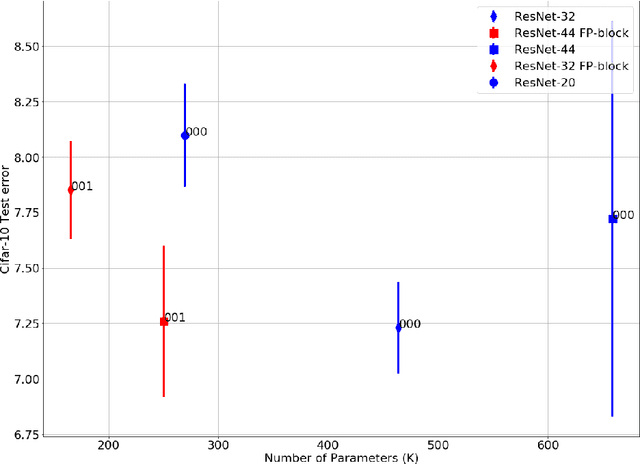

We introduce Feature-Product networks (FP-nets) as a novel deep-network architecture based on a new building block inspired by principles of biological vision. For each input feature map, a so-called FP-block learns two different filters, the outputs of which are then multiplied. Such FP-blocks are inspired by models of end-stopped neurons, which are common in cortical areas V1 and especially in V2. Convolutional neural networks can be transformed into parameter-efficient FP-nets by substituting conventional blocks of regular convolutions with FP-blocks. In this way, we create several novel FP-nets based on state-of-the-art networks and evaluate them on the Cifar-10 and ImageNet challenges. We show that the use of FP-blocks reduces the number of parameters significantly without decreasing generalization capability. Since so far heuristics and search algorithms have been used to find more efficient networks, it seems remarkable that we can obtain even more efficient networks based on a novel bio-inspired design principle.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge