Fast Unconstrained Optimization via Hessian Averaging and Adaptive Gradient Sampling Methods

Paper and Code

Aug 14, 2024

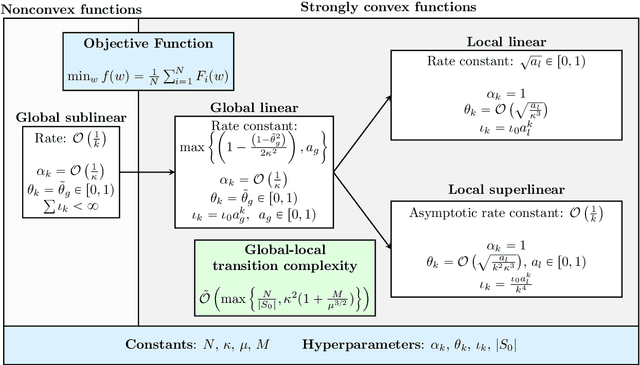

We consider minimizing finite-sum and expectation objective functions via Hessian-averaging based subsampled Newton methods. These methods allow for gradient inexactness and have fixed per-iteration Hessian approximation costs. Recent work (Na et al. 2023) demonstrated that Hessian averaging can be utilized to achieve a fast $\mathcal{O}\left(\sqrt{\tfrac{\log k}{k}}\right)$ local superlinear convergence rate for strongly convex functions in high probability, while maintaining fixed per-iteration Hessian costs. These methods, however, require gradient exactness and strong convexity, which pose challenges for their practical implementation. To address this concern, we consider Hessian-averaged methods that allow gradient inexactness via norm condition based adaptive-sampling strategies. For the finite-sum problem, we utilize deterministic sampling techniques, which lead to global linear and sublinear convergence rates for strongly convex and nonconvex functions, respectively. In this setting, we are able to derive an improved deterministic local superlinear convergence rate of $\mathcal{O}\left(\tfrac{1}{k}\right)$. For the expectation problem, we utilize stochastic sampling techniques and derive global linear and sublinear rates for strongly convex and nonconvex functions, as well as an $\mathcal{O}\left(\tfrac{1}{\sqrt{k}}\right)$ local superlinear convergence rate, all in expectation. We present novel analysis techniques that differ from the previous probabilistic results. Additionally, we propose scalable and efficient variations of these methods via diagonal approximations and derive the novel diagonally-averaged Newton (Dan) method for large-scale problems. Our numerical results demonstrate that Hessian averaging not only helps with convergence, but can also lead to state-of-the-art performance on difficult problems such as CIFAR100 classification with ResNets.
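To make the idea of Hessian averaging concrete, the following is a minimal sketch, not the authors' implementation. It assumes a toy two-dimensional ridge-regularized least-squares finite sum, uniform averaging of subsampled Hessians, unit step sizes, and exact full gradients (the paper's norm-condition adaptive gradient sampling is not modeled here). All function names, the data, and the parameter values are hypothetical and chosen only for illustration.

```python
import random

def make_data(n, rng):
    # Hypothetical synthetic data: b = a . w_true + small noise.
    w_true = (1.5, -0.5)
    data = []
    for _ in range(n):
        a = (rng.gauss(0, 1), rng.gauss(0, 1))
        b = a[0] * w_true[0] + a[1] * w_true[1] + 0.1 * rng.gauss(0, 1)
        data.append((a, b))
    return data

def full_gradient(w, data, lam):
    # Gradient of (1/n) sum 0.5*(a.w - b)^2 + 0.5*lam*|w|^2.
    g0 = g1 = 0.0
    for a, b in data:
        r = a[0] * w[0] + a[1] * w[1] - b
        g0 += r * a[0]
        g1 += r * a[1]
    n = len(data)
    return (g0 / n + lam * w[0], g1 / n + lam * w[1])

def sampled_hessian(batch, lam):
    # Subsampled Hessian stored as (h00, h01, h11) of a symmetric 2x2 matrix.
    h00 = h01 = h11 = 0.0
    for a, _ in batch:
        h00 += a[0] * a[0]
        h01 += a[0] * a[1]
        h11 += a[1] * a[1]
    m = len(batch)
    return (h00 / m + lam, h01 / m, h11 / m + lam)

def solve_2x2(h, g):
    # Solve H x = g for symmetric 2x2 H via Cramer's rule.
    h00, h01, h11 = h
    det = h00 * h11 - h01 * h01
    return ((h11 * g[0] - h01 * g[1]) / det,
            (h00 * g[1] - h01 * g[0]) / det)

def hessian_averaged_newton(data, lam=0.1, batch_size=8, iters=200, seed=0):
    rng = random.Random(seed)
    w = (0.0, 0.0)
    h_avg = (0.0, 0.0, 0.0)
    for k in range(iters):
        # Fixed per-iteration Hessian cost: one small batch per step.
        batch = rng.sample(data, batch_size)
        h_k = sampled_hessian(batch, lam)
        # Running uniform average of all subsampled Hessians seen so far.
        h_avg = tuple(ha + (hk - ha) / (k + 1) for ha, hk in zip(h_avg, h_k))
        # Assumption: exact gradient, unit step, no line search.
        g = full_gradient(w, data, lam)
        step = solve_2x2(h_avg, g)
        w = (w[0] - step[0], w[1] - step[1])
    return w

data = make_data(200, random.Random(0))
w = hessian_averaged_newton(data)
g = full_gradient(w, data, 0.1)
grad_norm = (g[0] ** 2 + g[1] ** 2) ** 0.5
```

Each iteration touches only `batch_size` Hessian samples, yet the averaged matrix draws on every batch seen so far, so its error shrinks as the iteration count grows. For this quadratic toy problem the Hessian does not depend on `w`, which makes the effect of averaging easy to observe; in the general setting the averaged Hessians are evaluated at different iterates.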
