Failures in Perspective-taking of Multimodal AI Systems

Paper and Code

Sep 20, 2024

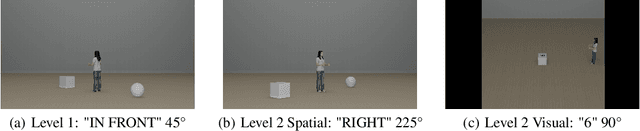

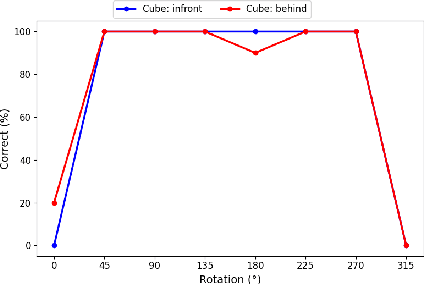

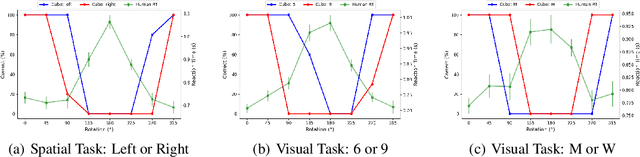

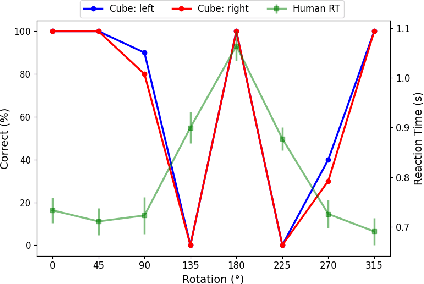

This study extends previous research on spatial representations in multimodal AI systems. Although current models demonstrate a rich understanding of spatial information from images, this information is rooted in propositional representations, which differ from the analog representations employed in human and animal spatial cognition. To further explore these limitations, we apply techniques from cognitive and developmental science to assess the perspective-taking abilities of GPT-4o. Our analysis enables a comparison between the cognitive development of the human brain and that of multimodal AI, offering guidance for future research and model development.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge