Face Transformer for Recognition

Apr 13, 2021

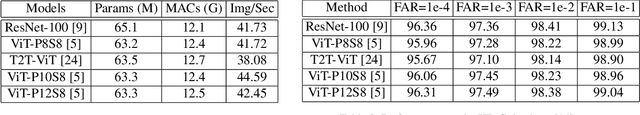

Recently there has been growing interest in Transformers, not only in NLP but also in computer vision. We ask whether Transformers can be used for face recognition and whether they outperform CNNs, and we therefore investigate the performance of Transformer models on this task. Because the original Transformer may neglect inter-patch information, we modify the patch generation process and build the tokens from sliding patches that overlap with one another. The models are trained on the CASIA-WebFace and MS-Celeb-1M databases and evaluated on several mainstream benchmarks, including LFW, SLLFW, CALFW, CPLFW, TALFW, CFP-FP, AGEDB, and IJB-C. We demonstrate that Face Transformer models trained on the large-scale MS-Celeb-1M database achieve performance comparable to that of CNNs with a similar number of parameters and MACs. To facilitate further research, Face Transformer models and code are available at https://github.com/zhongyy/Face-Transformer.
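
The idea of sliding, overlapping patches can be illustrated with a minimal sketch. This is not the released implementation: the function name `sliding_patches`, the toy 6x6 image, and the patch sizes and strides are assumptions chosen only for illustration. A stride smaller than the patch size makes neighbouring patches share pixels, whereas a stride equal to the patch size gives the usual non-overlapping split.

```python
def sliding_patches(image, patch_size, stride):
    """Split a 2D image (a list of rows) into flattened square patches.

    Hypothetical helper for illustration only. With stride < patch_size,
    neighbouring patches overlap; with stride == patch_size, the patches
    are disjoint.
    """
    height, width = len(image), len(image[0])
    patches = []
    # Slide the window top-to-bottom, left-to-right.
    for top in range(0, height - patch_size + 1, stride):
        for left in range(0, width - patch_size + 1, stride):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# Toy 6x6 "image" whose pixel values are all distinct.
image = [[r * 6 + c for c in range(6)] for r in range(6)]

# Overlapping tokens: 4x4 patches moved by 2 pixels.
overlapping = sliding_patches(image, patch_size=4, stride=2)

# Non-overlapping tokens: 3x3 patches moved by 3 pixels.
disjoint = sliding_patches(image, patch_size=3, stride=3)

# Pixels shared by the first two patches in each setting.
shared_overlapping = set(overlapping[0]) & set(overlapping[1])
shared_disjoint = set(disjoint[0]) & set(disjoint[1])
```

In this sketch each flattened patch would then be linearly projected to a token embedding, as in a standard vision Transformer; the overlap means that pixels near a patch border also appear in the adjacent token.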
