Extended Radial Basis Function Controller for Reinforcement Learning

Paper and Code

Sep 12, 2020

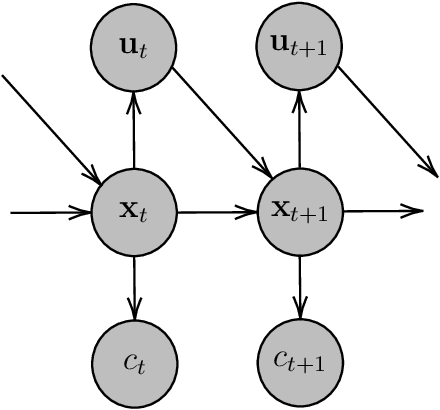

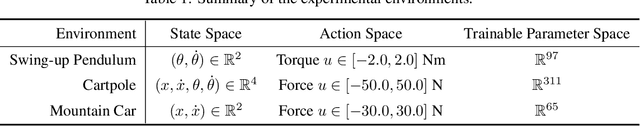

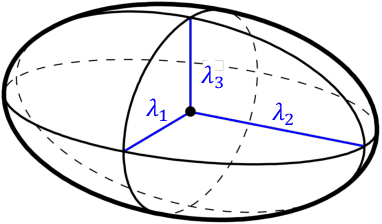

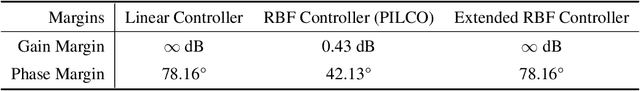

There have been attempts in model-based reinforcement learning to exploit a priori knowledge about the structure of the system. This paper introduces the extended radial basis function (RBF) controller design. In addition to the traditional RBF controller, our controller comprises an engineered linear controller that acts inside an operating region. We show that the learnt extended RBF controller takes on the desirable characteristics of both the linear and non-linear controller models. The extended controller is shown to retain the universal function approximation ability of the non-linear RBF functions. At the same time, it demonstrates stability properties on par with the linear controller. Learning was performed in a probabilistic inference framework (PILCO), but the approach could generalise to other reinforcement learning frameworks. Experimental results from the swing-up pendulum, cartpole, and mountain car environments are reported.
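To make the controller structure described in the abstract concrete, here is a minimal sketch of an RBF controller extended with a linear feedback term inside an operating region. The abstract does not state how the two parts are combined, so everything specific here is an assumption for illustration: the Gaussian basis functions, the hard distance-based gate, and all names (`rbf_term`, `linear_term`, `in_operating_region`, `extended_rbf_controller`, `gains`, `radius`) are hypothetical and not taken from the paper.

```python
import math


def rbf_term(x, centers, weights, length_scales):
    """Weighted sum of Gaussian radial basis functions evaluated at state x."""
    total = 0.0
    for center, weight in zip(centers, weights):
        # Squared distance to the center, scaled per state dimension.
        sq_dist = sum(
            ((xi - ci) / scale) ** 2
            for xi, ci, scale in zip(x, center, length_scales)
        )
        total += weight * math.exp(-0.5 * sq_dist)
    return total


def linear_term(x, gains, x_ref):
    """Linear state feedback u = -K (x - x_ref) with hand-chosen gains K."""
    return -sum(k * (xi - ri) for k, xi, ri in zip(gains, x, x_ref))


def in_operating_region(x, x_ref, radius):
    """Assumed operating region: a Euclidean ball of given radius around x_ref."""
    dist = math.sqrt(sum((xi - ri) ** 2 for xi, ri in zip(x, x_ref)))
    return dist <= radius


def extended_rbf_controller(x, centers, weights, length_scales,
                            gains, x_ref, radius):
    """RBF output everywhere, plus the linear term inside the operating region.

    The hard on/off gate is an assumption of this sketch, not the paper's rule.
    """
    u = rbf_term(x, centers, weights, length_scales)
    if in_operating_region(x, x_ref, radius):
        u += linear_term(x, gains, x_ref)
    return u


# Hypothetical two-dimensional example (e.g. angle and angular velocity).
params = dict(
    centers=[[1.0, 0.0]],
    weights=[2.0],
    length_scales=[1.0, 1.0],
    gains=[3.0, 1.0],
    x_ref=[0.0, 0.0],
    radius=0.5,
)
u_far = extended_rbf_controller([1.0, 0.0], **params)   # outside the region
u_near = extended_rbf_controller([0.1, 0.0], **params)  # inside the region
```

In this sketch only the RBF weights, centers, and length scales would be learnt, while `gains` stay fixed by design. A smooth gate could replace the hard switch if a differentiable policy is needed for gradient-based policy search.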
