Exploring the Relationship Between Feature Attribution Methods and Model Performance


May 22, 2024

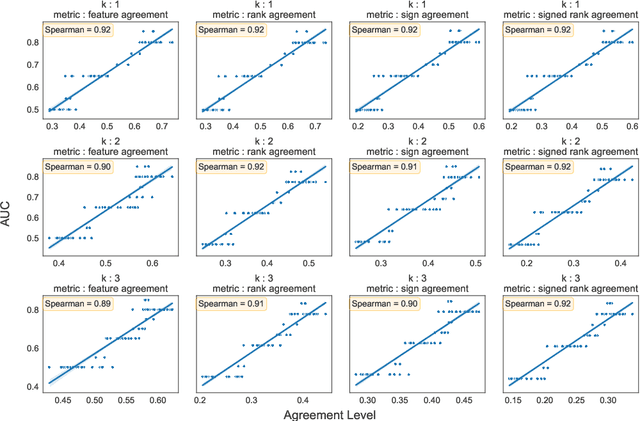

Machine learning and deep learning models play a pivotal role in education, particularly in predicting student success. Despite their widespread use, there is still a significant gap in understanding the factors that drive these models' predictions, and this gap is especially pronounced for explainability in educational settings. This work addresses the gap by applying nine distinct explanation methods and analyzing how the agreement among the explanations they produce correlates with the predictive model's performance. Using Spearman's rank correlation, we find a very strong correlation between the model's performance and the level of agreement among the explanation methods.
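The abstract does not say how agreement is measured or which nine explanation methods are used. A minimal sketch, assuming each method returns one attribution vector per model and that agreement is measured with top-k feature agreement (the fraction of the k most important features, by absolute attribution, that two methods share), could look like this. The function names, the choice of `k`, and all numbers below are hypothetical and for illustration only; Spearman's rho is computed as the Pearson correlation of average ranks, which is its standard definition when ties are present.

```python
from itertools import combinations
from statistics import mean


def top_k_features(attributions, k):
    """Indices of the k features with the largest absolute attribution."""
    order = sorted(range(len(attributions)), key=lambda i: abs(attributions[i]), reverse=True)
    return set(order[:k])


def feature_agreement(a, b, k):
    """Fraction (0.0 to 1.0) of top-k features shared by two attribution vectors."""
    return len(top_k_features(a, k) & top_k_features(b, k)) / k


def mean_pairwise_agreement(explanations, k):
    """Average top-k agreement over every pair of explanation methods for one model."""
    return mean(feature_agreement(a, b, k) for a, b in combinations(explanations, 2))


def rank(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for idx in order[i:j + 1]:
            ranks[idx] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    rx, ry = rank(x), rank(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx)
    sy = sum((b - my) ** 2 for b in ry)
    if sx == 0 or sy == 0:
        raise ValueError("Spearman's rho is undefined for a constant input")
    return cov / (sx * sy) ** 0.5


# Toy data: three hypothetical models, each explained by three methods over four features.
toy_models = {
    "model_a": [[0.9, 0.5, 0.1, 0.0], [0.8, 0.6, 0.05, 0.1], [0.7, 0.4, 0.2, 0.0]],
    "model_b": [[0.9, 0.5, 0.1, 0.0], [0.8, 0.1, 0.6, 0.0], [0.7, 0.4, 0.2, 0.0]],
    "model_c": [[0.9, 0.5, 0.1, 0.0], [0.0, 0.1, 0.6, 0.8], [0.1, 0.0, 0.7, 0.4]],
}
toy_performance = {"model_a": 0.91, "model_b": 0.84, "model_c": 0.72}  # made-up scores

agreement = [mean_pairwise_agreement(toy_models[m], k=2) for m in toy_models]
performance = [toy_performance[m] for m in toy_models]
print([round(a, 3) for a in agreement])  # [1.0, 0.667, 0.333]
print(spearman(agreement, performance))  # 1.0
```

In this toy setup, the models whose explanation methods agree more also score higher, so the rank correlation is perfect. With real data, each point would be one trained model, and `scipy.stats.spearmanr` could replace the hand-written `spearman` helper.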

* AAAI 2024 Workshop on AI for Education - Bridging Innovation and Responsibility
