Exploring Feature-based Knowledge Distillation For Recommender System: A Frequency Perspective

Paper and Code

Nov 16, 2024

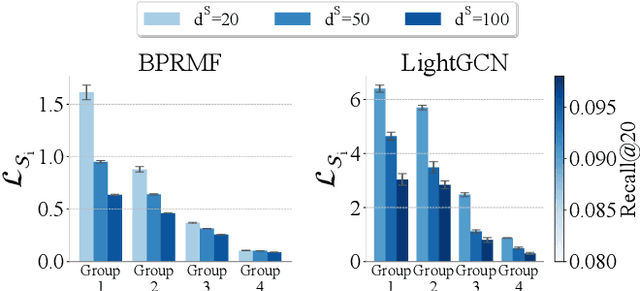

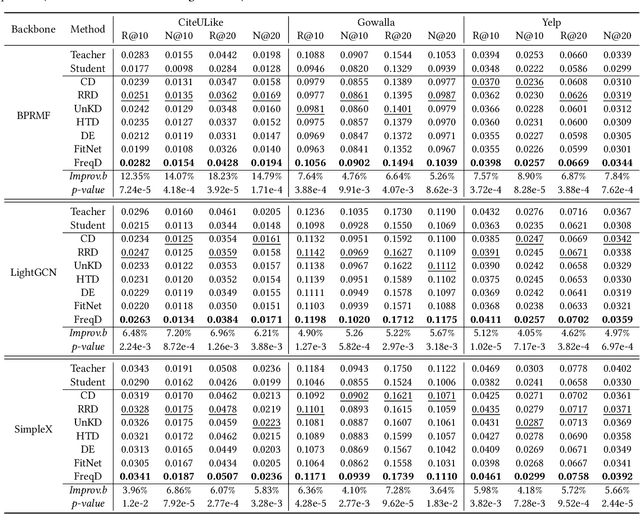

In this paper, we analyze feature-based knowledge distillation for recommendation from a frequency perspective. By defining knowledge as the different frequency components of the features, we theoretically demonstrate that regular feature-based knowledge distillation is equivalent to minimizing the losses on all knowledge components with equal weight, and we further analyze how this equal weight allocation causes important knowledge to be overlooked. In light of this, we propose to emphasize important knowledge by redistributing the knowledge weights. Furthermore, we propose FreqD, a lightweight knowledge reweighting method that avoids the computational cost of calculating a separate loss on each knowledge component. Extensive experiments demonstrate that FreqD consistently and significantly outperforms state-of-the-art knowledge distillation methods for recommender systems. Our code is available at https://anonymous.4open.science/r/FreqKD/
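
The equal-weighting claim can be illustrated with a small numerical sketch. This is not the FreqD method and is not taken from the paper's code; it only assumes that "frequency components" are projections onto some orthonormal basis. Here the basis is the DCT-II basis (the eigenvectors of a path-graph Laplacian), chosen purely for illustration, and the student features are assumed to be already projected to the teacher's dimension. The function names and the emphasis weights are hypothetical.

```python
import math
import random


def dct_basis(n):
    """Orthonormal DCT-II basis; row k is the k-th frequency vector (assumed basis)."""
    basis = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        basis.append([scale * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n)])
    return basis


def component_losses(teacher, student, basis):
    """Squared error of the teacher-student gap projected onto each frequency vector."""
    n, d = len(teacher), len(teacher[0])
    losses = []
    for u in basis:
        loss = 0.0
        for j in range(d):
            proj = sum(u[i] * (teacher[i][j] - student[i][j]) for i in range(n))
            loss += proj * proj
        losses.append(loss)
    return losses


def feature_kd_loss(teacher, student):
    """Plain feature-based distillation loss: summed squared error over all entries."""
    return sum((t - s) ** 2 for tr, sr in zip(teacher, student) for t, s in zip(tr, sr))


def reweighted_loss(losses, weights):
    """Weighted sum of per-component losses."""
    return sum(w * l for w, l in zip(weights, losses))


random.seed(0)
n, d = 8, 4  # 8 entities (rows), 4 feature dimensions; toy sizes
teacher = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
student = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]

losses = component_losses(teacher, student, dct_basis(n))
plain = feature_kd_loss(teacher, student)
equal = reweighted_loss(losses, [1.0] * n)

# Hypothetical emphasis: which components count as "important" is an assumption here.
weights = [2.0 if k < n // 2 else 0.5 for k in range(n)]
emphasized = reweighted_loss(losses, weights)

print(abs(plain - equal) < 1e-9)  # plain loss matches the equal-weight sum
```

Because the basis is orthonormal, the plain loss and the equal-weight sum coincide up to floating-point error (Parseval's identity), which is the sense in which regular distillation treats every component alike. Changing `weights` shifts the emphasis, at the cost of one projection per component; that per-component cost is what the abstract says FreqD is designed to avoid.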
