Exploring Emotions in Multi-componential Space using Interactive VR Games

Paper and Code

Apr 04, 2024

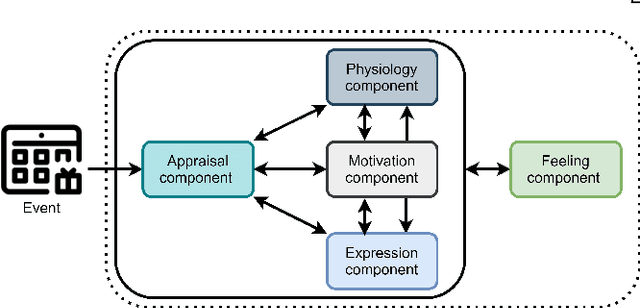

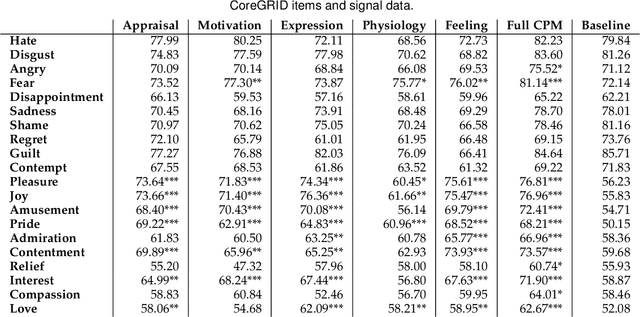

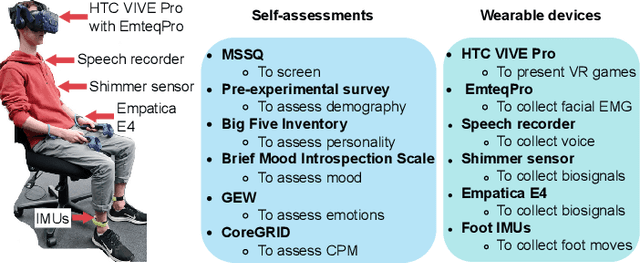

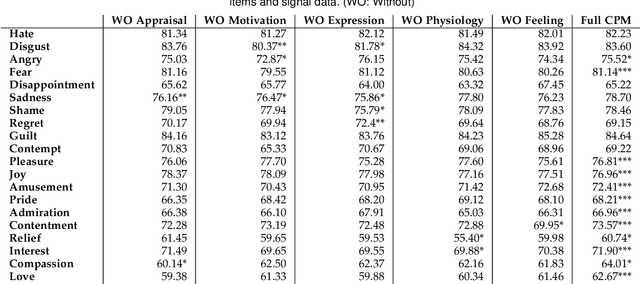

Emotion understanding is a complex process that involves multiple components. The ability to recognise emotions not only enables new context-awareness methods but also makes system interaction more effective, since systems can perceive and express emotions. Although discrete and dimensional models have received most of the attention, neuroscientific evidence supports the view that emotions are complex and multi-faceted. One framework that resonates with these findings is the Component Process Model (CPM), a theory that captures the complexity of emotions through five interconnected components: appraisal, expression, motivation, physiology and feeling. However, the relationship between the CPM and discrete emotions has not yet been fully explored. Therefore, to better understand the processes underlying emotions, we operationalised a data-driven approach using interactive Virtual Reality (VR) games and collected multimodal measures (self-reports, physiological signals and facial signals) from 39 participants. We used Machine Learning (ML) methods to identify the unique contribution of each component to emotion differentiation. Our results showed that different components play a role in emotion differentiation, with the model that included all components making the most significant contribution. Moreover, we found that at least five dimensions are needed to represent the variation of emotions in our dataset. These findings also have implications for the use of VR environments in emotion research and highlight the role of physiological signals in emotion recognition within such environments.
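As a rough illustration of what "at least five dimensions are needed" can mean in practice, the sketch below counts how many principal components are required to reach a chosen share of the variance. This is only an assumption about the kind of analysis involved: the abstract does not state which dimensionality method was used, and the function name `n_components_for_variance`, the variance threshold and the synthetic data are all hypothetical and are not taken from the paper.

```python
import numpy as np

def n_components_for_variance(X, threshold=0.9):
    """Smallest number of principal components whose cumulative
    explained-variance ratio reaches `threshold` (hypothetical helper)."""
    Xc = X - X.mean(axis=0)                   # centre each feature
    s = np.linalg.svd(Xc, compute_uv=False)   # singular values, descending
    var = s ** 2                              # proportional to component variances
    ratio = np.cumsum(var) / var.sum()        # cumulative explained variance
    # index of the first component at which the threshold is reached, plus one
    return int(np.searchsorted(ratio, threshold) + 1)

# Synthetic stand-in data (NOT the study's data): 200 observations of
# 12 features generated from 5 latent factors plus a little noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 5))
loadings = rng.normal(size=(5, 12))
X = latent @ loadings + 0.01 * rng.normal(size=(200, 12))

k = n_components_for_variance(X, threshold=0.99)
print(f"components needed for 99% of the variance: {k}")
```

Because the synthetic data are built from five latent factors, the count cannot exceed five here; on real multimodal measures the result would depend on the features, the preprocessing and the threshold chosen.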
