Exploration on Grounded Word Embedding: Matching Words and Images with Image-Enhanced Skip-Gram Model


Sep 08, 2018

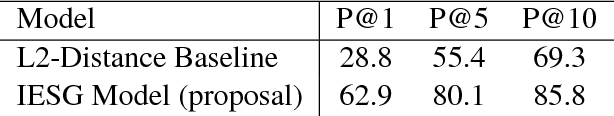

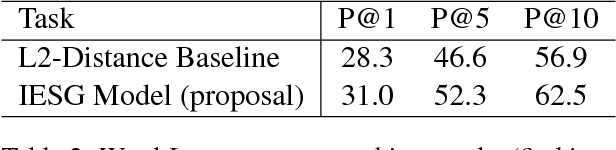

Word embeddings are designed to represent the semantic meaning of a word as a low-dimensional vector. State-of-the-art methods for learning word embeddings (word2vec and GloVe) use only word co-occurrence information. The learned embeddings are real-valued vectors, which are opaque to humans. In this paper, we propose an Image-Enhanced Skip-Gram Model that learns grounded word embeddings by representing word vectors in the same hyperplane as image vectors. Experiments show that the image vectors and word embeddings learned by our model are highly correlated, which indicates that our model can provide a vivid image-based explanation of the word embeddings.
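The abstract does not state the model's objective, so the following is only a minimal sketch of how a skip-gram loss might be extended with an image term. It assumes negative sampling for the text part and an image vector with the same dimension as the word vector; the function names, the `weight` parameter, and the form of the grounding term are all hypothetical and are not taken from the paper.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def skipgram_ns_loss(center, context, negatives):
    """Skip-gram negative-sampling loss for one (center, context) pair."""
    loss = -log_sigmoid(dot(center, context))
    for neg in negatives:
        # Negative samples are pushed away from the center word.
        loss -= log_sigmoid(-dot(center, neg))
    return loss


def image_enhanced_loss(center, context, negatives, image_vec, weight=1.0):
    """Hypothetical combined objective (an assumption, not the paper's):
    the text loss plus a term rewarding agreement between the word
    vector and an image vector that lives in the same space."""
    grounding = -log_sigmoid(dot(center, image_vec))
    return skipgram_ns_loss(center, context, negatives) + weight * grounding


if __name__ == "__main__":
    word = [1.0, 0.0]
    ctx = [1.0, 0.0]
    negs = [[-1.0, 0.0]]
    print(image_enhanced_loss(word, ctx, negs, image_vec=[1.0, 0.0]))
    print(image_enhanced_loss(word, ctx, negs, image_vec=[-1.0, 0.0]))
```

Under these assumptions, an image vector that points in the same direction as the word vector yields a lower loss than one pointing away from it, which is one simple way to encourage the word-image correlation the abstract reports.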
