Explaining Local, Global, And Higher-Order Interactions In Deep Learning

Paper and Code

Jun 12, 2020

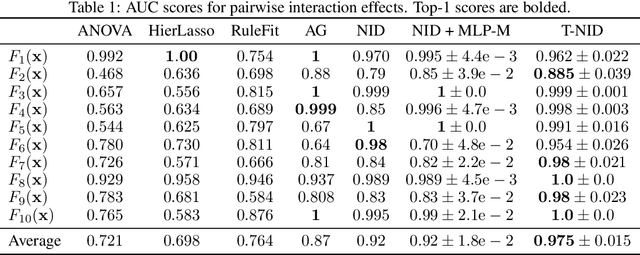

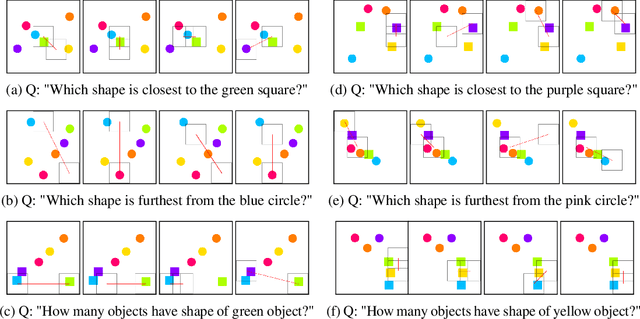

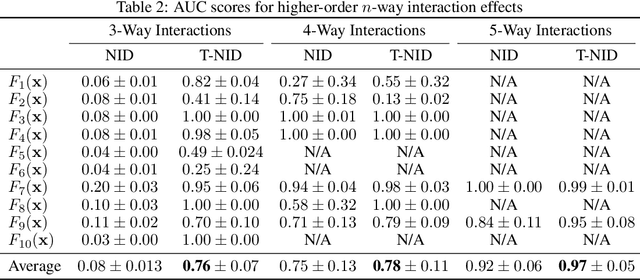

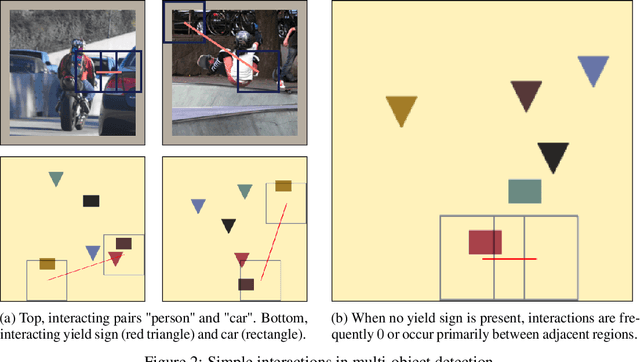

We present a simple yet highly generalizable method for explaining interacting parts within a neural network's reasoning process. We consider local, global, and higher-order statistical interactions. Generally speaking, local interactions occur between features within an individual datapoint, while global interactions hold as universal features across the whole dataset. Using deep learning combined with some heuristics for tractability, we achieve state-of-the-art measurement of global statistical interaction effects, including at higher orders (3-way interactions or more). We generalize this to the multidimensional setting to explain local interactions in multi-object detection and relational reasoning, using the COCO annotated-image dataset and the Sort-Of-CLEVR toy dataset, respectively. In this setting, we introduce a new task for testing feature vector interactions, conduct a human study, propose a novel metric for relational reasoning, and use our interaction interpretations to design a more effective Relation Network. Finally, we apply these techniques to a real-world biomedical dataset to discover the higher-order interactions underlying Parkinson's disease clinical progression. Fully reproducible code for all experiments is available at: https://github.com/slerman12/ExplainingInteractions.
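To make the local versus global distinction concrete, here is a minimal sketch. It is not the method proposed in the paper. It uses a generic textbook notion of a pairwise statistical interaction (a mixed finite difference against a baseline value), and every name in it (`toy_model`, `local_interaction`, `global_interaction`, the zero baseline, the three sample datapoints) is a hypothetical choice made for illustration only.

```python
from itertools import combinations
from statistics import mean


def toy_model(x):
    # Hypothetical stand-in for a trained network:
    # features 0 and 1 interact multiplicatively, feature 2 is purely additive.
    return 2.0 * x[0] * x[1] + 3.0 * x[2]


def local_interaction(f, x, i, j, baseline=0.0):
    """Mixed finite difference of f at one datapoint x for features i and j.

    The result is zero whenever f is additive in features i and j.
    The baseline value is an assumption of this sketch.
    """
    def masked(*indices):
        z = list(x)
        for k in indices:
            z[k] = baseline
        return f(z)

    return f(list(x)) - masked(i) - masked(j) + masked(i, j)


def global_interaction(f, data, i, j):
    """Average magnitude of the local interaction over the whole dataset."""
    return mean(abs(local_interaction(f, x, i, j)) for x in data)


# Made-up datapoints, used only to exercise the functions above.
data = [[1.0, 2.0, 0.5], [0.5, -1.0, 2.0], [2.0, 1.0, -1.0]]

scores = {
    pair: global_interaction(toy_model, data, *pair)
    for pair in combinations(range(3), 2)
}

for pair, score in scores.items():
    print(pair, score)
```

In this toy setup only the pair (0, 1) receives a nonzero score, since it is the only pair the model combines non-additively. A 3-way or higher-order analogue would extend the same inclusion-exclusion pattern to more features, which is where tractability heuristics of the kind the abstract mentions become necessary.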
