Explainable Machine Learning for Public Policy: Use Cases, Gaps, and Research Directions

Oct 27, 2020

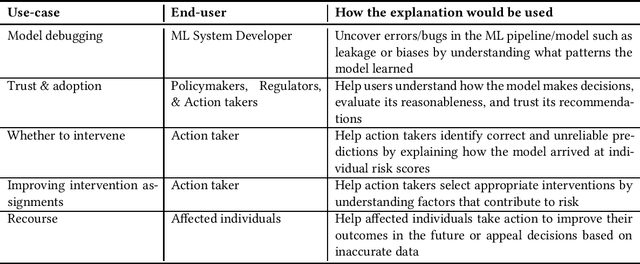

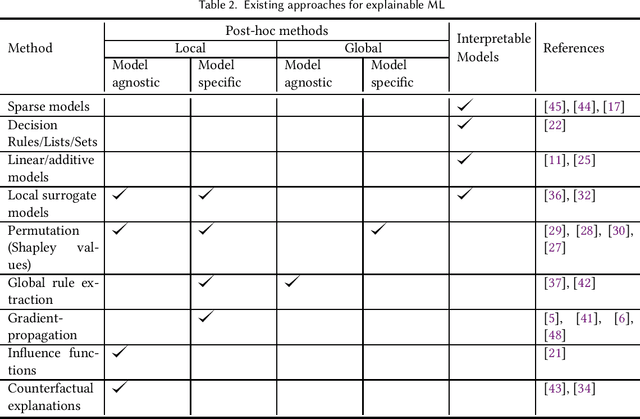

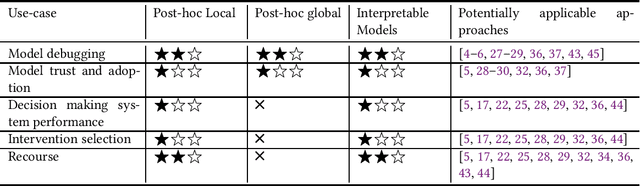

For Machine Learning (ML) models used to support decisions in high-stakes domains such as public policy, explainability is crucial for adoption and effectiveness. While the field of explainable ML has expanded in recent years, much of this work does not take real-world needs into account. Most proposed methods rely on benchmark ML problems with generic explainability goals, without clear use-cases or intended end-users. As a result, it is unclear how effective this large body of theoretical and methodological work is in real-world applications. This paper focuses on filling this void for the domain of public policy. First, we develop a taxonomy of explainability use-cases within public policy problems; second, for each use-case, we define the end-users of explanations and the specific goals explainability has to fulfill; third, we map existing work to these use-cases, identify gaps, and propose research directions to fill those gaps so that ML can have practical policy impact.