Explainable Artificial Intelligence Recommendation System by Leveraging the Semantics of Adverse Childhood Experiences: Proof-of-Concept Prototype Development

Nov 06, 2020

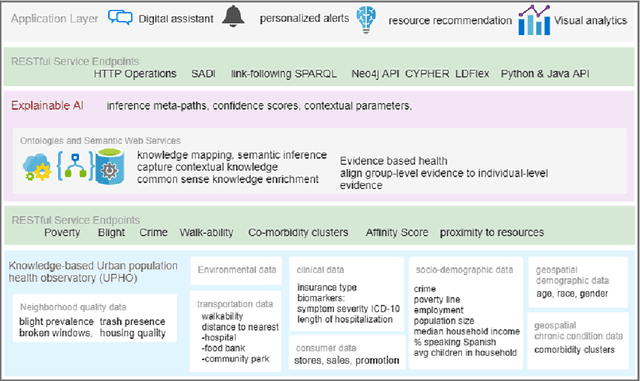

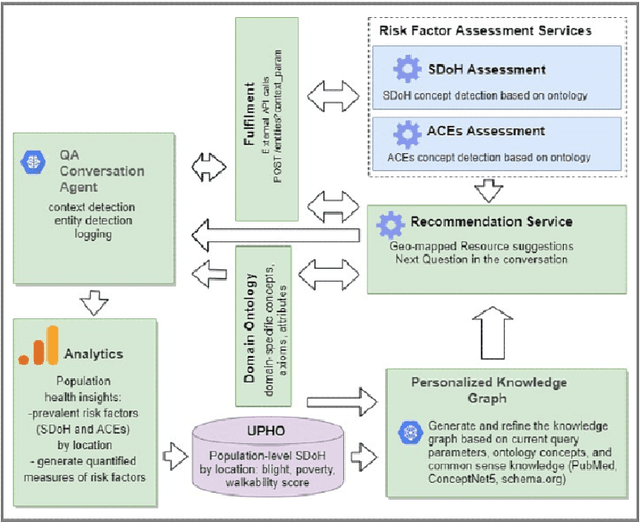

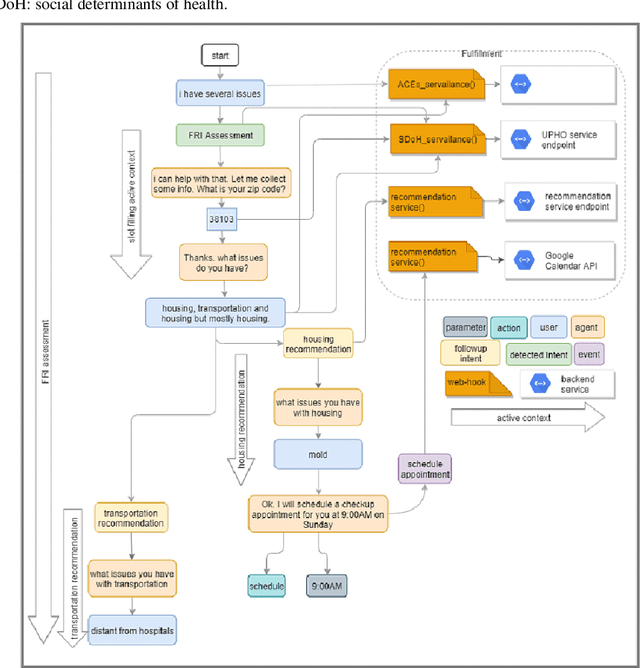

The study of adverse childhood experiences and their consequences has emerged over the past 20 years. In this study, we aimed to leverage explainable artificial intelligence and propose a proof-of-concept prototype for a knowledge-driven, evidence-based recommendation system to improve surveillance of adverse childhood experiences. We used concepts from an ontology that we have developed to build and train a question-answering agent using the Google DialogFlow engine. In addition to the question-answering agent, the initial prototype includes knowledge graph generation and recommendation components that leverage third-party graph technology. To showcase the framework's functionalities, we present a prototype design and demonstrate the main features through four use case scenarios motivated by an initiative currently implemented at a children's hospital in Memphis, Tennessee. Ongoing development of the prototype requires implementing an optimization algorithm for the recommendations, incorporating a privacy layer through a personal health library, and conducting a clinical trial to assess both the usability and the usefulness of the implementation. This semantic-driven explainable artificial intelligence prototype can enhance health care practitioners' ability to provide explanations for the decisions they make.
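As a rough illustration of how a knowledge-graph-backed recommendation can carry its own explanation, the sketch below stores a few triples and returns each recommendation together with the graph edge that justifies it. It is a minimal sketch under stated assumptions, not the prototype's implementation: the concept names, the `addressed_by` predicate, and the `build_graph` and `recommend` helpers are all hypothetical and are not taken from the authors' ontology or from any third-party graph product.

```python
# Hypothetical (subject, predicate, object) triples for illustration only;
# these are placeholders, not concepts from the authors' ontology.
TRIPLES = [
    ("food_insecurity", "is_a", "adverse_childhood_experience"),
    ("food_insecurity", "addressed_by", "food_bank_referral"),
    ("housing_instability", "is_a", "adverse_childhood_experience"),
    ("housing_instability", "addressed_by", "housing_assistance_referral"),
]


def build_graph(triples):
    """Index triples by subject so outgoing edges can be looked up directly."""
    graph = {}
    for subject, predicate, obj in triples:
        graph.setdefault(subject, []).append((predicate, obj))
    return graph


def recommend(graph, concept):
    """Return (recommendation, explanation) pairs for a concept.

    The explanation is the graph edge that produced the recommendation,
    so every suggestion can be traced back to an explicit statement.
    """
    results = []
    for predicate, obj in graph.get(concept, []):
        if predicate == "addressed_by":
            results.append((obj, f"{concept} --{predicate}--> {obj}"))
    return results


if __name__ == "__main__":
    graph = build_graph(TRIPLES)
    for item, why in recommend(graph, "food_insecurity"):
        print(f"Recommend: {item} (because {why})")
```

In a fuller system, the concept passed to `recommend` would presumably come from the question-answering agent's interpretation of a user's answer, and the edges would come from the ontology rather than a hard-coded list.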