Event Camera Simulator Design for Modeling Attention-based Inference Architectures


May 03, 2021

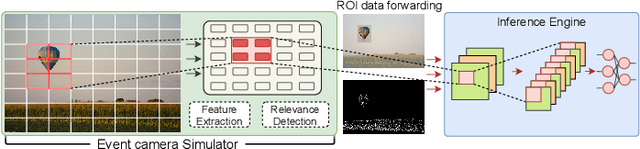

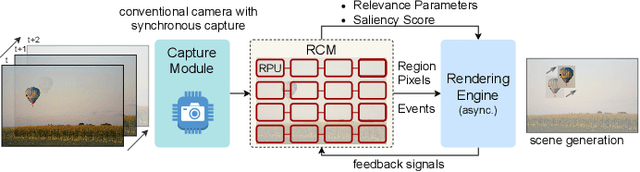

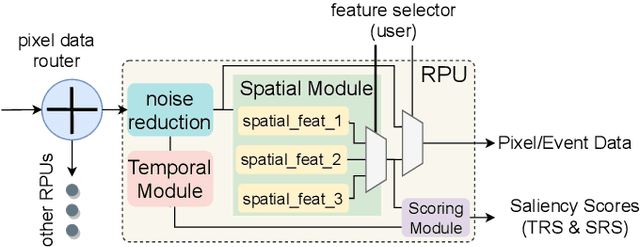

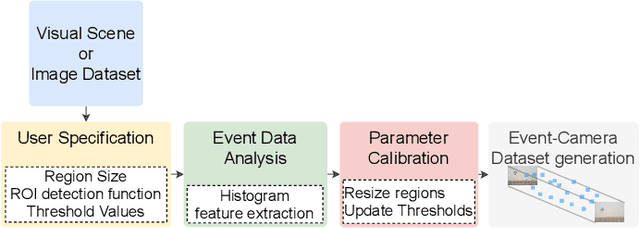

In recent years, there has been growing interest in integrating more computation at the level of the image sensor. This trend has driven research into novel event cameras that can facilitate CNN computation directly in the sensor. However, event-based cameras are not generally available on the market, which limits performance exploration of high-level models and algorithms. This paper presents an event camera simulator intended as a tool for hardware design prototyping, parameter optimization, attention-based algorithm development, and benchmarking. The simulator implements a distributed computation model to identify relevant regions in an image frame. This relevance computation model is realized as a collection of modules that perform their computations in parallel, and it is configurable, which makes it useful for design space exploration. The rendering engine of the simulator samples frame regions only when there is a new event. The simulator closely emulates an image processing pipeline similar to that of physical cameras. Our experimental results show that the simulator can effectively emulate event vision with low overhead.
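The abstract does not describe the simulator's internals, so the following is only a minimal sketch of the general idea: a frame is split into regions, one relevance module per region runs in parallel, and the rendering step resamples a region only when that region reports an event. The tile size, the threshold, the mean-absolute-difference relevance metric, and all function names (`tile_relevance`, `detect_events`, `render`) are assumptions made for illustration, not the paper's implementation.

```python
from concurrent.futures import ThreadPoolExecutor


def tile_relevance(prev, curr, r0, c0, size):
    """Mean absolute intensity change inside one tile (assumed relevance metric)."""
    total = 0
    count = 0
    for r in range(r0, min(r0 + size, len(curr))):
        for c in range(c0, min(c0 + size, len(curr[0]))):
            total += abs(curr[r][c] - prev[r][c])
            count += 1
    return total / count


def detect_events(prev, curr, size=2, threshold=10.0):
    """Run one relevance module per tile in parallel; return origins of tiles with an event."""
    origins = [(r, c)
               for r in range(0, len(curr), size)
               for c in range(0, len(curr[0]), size)]
    with ThreadPoolExecutor() as pool:
        scores = list(pool.map(
            lambda o: tile_relevance(prev, curr, o[0], o[1], size), origins))
    return [o for o, s in zip(origins, scores) if s > threshold]


def render(reference, curr, events, size=2):
    """Copy pixels from the current frame only for tiles that reported an event."""
    out = [row[:] for row in reference]
    for r0, c0 in events:
        for r in range(r0, min(r0 + size, len(curr))):
            for c in range(c0, min(c0 + size, len(curr[0]))):
                out[r][c] = curr[r][c]
    return out
```

Calling `detect_events(prev, curr)` on two frames stored as lists of rows returns the tile origins whose change exceeds the threshold, and `render(prev, curr, events)` updates only those tiles. Varying `size` and `threshold` is a simple stand-in for the kind of configuration the abstract associates with design space exploration.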
