Evaluating Prompts Across Multiple Choice Tasks In a Zero-Shot Setting

Mar 29, 2022

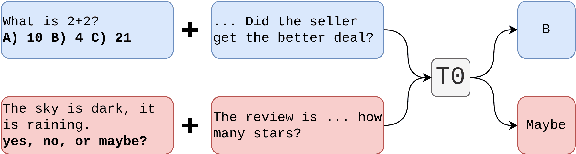

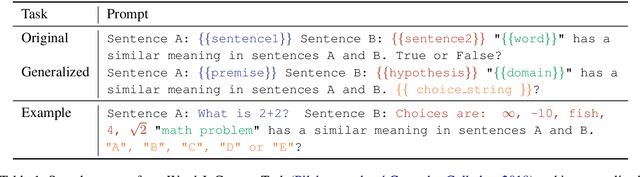

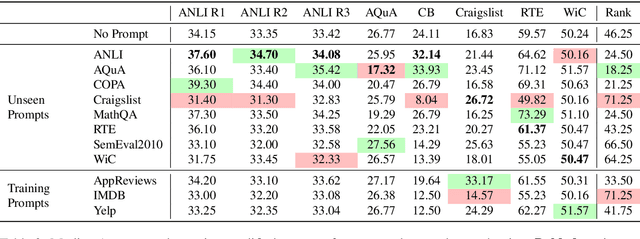

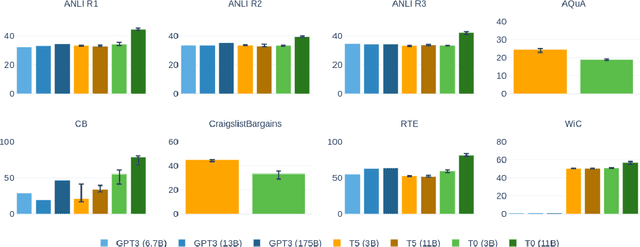

Large language models have shown that impressive zero-shot performance can be achieved through natural language prompts (Radford et al., 2019; Brown et al., 2020; Sanh et al., 2021). Creating an effective prompt, however, requires significant trial and error. That *prompts* the question: how do the qualities of a prompt affect its performance? To this end, we collect and standardize prompts from a diverse range of tasks for use with tasks they were not designed for. We then evaluate these prompts across fixed multiple choice datasets for a quantitative analysis of how certain attributes of a prompt affect performance. We find that including the choices and using prompts not used during pre-training provide significant improvements. All experiments and code can be found at https://github.com/gabeorlanski/zero-shot-cross-task.
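As a rough illustration of the evaluation setup described above, the sketch below renders a question with or without its answer choices and measures accuracy by picking the highest-scoring choice. It is a minimal sketch under stated assumptions, not the code from the linked repository: the template wording, the function names (`build_prompt`, `predict`, `accuracy`), and the `score` callable are all hypothetical, and in practice `score` would be backed by a language model rather than the toy stand-in used here.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical scorer signature: (prompt, candidate answer) -> score, higher is better.
# In a real setup this would come from a language model; here it is an assumption.
Scorer = Callable[[str, str], float]
Example = Tuple[str, Sequence[str], int]  # (question, choices, index of correct choice)


def build_prompt(question: str, choices: Sequence[str], include_choices: bool) -> str:
    """Render an illustrative template, optionally listing the answer choices."""
    if include_choices:
        options = " ".join(
            f"({chr(ord('A') + i)}) {choice}" for i, choice in enumerate(choices)
        )
        return f"{question}\nChoices: {options}\nAnswer:"
    return f"{question}\nAnswer:"


def predict(prompt: str, choices: Sequence[str], score: Scorer) -> int:
    """Return the index of the choice with the highest score (first one on ties)."""
    scores = [score(prompt, choice) for choice in choices]
    return max(range(len(choices)), key=scores.__getitem__)


def accuracy(examples: Sequence[Example], include_choices: bool, score: Scorer) -> float:
    """Fraction of examples where the top-scoring choice matches the label."""
    correct = 0
    for question, choices, label in examples:
        prompt = build_prompt(question, choices, include_choices)
        correct += int(predict(prompt, choices, score) == label)
    return correct / len(examples)


def toy_score(prompt: str, choice: str) -> float:
    """Placeholder scorer that simply prefers longer choices; not a real model."""
    return float(len(choice))


toy_examples = [
    ("Q1", ["a", "bbb"], 1),
    ("Q2", ["cc", "d"], 1),
]
toy_accuracy = accuracy(toy_examples, include_choices=True, score=toy_score)
```

Running the same `accuracy` call with `include_choices=False`, or with a different template, gives the kind of per-attribute comparison the abstract describes. The numbers from the toy scorer carry no meaning about real models.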
