Evaluating Neighbor Explainability for Graph Neural Networks


Nov 14, 2023

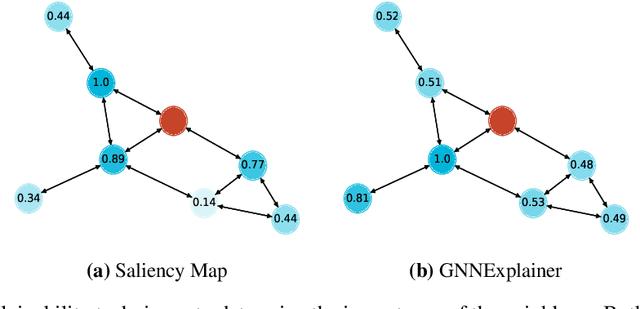

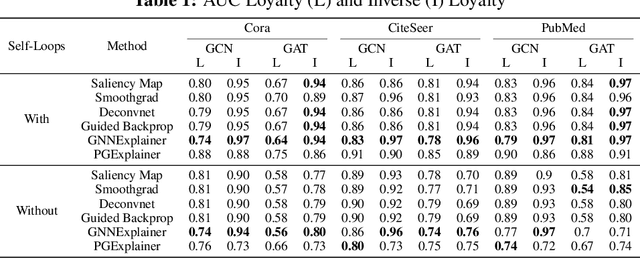

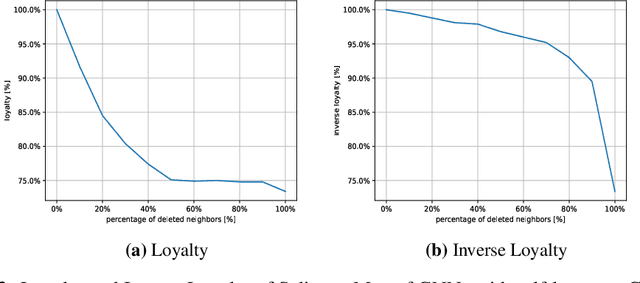

Explainability in Graph Neural Networks (GNNs) is a young field that has grown over the last few years. In this publication, we address the problem of determining how important each neighbor is to the GNN when classifying a node, and how to measure performance on this specific task. To do this, we reformulate several known explainability methods to obtain neighbor importance and present four new metrics. Our results show almost no difference between the explanations provided by gradient-based techniques in the GNN domain. In addition, many explainability techniques fail to identify important neighbors when GNNs without self-loops are used.
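To make the idea of neighbor importance concrete, the following is a minimal sketch of a saliency-style (gradient-based) score on a toy model. It is not the paper's formulation: the one-layer mean-aggregation model, the finite-difference gradient, the L1 aggregation over feature dimensions, and all function names (`normalized_adjacency`, `logit`, `saliency_neighbor_importance`) are assumptions chosen for illustration. The `self_loops` flag only lets the two graph settings mentioned in the abstract be compared side by side.

```python
import math


def normalized_adjacency(edges, num_nodes, self_loops=True):
    """Dense row-normalized adjacency (mean aggregation) for an undirected graph."""
    adj = [[0.0] * num_nodes for _ in range(num_nodes)]
    for u, v in edges:
        adj[u][v] = 1.0
        adj[v][u] = 1.0
    if self_loops:
        for i in range(num_nodes):
            adj[i][i] = 1.0
    for row in adj:
        degree = sum(row)
        if degree > 0:
            for j in range(num_nodes):
                row[j] /= degree
    return adj


def logit(adj, features, weights, target):
    """Toy one-layer GNN (an assumption): tanh(w . mean of neighbor features)."""
    aggregated = [
        sum(adj[target][u] * features[u][k] for u in range(len(features)))
        for k in range(len(weights))
    ]
    return math.tanh(sum(a * w for a, w in zip(aggregated, weights)))


def saliency_neighbor_importance(adj, features, weights, target, eps=1e-6):
    """Score each node by the L1 norm of d(logit of target) / d(its features).

    Gradients are approximated with central finite differences so the
    sketch needs no autodiff library.
    """
    importance = []
    for u in range(len(features)):
        total = 0.0
        for k in range(len(weights)):
            original = features[u][k]
            features[u][k] = original + eps
            up = logit(adj, features, weights, target)
            features[u][k] = original - eps
            down = logit(adj, features, weights, target)
            features[u][k] = original  # restore the perturbed entry
            total += abs((up - down) / (2 * eps))
        importance.append(total)
    return importance


# Hypothetical 4-node graph: node 0 is the target, node 3 is two hops away.
edges = [(0, 1), (0, 2), (2, 3)]
features = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2], [0.9, 0.9]]
weights = [0.7, -0.3]

for use_loops in (True, False):
    adj = normalized_adjacency(edges, 4, self_loops=use_loops)
    scores = saliency_neighbor_importance(adj, features, weights, target=0)
    print("self_loops =", use_loops, [round(s, 4) for s in scores])
```

Because this toy model has a single layer, only the target's direct neighbors (and the target itself, when self-loops are present) can receive a non-zero score; a deeper model would spread importance over more hops.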
