Evaluating Machine Learning Approaches for ASCII Art Generation


Mar 18, 2025

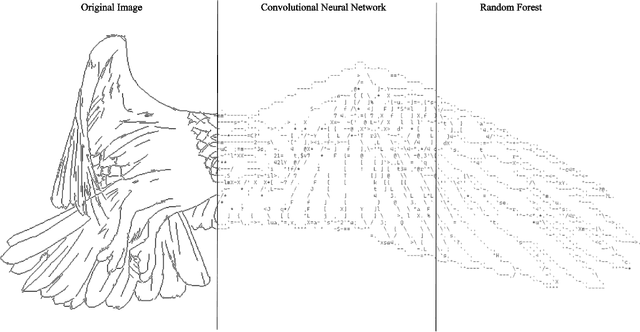

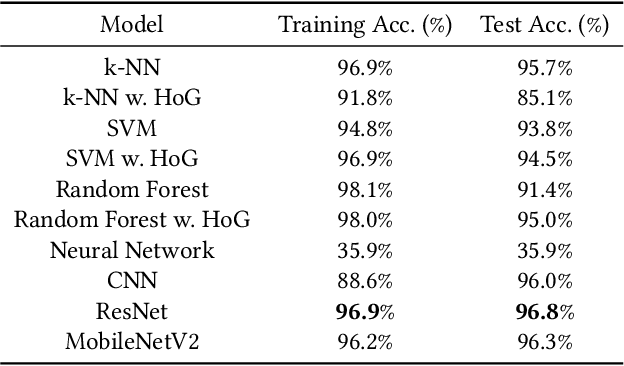

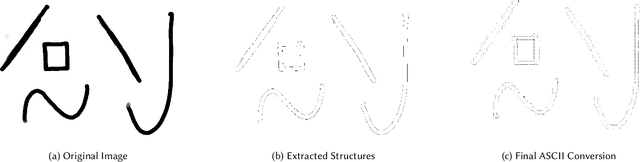

Generating structured ASCII art computationally demands a careful balance between aesthetic representation and precision, requiring models that can effectively translate visual information into text characters. Although Convolutional Neural Networks (CNNs) have shown promise in this domain, the comparative performance of deep learning architectures and classical machine learning methods remains unexplored. This paper explores the application of contemporary machine learning (ML) and deep learning (DL) methods to generate structured ASCII art, focusing on three key criteria: fidelity, character classification accuracy, and output quality. We investigate deep learning architectures, including Multilayer Perceptrons (MLPs), ResNet, and MobileNetV2, alongside classical approaches such as Random Forests, Support Vector Machines (SVMs), and k-Nearest Neighbors (k-NN), all trained on an augmented synthetic dataset of ASCII characters. Our results show that complex neural network architectures often fall short in producing high-quality ASCII art, whereas classical machine learning classifiers, despite their simplicity, achieve performance similar to CNNs. Our findings highlight the strength of classical methods in balancing model simplicity with output quality, offering new insights into ASCII art synthesis and machine learning on low-dimensional image data.
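To make the framing of ASCII art generation as per-tile character classification concrete, here is a minimal sketch of a 1-nearest-neighbor classifier over image tiles. It is an illustration only, not the paper's pipeline: the 3x3 glyph bitmaps, the character set, and the names `GLYPHS`, `to_ascii`, and `demo_image` are all hypothetical, and a realistic setup would presumably render glyphs from an actual font and train on augmented samples.

```python
import numpy as np

# Hypothetical 3x3 glyph bitmaps (1 = ink). These are illustrative stand-ins,
# not the synthetic dataset described in the abstract.
GLYPHS = {
    " ": [[0, 0, 0], [0, 0, 0], [0, 0, 0]],
    "-": [[0, 0, 0], [1, 1, 1], [0, 0, 0]],
    "|": [[0, 1, 0], [0, 1, 0], [0, 1, 0]],
    "/": [[0, 0, 1], [0, 1, 0], [1, 0, 0]],
    "#": [[1, 1, 1], [1, 1, 1], [1, 1, 1]],
}
CHARS = list(GLYPHS)
# One flattened template per character, shape (n_chars, 9).
TEMPLATES = np.array([np.ravel(GLYPHS[c]) for c in CHARS], dtype=float)


def to_ascii(image, tile=3):
    """Map each tile of a 2D grayscale array to its nearest glyph (1-NN).

    `tile` must match the template size (3 here); partial tiles at the
    right and bottom edges are ignored.
    """
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape[0] // tile, image.shape[1] // tile
    lines = []
    for r in range(rows):
        line = []
        for c in range(cols):
            patch = image[r * tile:(r + 1) * tile, c * tile:(c + 1) * tile].ravel()
            # Squared Euclidean distance from this patch to every template.
            dists = ((TEMPLATES - patch) ** 2).sum(axis=1)
            line.append(CHARS[int(np.argmin(dists))])
        lines.append("".join(line))
    return lines


def demo_image():
    """Build a 3x9 test image by placing three glyphs side by side."""
    return np.hstack([np.array(GLYPHS[c], dtype=float) for c in "|-#"])


if __name__ == "__main__":
    print("\n".join(to_ascii(demo_image())))
```

Because every tile is a short fixed-length vector (nine values in this sketch), distance-based classifiers are a natural fit for the problem, which is in line with the abstract's point about low-dimensional image data.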
