Evaluating Classification Systems Against Soft Labels with Fuzzy Precision and Recall

Sep 25, 2023

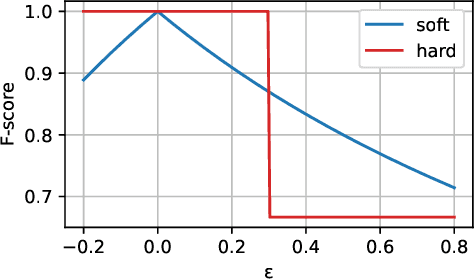

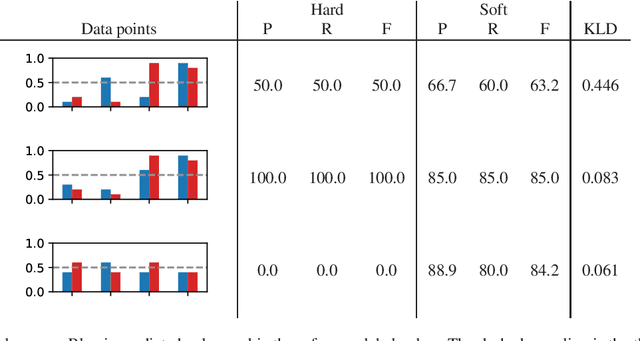

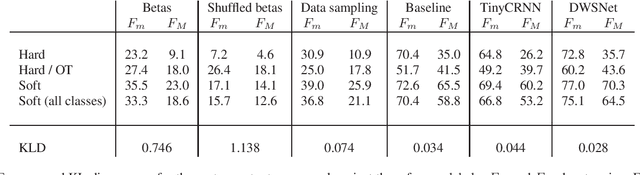

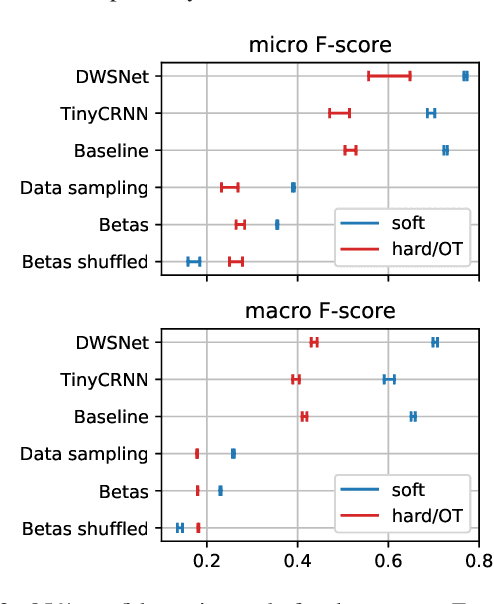

Classification systems are normally trained by minimizing the cross-entropy between system outputs and reference labels, which makes the Kullback-Leibler divergence a natural choice for measuring how closely the system follows the data. Precision and recall provide another perspective on the performance of a classification system. Non-binary references can arise from various sources, and it is often beneficial to train on the soft labels rather than on binarized data. However, the existing definitions of precision and recall require binary reference labels, and binarizing the data can lead to erroneous interpretations. We present a novel method to calculate precision, recall and F-score without quantizing the data. The proposed metrics extend the well-established metrics: the definitions coincide when used with binary labels. To understand the behavior of the metrics, we show simple example cases and an evaluation of different sound event detection models trained on real data with soft labels.
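The abstract does not spell out the proposed definitions, so the following is only a minimal sketch of one way precision and recall can be computed against soft labels without thresholding. It assumes the standard fuzzy-set intersection (element-wise minimum) as the "true positive" mass, which may differ from the definitions used in the paper. The function name `fuzzy_precision_recall` and the `eps` parameter are hypothetical, introduced here for illustration.

```python
import numpy as np

def fuzzy_precision_recall(reference, output, eps=1e-12):
    """Illustrative soft precision/recall/F-score (an assumption, not the paper's method).

    reference: soft reference labels in [0, 1]
    output:    system outputs in [0, 1] (binary or soft)
    """
    reference = np.asarray(reference, dtype=float)
    output = np.asarray(output, dtype=float)

    # Fuzzy intersection: how much of the output mass is supported by the reference.
    true_positive = np.minimum(reference, output).sum()

    # Normalize by the total output mass (precision) and reference mass (recall).
    precision = true_positive / max(output.sum(), eps)
    recall = true_positive / max(reference.sum(), eps)
    f_score = 2 * precision * recall / max(precision + recall, eps)
    return precision, recall, f_score

# Binary labels: the values reduce to the usual precision and recall.
p_bin, r_bin, f_bin = fuzzy_precision_recall([1, 0, 1, 1], [1, 1, 0, 1])

# Soft reference, binary output: no binarization of the reference is needed.
p_soft, r_soft, f_soft = fuzzy_precision_recall([0.5, 0.0, 1.0], [1, 0, 1])
```

With binary inputs, the element-wise minimum counts exactly the positions where both reference and output are 1, so this sketch coincides with the conventional definitions in that case, mirroring the property the abstract describes.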