Entropy-Regularized Partially Observed Markov Decision Processes

Dec 22, 2021

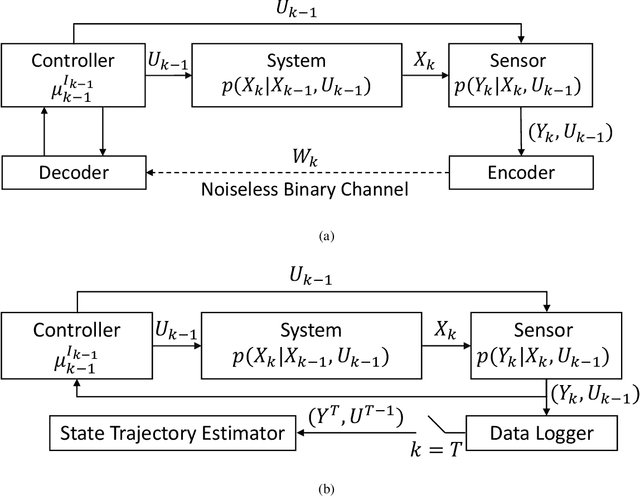

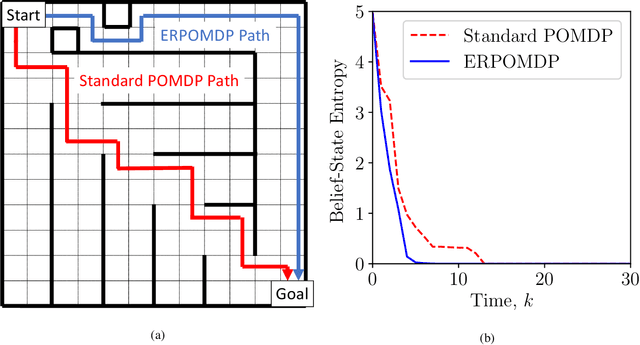

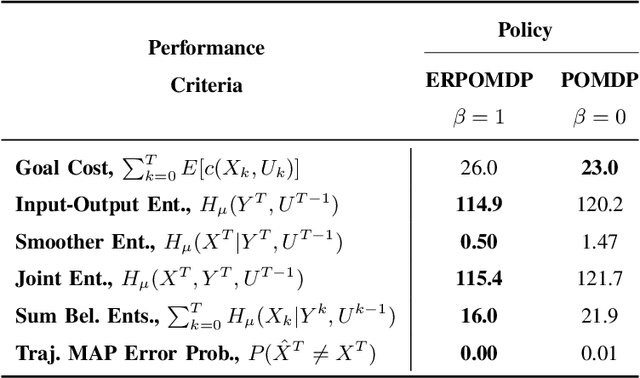

We investigate partially observed Markov decision processes (POMDPs) with cost functions regularized by entropy terms describing state, observation, and control uncertainty. Standard POMDP techniques are shown to offer bounded-error solutions to these entropy-regularized POMDPs, with exact solutions when the regularization involves the joint entropy of the state, observation, and control trajectories. Our joint-entropy result is particularly surprising since it constitutes a novel, tractable formulation of active state estimation.

* 20 pages, 2 figures, submitted
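
As a rough illustration of the "state uncertainty" the abstract refers to, the sketch below runs one step of the standard POMDP belief (Bayes filter) update and measures the Shannon entropy of the belief before and after. It is not taken from the paper: the two-state model, the matrices `T` and `O`, the weight `lam`, and the helper names `belief_update`, `entropy`, and `regularized_cost` are all hypothetical, and the additive cost form is only one simple way an entropy term could enter a stage cost.

```python
import numpy as np

def belief_update(belief, T, O, u, y):
    """One Bayes-filter step: predict with T[u], then correct with O[:, y]."""
    predicted = belief @ T[u]             # P(x' | past, u)
    unnormalized = predicted * O[:, y]    # weight by P(y | x')
    return unnormalized / unnormalized.sum()

def entropy(p):
    """Shannon entropy in nats, skipping zero-probability entries."""
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())

def regularized_cost(stage_cost, belief, lam):
    """Hypothetical stage cost plus an entropy penalty on the belief."""
    return stage_cost + lam * entropy(belief)

# Hypothetical model: T[u][x, x'] are transition probabilities,
# O[x', y] are observation probabilities.
T = np.array([[[0.9, 0.1], [0.1, 0.9]],
              [[0.5, 0.5], [0.5, 0.5]]])
O = np.array([[0.8, 0.2],
              [0.2, 0.8]])

b0 = np.array([0.5, 0.5])                 # uniform prior belief
b1 = belief_update(b0, T, O, u=0, y=0)    # belief after one control and observation

h0, h1 = entropy(b0), entropy(b1)
c0 = regularized_cost(1.0, b0, lam=0.5)
c1 = regularized_cost(1.0, b1, lam=0.5)
```

In this toy model the observation is informative, so the belief entropy drops after the update and the penalized cost drops with it. The paper's joint-entropy formulation concerns whole state, observation, and control trajectories, which this single-step sketch does not attempt to reproduce.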
