Enhancing the Traditional Chinese Medicine Capabilities of Large Language Model through Reinforcement Learning from AI Feedback

Paper and Code

Nov 01, 2024

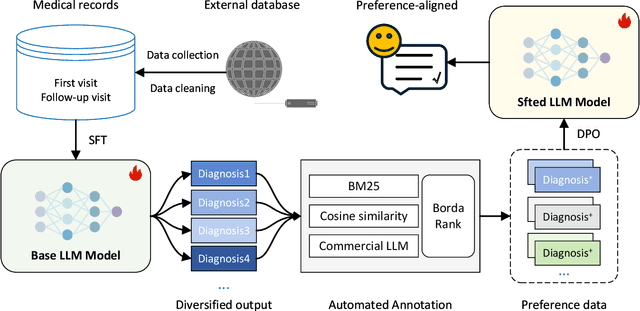

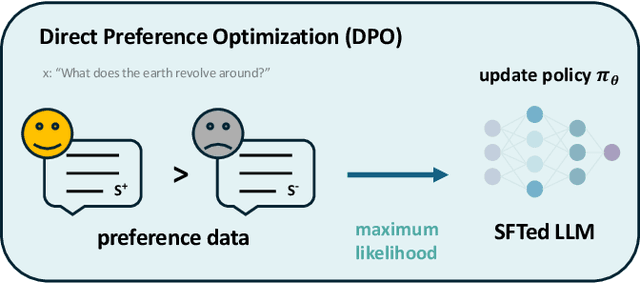

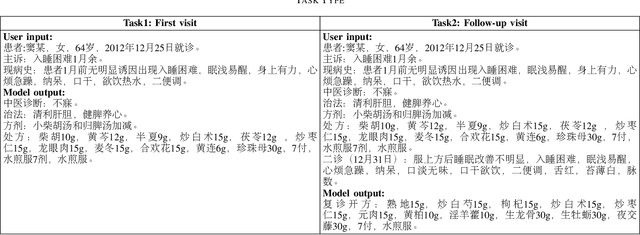

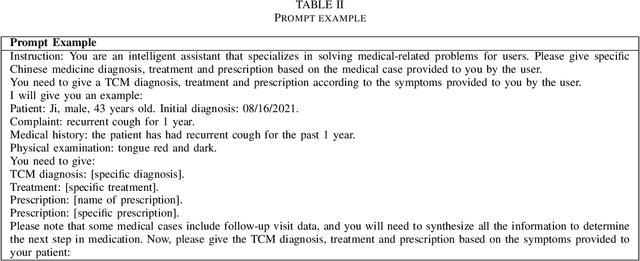

Although large language models perform well at understanding and responding to user intent, their performance in specialized domains such as Traditional Chinese Medicine (TCM) remains limited by a lack of domain expertise. High-quality TCM data is also scarce and difficult to obtain, which leaves large language models ineffective on TCM tasks. In this work, we propose a framework that improves the performance of large language models on TCM tasks using only a small amount of data. First, we perform supervised fine-tuning of the model on medical case data, giving it an initial ability to perform TCM tasks. We then further optimize the model with reinforcement learning from AI feedback (RLAIF), aligning it with preference data. An ablation study shows that the performance gain is attributable to both supervised fine-tuning and direct preference optimization (DPO). Experimental results show that the model, trained with a small amount of data, achieves a significant performance improvement on a representative TCM task.
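The abstract does not state the training objective, so the following is only a minimal sketch of the standard DPO loss for a single preference pair, assuming the preference-alignment stage follows the usual formulation. The function name `dpo_loss`, its argument names, and the default `beta=0.1` are illustrative assumptions, not details from the paper. Each argument is the summed log-probability of a full response under either the policy being trained or the frozen reference model (here, presumably the supervised fine-tuned model).

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) response pair.

    All names and the default beta are assumptions for illustration;
    the paper's actual hyperparameters are not given in the abstract.
    """
    # How much more the policy likes each response than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp

    # Scaled preference margin: positive when the policy favors the
    # chosen response more strongly than the reference model does.
    margin = beta * (chosen_logratio - rejected_logratio)

    # loss = -log(sigmoid(margin)), computed in a numerically stable way.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # Policy identical to the reference: margin is 0, loss is log(2).
    print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
    # Policy has shifted probability toward the chosen response: lower loss.
    print(dpo_loss(-10.0, -18.0, -12.0, -15.0))
```

In an RLAIF setting, the chosen and rejected labels would come from an AI judge rather than human annotators; the loss itself is unchanged by where the preference labels originate.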
