Enhance Multi-domain Sentiment Analysis of Review Texts through Prompting Strategies


Sep 05, 2023

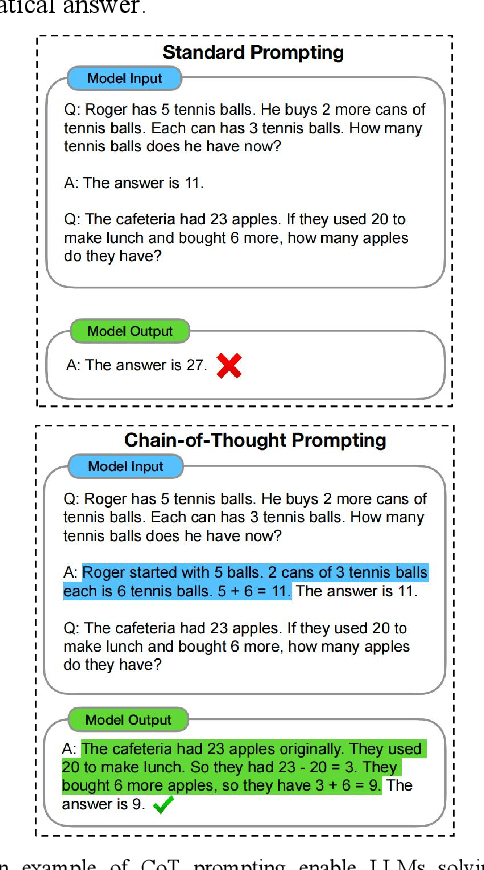

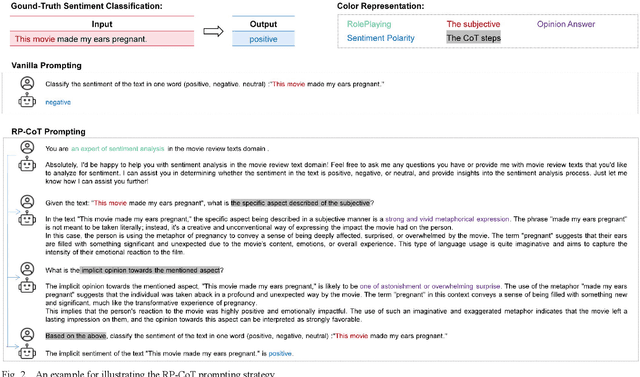

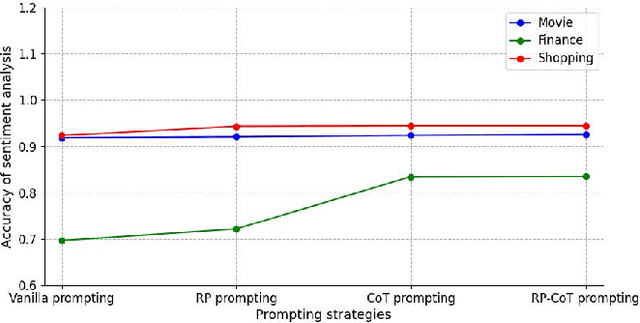

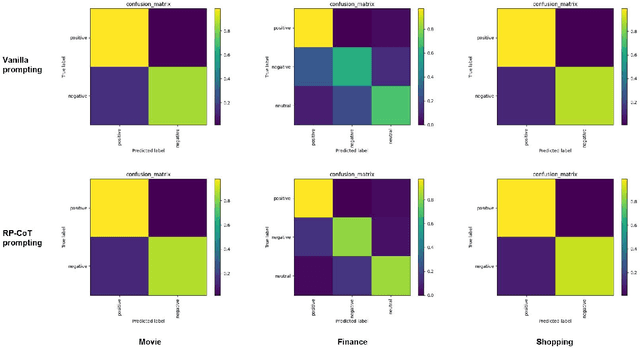

Large Language Models (LLMs) have made significant strides in both scientific research and practical applications. Existing studies have demonstrated the state-of-the-art (SOTA) performance of LLMs in various natural language processing tasks. However, how to further enhance LLMs' performance on specific tasks using prompting strategies remains an open and pivotal question. This paper explores how prompting strategies can improve LLMs' performance in sentiment analysis. We formulate the process of prompting for sentiment analysis tasks and introduce two novel strategies tailored for sentiment analysis: RolePlaying (RP) prompting and Chain-of-thought (CoT) prompting. We also propose the RP-CoT prompting strategy, which combines RP prompting and CoT prompting. We conduct comparative experiments on datasets from three distinct domains to evaluate the effectiveness of the proposed strategies. The results demonstrate that adopting the proposed prompting strategies improves sentiment analysis accuracy. Further, the CoT prompting strategy has a notable impact on implicit sentiment analysis, and the RP-CoT prompting strategy delivers the best performance among all strategies.
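As a rough illustration of how the three strategies relate, the sketch below assembles a baseline prompt and optionally adds a role description (RP), step-by-step reasoning instructions (CoT), or both (RP-CoT). The wording of the templates, the three-way label set, and the names `build_prompt` and `parse_label` are assumptions made for this example; the abstract does not give the paper's actual prompts.

```python
from typing import Optional

# Assumed label set; the abstract does not specify the labels used.
LABELS = ("positive", "negative", "neutral")

# Hypothetical template wording, not taken from the paper.
TASK = "Classify the sentiment of the following review as positive, negative, or neutral."
ROLE = "You are an experienced reviewer of {domain} products."
STEPS = (
    "Reason step by step: list the aspects the review discusses, "
    "state the opinion expressed about each, then give the final answer "
    "on the last line as 'Sentiment: <label>'."
)


def build_prompt(review: str, domain: str = "consumer",
                 role_play: bool = False, chain_of_thought: bool = False) -> str:
    """Assemble a prompt; both flags on corresponds to an RP-CoT style prompt."""
    parts = []
    if role_play:
        parts.append(ROLE.format(domain=domain))  # RP: set a persona first
    parts.append(TASK)
    if chain_of_thought:
        parts.append(STEPS)  # CoT: ask for intermediate reasoning
    parts.append(f"Review: {review}")
    return "\n".join(parts)


def parse_label(response: str) -> Optional[str]:
    """Return the label mentioned last in the model response, or None."""
    text = response.lower()
    positions = {label: text.rfind(label) for label in LABELS}
    label, pos = max(positions.items(), key=lambda kv: kv[1])
    return label if pos >= 0 else None
```

Taking the last mentioned label is a simple heuristic for CoT-style responses, where intermediate reasoning may name several labels before the final answer; a stricter parser would match only the `Sentiment:` line.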