End-Effect Exploration Drive for Effective Motor Learning

Jun 29, 2020

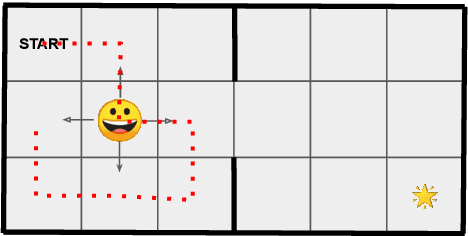

End-effect drives are proposed here as an effective way to implement goal-directed motor learning in the absence of an explicit forward model. An end-effect model relies on a simple statistical record of the effects of the current policy, used here as a substitute for more resource-demanding forward models. When combined with a reward structure, it forms the core of a lightweight variational free energy minimization setup. The main difficulty lies in maintaining this simplified effect model while the policy is updated online. When the prior target distribution is uniform, it provides a way to learn an efficient exploration policy, consistent with intrinsic curiosity principles. When combined with an extrinsic reward, our approach is finally shown to provide faster training than traditional off-policy techniques.
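
To make the idea concrete, here is a minimal sketch of one possible reading of the abstract. It is not the paper's implementation. It assumes a toy setting in which each discrete action deterministically produces one discrete end effect, an exponential moving average serves as the "statistical recording" of effects, and a REINFORCE-style update follows the drive log p*(e) - log q(e), where p* is a uniform target and q is the recorded effect distribution. All names (`train_end_effect_policy`, `model_rate`, the action-to-effect mapping) are hypothetical.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over a 1-D array."""
    z = np.exp(logits - logits.max())
    return z / z.sum()


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())


def effect_distribution(policy, action_to_effect, n_effects):
    """Exact distribution over end effects induced by a policy."""
    return np.bincount(action_to_effect, weights=policy, minlength=n_effects)


def train_end_effect_policy(action_to_effect, n_effects, n_steps=5000,
                            lr=0.1, model_rate=0.02, seed=0):
    """Toy end-effect drive with a uniform target (all choices are assumptions)."""
    rng = np.random.default_rng(seed)
    n_actions = len(action_to_effect)
    logits = np.zeros(n_actions)                         # softmax policy parameters
    effect_model = np.full(n_effects, 1.0 / n_effects)   # q(e): recorded effects
    target = np.full(n_effects, 1.0 / n_effects)         # p*(e): uniform prior target

    for _ in range(n_steps):
        pi = softmax(logits)
        a = rng.choice(n_actions, p=pi)
        e = action_to_effect[a]

        # Maintain the effect model online: moving average of observed effects.
        observed = np.zeros(n_effects)
        observed[e] = 1.0
        effect_model = (1.0 - model_rate) * effect_model + model_rate * observed

        # Drive: positive for effects that are rarer than the target asks for.
        drive = np.log(target[e]) - np.log(effect_model[e])

        # REINFORCE step: grad of log pi(a) w.r.t. logits is onehot(a) - pi.
        grad = -pi
        grad[a] += 1.0
        logits += lr * drive * grad

    return softmax(logits), effect_model


if __name__ == "__main__":
    # Five of eight actions collapse onto effect 0, so a uniform policy
    # over actions is far from uniform over effects.
    mapping = np.array([0, 0, 0, 0, 0, 1, 2, 3])
    policy, model = train_end_effect_policy(mapping, n_effects=4)
    print("effect distribution:", effect_distribution(policy, mapping, 4))
```

In this toy setting the policy and the effect model are updated in the same loop, which is the coupling the abstract identifies as the main difficulty: the recorded distribution always lags the policy that produced it, so `model_rate` and `lr` have to be chosen together.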
