Emotion Recognition System from Speech and Visual Information based on Convolutional Neural Networks


Feb 29, 2020

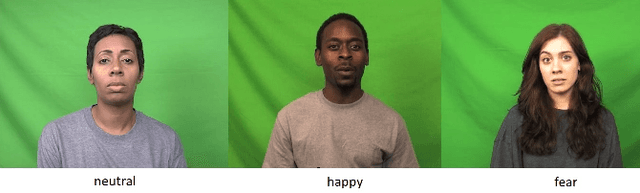

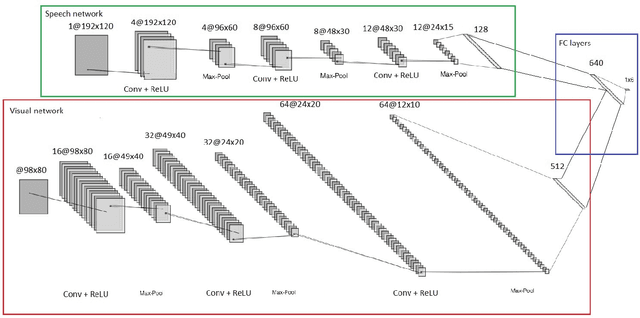

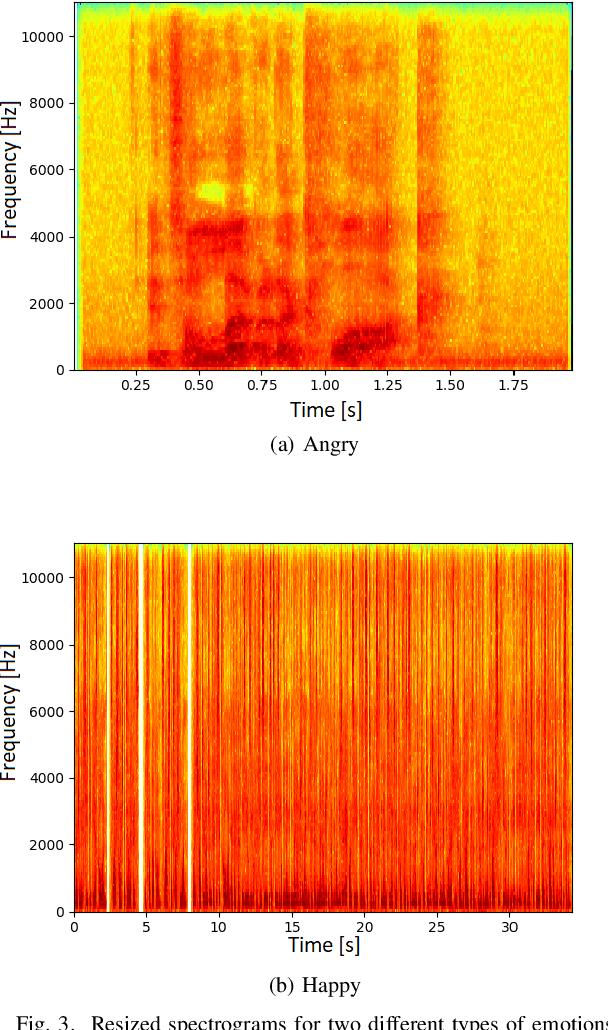

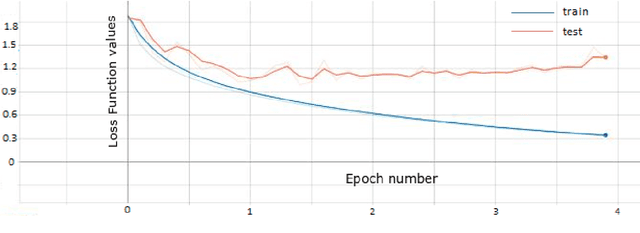

Emotion recognition has become an important research field in human-computer interaction. Recent advances show that combining visual and audio information leads to better results than using either source alone. From a visual point of view, a human emotion can be recognized by analyzing the person's facial expression. More precisely, it can be described as a combination of several Facial Action Units. In this paper, we propose a system based on deep Convolutional Neural Networks that recognizes emotions with a high accuracy rate and in real time. To increase the accuracy of the recognition system, we also analyze the speech data and fuse the information coming from both sources, i.e., visual and audio. Experimental results show the effectiveness of the proposed scheme for emotion recognition and the importance of combining visual with audio data.
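The abstract does not state how the visual and audio predictions are fused. As a rough illustration only, the sketch below shows one common option, late fusion, in which each modality produces class probabilities and the two are combined by a weighted average. The label set `EMOTIONS`, the functions `fuse_late` and `predict_emotion`, and the `visual_weight` parameter are all hypothetical names introduced here; they are not taken from the paper.

```python
from typing import Dict

# Hypothetical label set, chosen only for illustration.
EMOTIONS = ("angry", "happy", "neutral", "sad")


def fuse_late(
    visual: Dict[str, float],
    audio: Dict[str, float],
    visual_weight: float = 0.5,
) -> Dict[str, float]:
    """Weighted average of per-class probabilities from two modalities.

    This is an assumed fusion rule, not the method described in the paper.
    """
    if not 0.0 <= visual_weight <= 1.0:
        raise ValueError("visual_weight must lie in [0, 1]")
    audio_weight = 1.0 - visual_weight
    fused = {
        label: visual_weight * visual[label] + audio_weight * audio[label]
        for label in EMOTIONS
    }
    total = sum(fused.values())
    if total == 0.0:
        raise ValueError("fused scores sum to zero")
    # Renormalize so the fused scores form a probability distribution.
    return {label: score / total for label, score in fused.items()}


def predict_emotion(
    visual: Dict[str, float],
    audio: Dict[str, float],
    visual_weight: float = 0.5,
) -> str:
    """Return the label with the highest fused probability."""
    fused = fuse_late(visual, audio, visual_weight)
    return max(fused, key=fused.get)


if __name__ == "__main__":
    # Made-up probabilities standing in for the outputs of two classifiers.
    visual_probs = {"angry": 0.1, "happy": 0.6, "neutral": 0.2, "sad": 0.1}
    audio_probs = {"angry": 0.2, "happy": 0.3, "neutral": 0.4, "sad": 0.1}
    print(predict_emotion(visual_probs, audio_probs))
```

With `visual_weight=0.5` both modalities count equally. Shifting the weight toward 0 or 1 lets one source dominate, which is a simple way to check how much each modality contributes on a validation set.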