Embedded Encoder-Decoder in Convolutional Networks Towards Explainable AI

Paper and Code

Jun 19, 2020

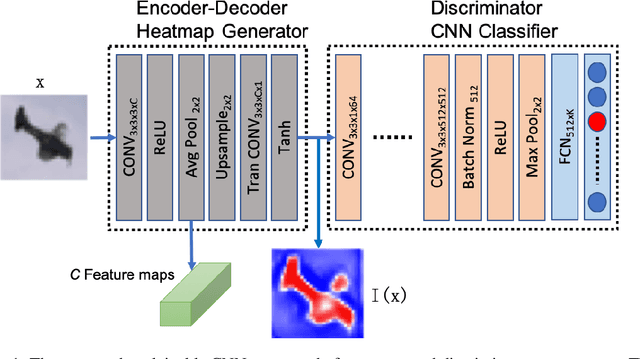

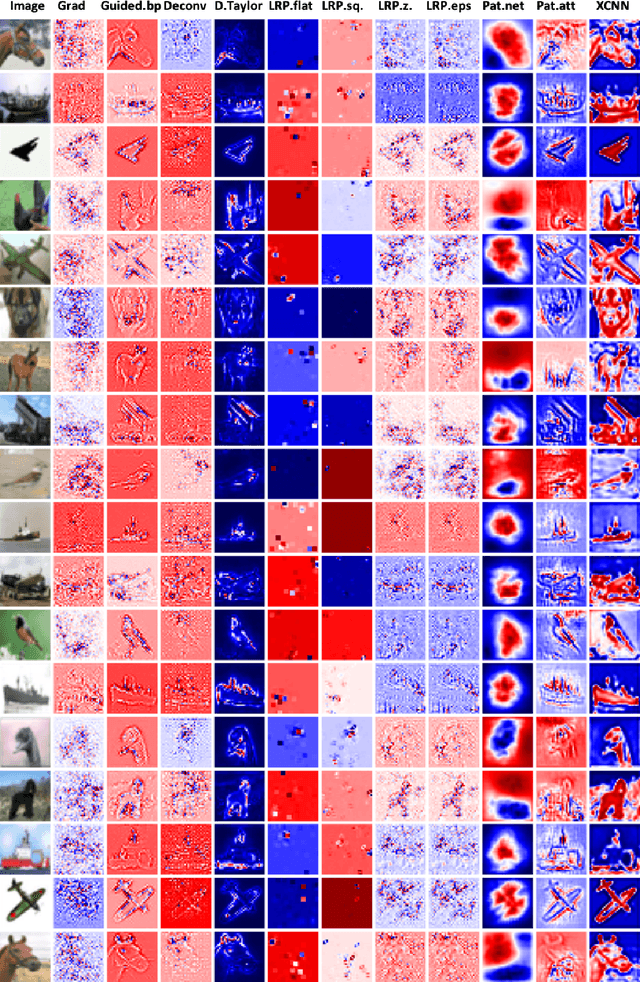

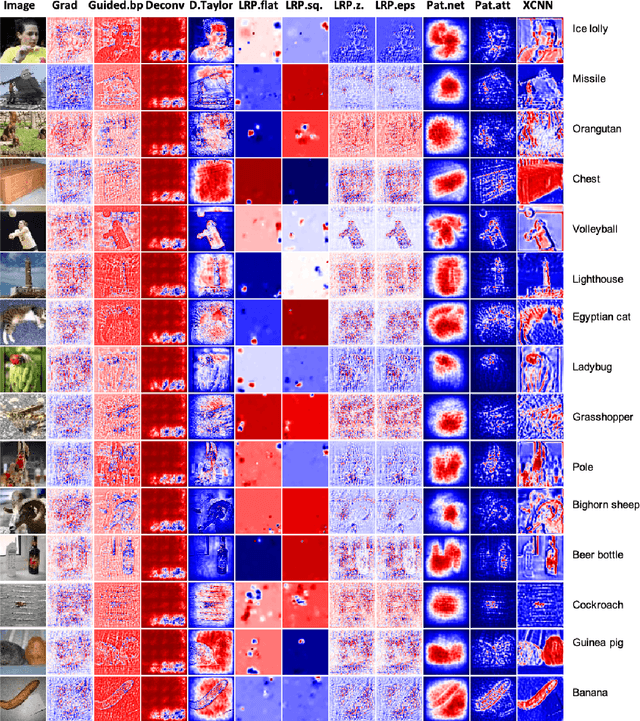

Understanding the intermediate layers of a deep learning model and discovering the driving features of stimuli have recently attracted much interest. Explainable artificial intelligence (XAI) provides a new way to open the AI black box and make a model's decisions transparent and interpretable. This paper proposes a new explainable convolutional neural network (XCNN) that represents the important, driving visual features of stimuli in an end-to-end model architecture. The network embeds an encoder-decoder in a CNN architecture to represent the regions of interest in an image according to its category. The proposed model is trained without localization labels and generates a heatmap as part of the network architecture, with no extra post-processing steps. Experimental results on the CIFAR-10, Tiny ImageNet, and MNIST datasets show that XCNN succeeds in making CNNs explainable. Based on visual assessment, the proposed model outperforms current algorithms in class-specific feature representation and interpretable heatmap generation while keeping the network architecture simple and flexible. The initial success of this approach warrants further study to enhance weakly supervised localization and semantic segmentation in explainable frameworks.
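
The data flow described in the abstract can be illustrated with a minimal forward-pass sketch in NumPy. It is not the authors' implementation: the layer sizes, the pooling-based encoder, the nearest-neighbour decoder, and in particular the choice to weight the input by the heatmap before classification are all assumptions made for illustration, and every function and parameter name (`encode`, `decode`, `classify`, `xcnn_forward`, `params`) is hypothetical. The only point it tries to show is that the heatmap is an intermediate tensor of the network itself, so it is available after a single forward pass without post-processing.

```python
import numpy as np

rng = np.random.default_rng(0)

def encode(image, w_enc):
    """Downsample 2x by average pooling, then mix channels (a 1x1 convolution)."""
    c, h, w = image.shape
    pooled = image.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))
    return np.maximum(np.einsum("oc,chw->ohw", w_enc, pooled), 0.0)  # ReLU

def decode(features, w_dec):
    """Upsample 2x (nearest neighbour) and project to a one-channel heatmap."""
    up = features.repeat(2, axis=1).repeat(2, axis=2)
    logits = np.einsum("c,chw->hw", w_dec, up)
    return 1.0 / (1.0 + np.exp(-logits))  # sigmoid keeps values in [0, 1]

def classify(image, heatmap, w_cls):
    """Assumed coupling: weight the image by the heatmap, pool, then linear + softmax."""
    focused = image * heatmap            # (H, W) heatmap broadcasts over channels
    pooled = focused.mean(axis=(1, 2))   # global average pooling -> (C,)
    scores = w_cls @ pooled
    exp = np.exp(scores - scores.max())  # numerically stable softmax
    return exp / exp.sum()

def xcnn_forward(image, params):
    """Return both the heatmap and the class probabilities from one forward pass."""
    heatmap = decode(encode(image, params["enc"]), params["dec"])
    probs = classify(image, heatmap, params["cls"])
    return heatmap, probs

# Random, untrained weights; shapes mimic a 32x32 RGB image with 10 classes.
params = {
    "enc": rng.normal(size=(8, 3)),
    "dec": rng.normal(size=8),
    "cls": rng.normal(size=(10, 3)),
}
image = rng.random((3, 32, 32))
heatmap, probs = xcnn_forward(image, params)
print(heatmap.shape, probs.shape)
```

In a trainable version of this sketch, only the class label would be needed as supervision: the classification loss on `probs` would be backpropagated through `classify` into the encoder-decoder weights, which is one way to read "trained without localization labels".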
