ELM-Based Distributed Cooperative Learning Over Networks

Paper and Code

Nov 30, 2015

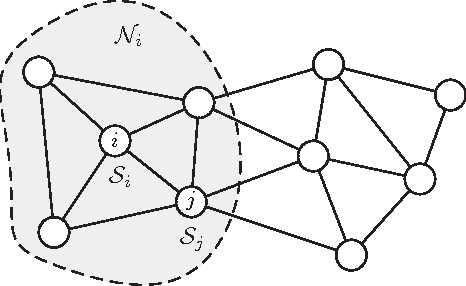

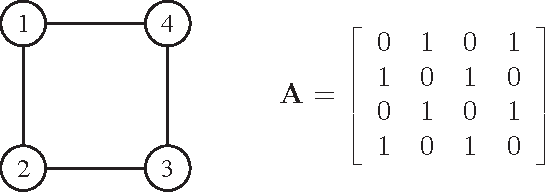

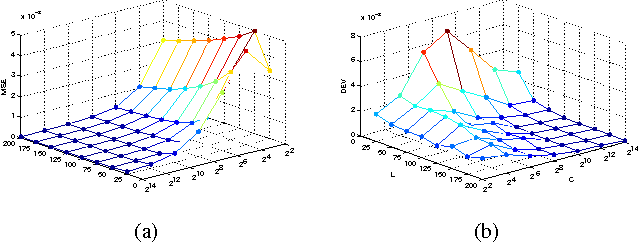

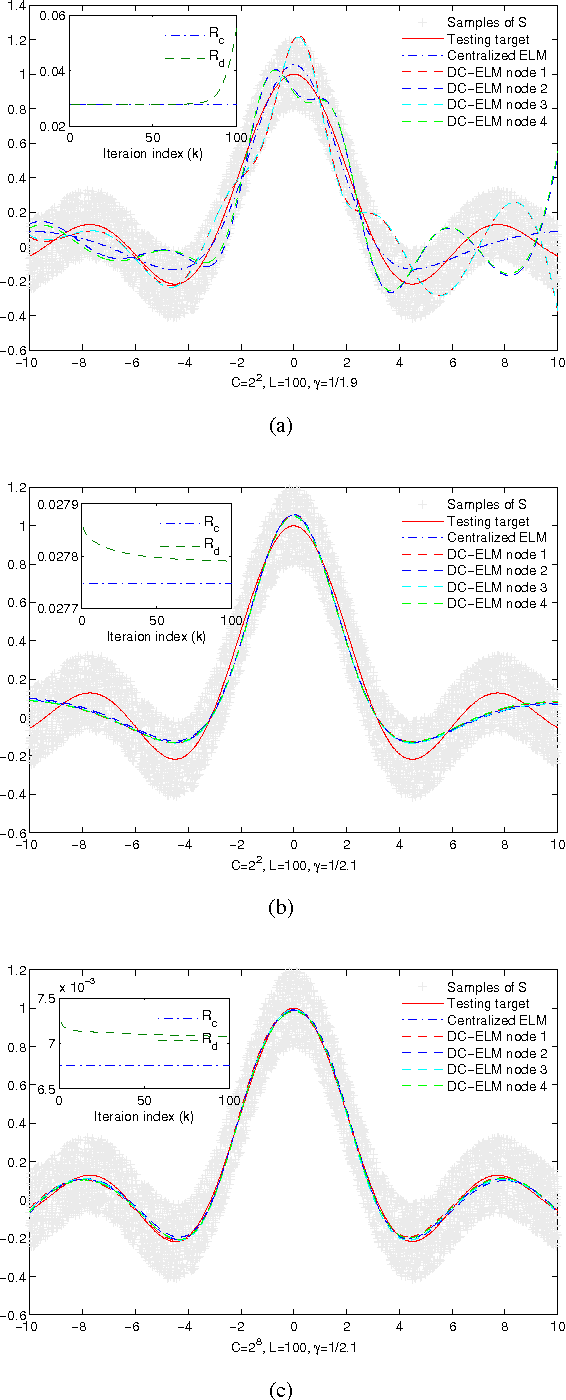

This paper investigates distributed cooperative learning algorithms for data processing in a network setting. Specifically, the extreme learning machine (ELM) is used to learn from data distributed across several components (nodes), each of which runs a program on its own subset of the data. The scheme requires no fusion center in the network, which may be unavailable because of, e.g., practical limitations or security and privacy concerns. We first reformulate the centralized ELM training problem into a form that is separable among nodes and coupled by consensus constraints. We then solve the equivalent problem using distributed optimization tools. A new distributed cooperative learning algorithm based on ELM, called DC-ELM, is proposed. Its architecture differs from that of existing parallel/distributed ELMs based on MapReduce or cloud computing. We also present an online version of the proposed algorithm that can learn data sequentially, either one-by-one or chunk-by-chunk. The algorithm is well suited to potential applications in artificial intelligence, computational biology, finance, wireless sensor networks, and similar areas, where datasets are often extremely large, high-dimensional, and spread over distributed data sources. We show simulation results on both synthetic and real-world datasets.
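To make the idea of training without a fusion center concrete, here is a minimal sketch in Python with NumPy. It is not the DC-ELM update rule from the paper, which the abstract does not spell out. Instead it swaps in plain average consensus on each node's local statistics (`H_i^T H_i` and `H_i^T T_i`), after which every node solves the same regularized least-squares problem. The function names, the ring topology, the sigmoid activation, the consensus step size, and the assumption that all nodes share the same random hidden-layer weights are illustrative choices, not details taken from the source.

```python
import numpy as np

def hidden_layer(X, W, b):
    """ELM hidden-layer output using a sigmoid activation (assumed choice)."""
    return 1.0 / (1.0 + np.exp(-(X @ W + b)))

def centralized_elm(H, T, C):
    """Regularized ELM output weights: (I/C + H^T H)^{-1} H^T T."""
    n_hidden = H.shape[1]
    return np.linalg.solve(np.eye(n_hidden) / C + H.T @ H, H.T @ T)

def consensus_elm(H_parts, T_parts, adjacency, C, step=0.3, iters=200):
    """Illustrative stand-in for a distributed ELM (not the paper's DC-ELM).

    Each node averages its local statistics with its neighbours only,
    then solves the regularized least-squares problem on its own.
    """
    n_nodes = len(H_parts)
    n_hidden = H_parts[0].shape[1]
    # Local statistics, stacked along the node axis.
    P = np.stack([H.T @ H for H in H_parts])
    q = np.stack([H.T @ T for H, T in zip(H_parts, T_parts)])
    # Mixing matrix I - step * Laplacian; step must be below 1 / max degree.
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    mix = np.eye(n_nodes) - step * laplacian
    for _ in range(iters):
        # Row i of mix is nonzero only for node i and its neighbours.
        P = np.einsum("ij,jkl->ikl", mix, P)
        q = np.einsum("ij,jkl->ikl", mix, q)
    # Each node rescales its averaged statistics back to network-wide sums.
    return [
        np.linalg.solve(np.eye(n_hidden) / C + n_nodes * P[i], n_nodes * q[i])
        for i in range(n_nodes)
    ]

# Synthetic regression data (hypothetical example, not from the paper).
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
T = np.sin(3.0 * X[:, :1]) + X[:, 1:] ** 2

# Random hidden layer, assumed identical on every node (e.g. a shared seed).
W = rng.normal(size=(2, 10))
b = rng.normal(size=10)
H = hidden_layer(X, W, b)

# Split the samples across 4 nodes connected in a ring.
n_nodes = 4
H_parts = np.array_split(H, n_nodes)
T_parts = np.array_split(T, n_nodes)
adjacency = np.zeros((n_nodes, n_nodes))
for i in range(n_nodes):
    j = (i + 1) % n_nodes
    adjacency[i, j] = adjacency[j, i] = 1.0

beta_central = centralized_elm(H, T, C=1.0)
betas = consensus_elm(H_parts, T_parts, adjacency, C=1.0)
max_gap = max(np.abs(beta_i - beta_central).max() for beta_i in betas)
print("largest gap to the centralized solution:", max_gap)
```

In this sketch, nodes exchange only aggregated matrices with their neighbours, never raw samples, and each node's output weights approach the centralized solution as the consensus iterations proceed. Exchanging an `n_hidden × n_hidden` matrix per round is a simplification; an algorithm that iterates directly on the output weights would communicate less per round.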
