$\ell_1$-norm constrained multi-block sparse canonical correlation analysis via proximal gradient descent


Jan 14, 2022

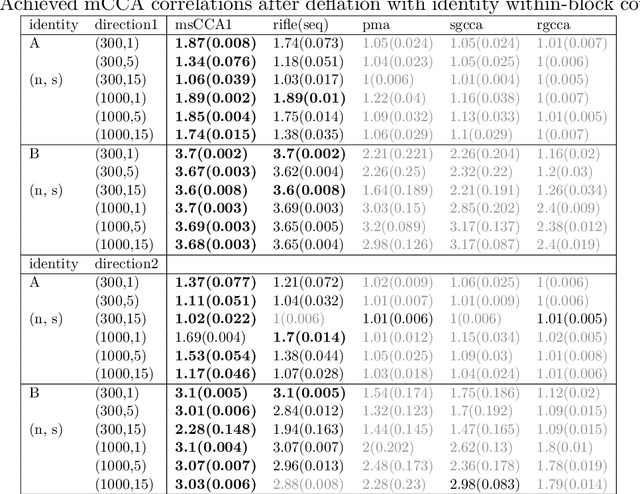

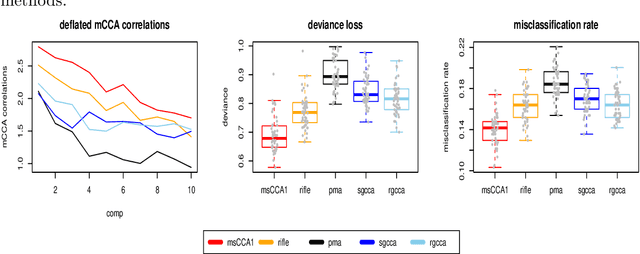

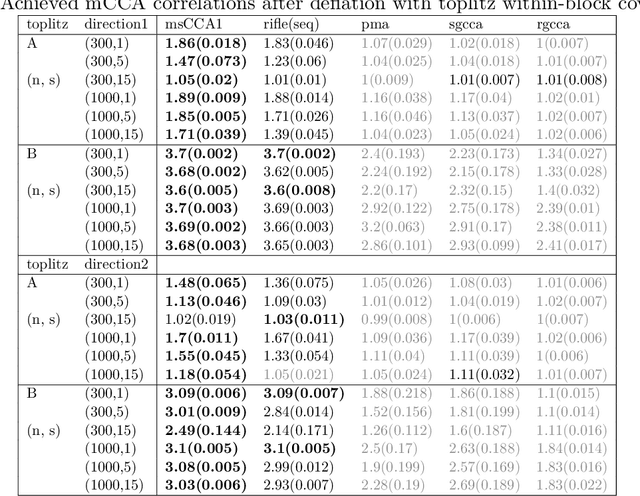

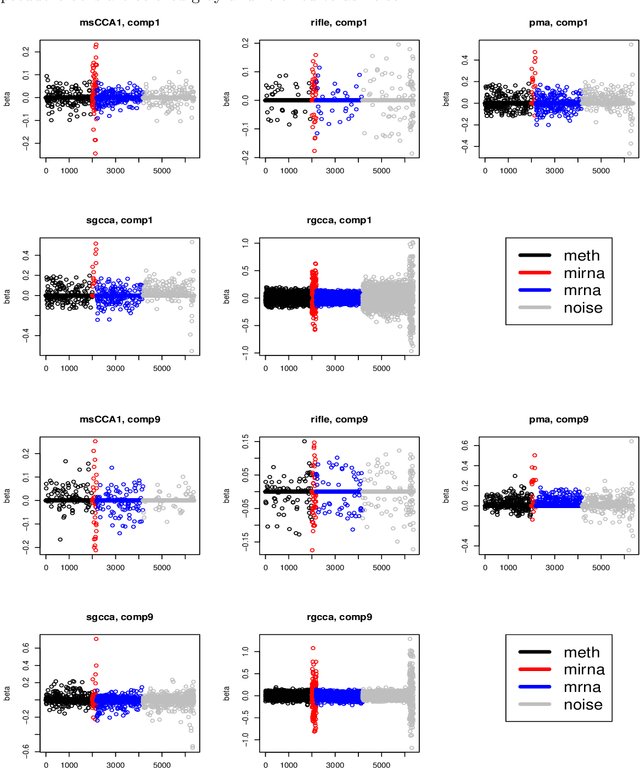

Multi-block canonical correlation analysis (CCA) constructs linear relationships that explain coherent variations across multiple blocks of data. We view the multi-block CCA problem as finding leading generalized eigenvectors and propose to solve it via a proximal gradient descent algorithm with an $\ell_1$ constraint for high-dimensional data. In particular, we use a decaying sequence of constraints over proximal iterations, and show that the resulting estimate is rate-optimal under suitable assumptions. Although several previous works have demonstrated such optimality for the $\ell_0$-constrained problem using iterative approaches, the same level of theoretical understanding for the $\ell_1$-constrained formulation is still lacking. We also describe an easy-to-implement deflation procedure to estimate multiple eigenvectors sequentially. We compare our proposals to several existing methods whose implementations are available on CRAN, and the proposed methods show competitive performance in both simulations and a real data example.
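To make the idea concrete, the following is a minimal sketch of an $\ell_1$-constrained gradient iteration for a leading generalized eigenvector of a matrix pair $(A, B)$. It is an illustration only, not the algorithm analyzed in the paper. The function names `project_l1_ball` and `sparse_gev`, the Rayleigh-quotient gradient, the renormalization step, the step size, and the linearly decaying radius schedule are all assumptions made for this example. Taking $A$ to hold the cross-block covariances and $B$ the block-diagonal within-block covariances is one common way to pose multi-block CCA as a generalized eigenproblem, and is likewise assumed here.

```python
import numpy as np


def project_l1_ball(v, radius):
    """Euclidean projection of v onto {x : ||x||_1 <= radius}, radius > 0.

    Uses the standard sort-based soft-thresholding construction.
    """
    if np.abs(v).sum() <= radius:
        return v.copy()
    u = np.sort(np.abs(v))[::-1]           # magnitudes, descending
    css = np.cumsum(u)
    k = np.arange(1, len(u) + 1)
    rho = np.nonzero(u * k > css - radius)[0][-1]
    theta = (css[rho] - radius) / (rho + 1.0)
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)


def sparse_gev(A, B, x0, radii, step=0.5):
    """Hypothetical sketch: gradient step on the Rayleigh quotient,
    projection onto an l1 ball whose radius follows `radii`,
    then rescaling so that x' B x = 1.

    Note: after rescaling, ||x||_1 may exceed the current radius.
    """
    x = x0 / np.sqrt(x0 @ B @ x0)
    for r in radii:
        rq = (x @ A @ x) / (x @ B @ x)      # current Rayleigh quotient
        grad = A @ x - rq * (B @ x)         # ascent direction (up to scale)
        x = project_l1_ball(x + step * grad, r)
        x = x / np.sqrt(x @ B @ x)
    return x


# Toy example (assumed setup): two blocks of 3 variables each,
# identity within-block covariance, one correlated pair (variables 0 and 3).
C = np.zeros((3, 3))
C[0, 0] = 0.9
A = np.block([[np.zeros((3, 3)), C], [C.T, np.zeros((3, 3))]])
B = np.eye(6)
radii = np.linspace(2.0, 1.5, 100)          # assumed decaying schedule
x_hat = sparse_gev(A, B, np.ones(6), radii)
```

In this toy problem the estimate concentrates on coordinates 0 and 3, the only correlated pair. The radius schedule and step size here are picked by hand; the theoretical guarantees stated in the abstract concern the authors' specific schedule and assumptions, which this sketch does not reproduce.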
