Elastic regularization in restricted Boltzmann machines: Dealing with $p\gg N$


Oct 21, 2015

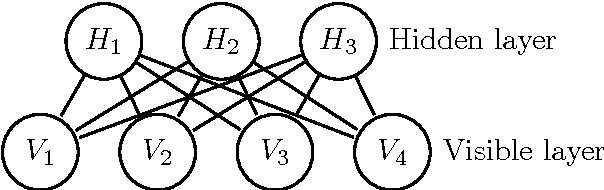

Restricted Boltzmann machines (RBMs) can in principle model any (binary) joint distribution. Moreover, because of their restricted network structure, training RBMs poses fewer difficulties with approximation and inference than training more complicated models such as general Boltzmann machines. However, in certain computational biology scenarios, such as cancer data analysis, RBMs may lose their efficacy in modeling data features because of the "$p\gg N$" problem, in which the number of features/predictors is much larger than the sample size. The "$p\gg N$" problem makes the bias-variance trade-off even more critical when designing statistical learning methods. In this manuscript, we address this problem by proposing a novel RBM model, called the elastic restricted Boltzmann machine (eRBM), which incorporates an elastic regularization term into the likelihood/cost function. We provide several theoretical analyses of the superiority of our model. Furthermore, thanks to the classic contrastive divergence (CD) algorithm, eRBMs can be trained efficiently. Our model is a promising method for future cancer data analysis.
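To make the idea of adding an elastic regularization term to CD training concrete, here is a minimal NumPy sketch of a single CD-1 update for a binary RBM. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not give the exact penalty, so the sketch assumes the standard elastic-net form $\lambda_1\|W\|_1 + \tfrac{\lambda_2}{2}\|W\|_F^2$ applied to the weight matrix only. The function names (`elastic_penalty_grad`, `cd1_elastic_step`) and the hyperparameters `lr`, `l1`, and `l2` are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def elastic_penalty_grad(W, l1, l2):
    """(Sub)gradient of the assumed penalty l1*||W||_1 + 0.5*l2*||W||_F^2."""
    return l1 * np.sign(W) + l2 * W


def cd1_elastic_step(W, b, c, V, lr=0.05, l1=1e-3, l2=1e-3, rng=None):
    """One CD-1 update on a batch V (N x p) of binary visible vectors.

    W: (p x h) weights, b: (p,) visible biases, c: (h,) hidden biases.
    Only W is penalized (an assumption; biases are left unregularized).
    """
    rng = np.random.default_rng() if rng is None else rng
    n = V.shape[0]

    # Positive phase: hidden activation probabilities given the data.
    ph0 = sigmoid(V @ W + c)
    h0 = (rng.random(ph0.shape) < ph0).astype(float)

    # Negative phase: one Gibbs step (sample visibles, recompute hiddens).
    pv1 = sigmoid(h0 @ W.T + b)
    v1 = (rng.random(pv1.shape) < pv1).astype(float)
    ph1 = sigmoid(v1 @ W + c)

    # CD-1 estimate of the log-likelihood gradient.
    grad_W = (V.T @ ph0 - v1.T @ ph1) / n
    grad_b = (V - v1).mean(axis=0)
    grad_c = (ph0 - ph1).mean(axis=0)

    # Ascend the likelihood estimate while descending the elastic penalty.
    W_new = W + lr * (grad_W - elastic_penalty_grad(W, l1, l2))
    b_new = b + lr * grad_b
    c_new = c + lr * grad_c
    return W_new, b_new, c_new


# Toy usage in the p >> N regime: 5 samples, 20 binary features, 4 hidden units.
rng = np.random.default_rng(0)
V = (rng.random((5, 20)) < 0.5).astype(float)
W = 0.01 * rng.standard_normal((20, 4))
b = np.zeros(20)
c = np.zeros(4)
W, b, c = cd1_elastic_step(W, b, c, V, rng=rng)
```

In this sketch the L1 term pushes individual weights toward zero while the squared L2 term shrinks all weights smoothly, which is the usual motivation for combining the two when features far outnumber samples.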
