Ego-Motion Alignment from Face Detections for Collaborative Augmented Reality


Oct 05, 2020

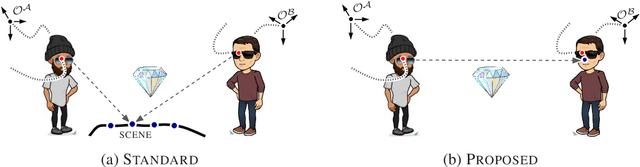

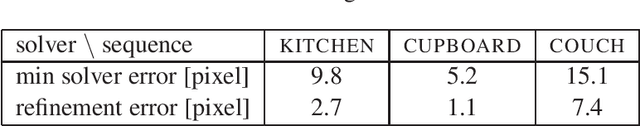

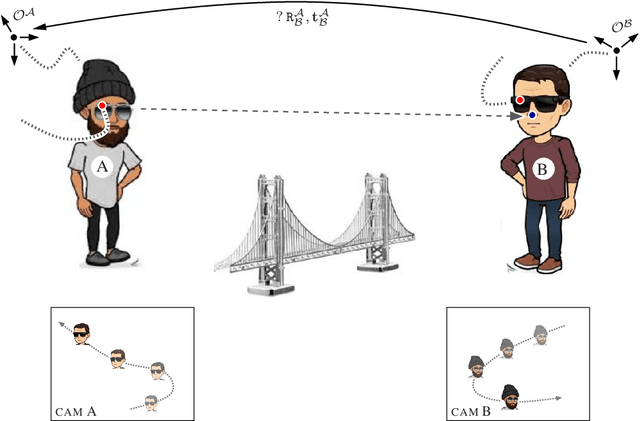

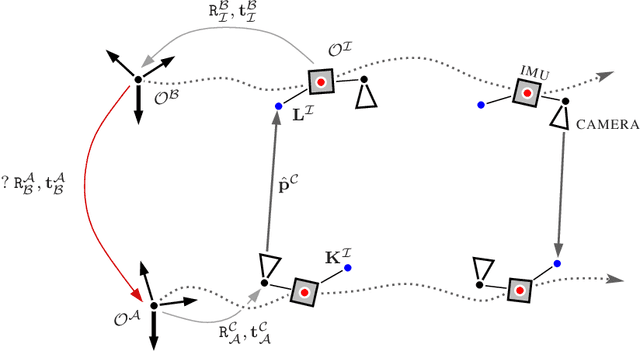

Sharing virtual content among multiple smart glasses wearers is an essential feature of a seamless Collaborative Augmented Reality experience. To enable this sharing, the local coordinate systems of the underlying 6D ego-pose trackers, which run independently on each set of glasses, have to be spatially and temporally aligned with respect to each other. In this paper, we propose a novel lightweight solution for this problem, which is referred to as ego-motion alignment. We show that detecting each other's face or glasses, together with the tracker ego-poses, sufficiently conditions the problem to spatially relate the local coordinate systems. Importantly, the detected glasses can serve as reliable anchors that bring sufficient accuracy for the targeted practical use. The proposed idea allows us to abandon the traditional visual localization step with fiducial markers or scene points as anchors. A novel closed-form minimal solver, which solves a Quadratic Eigenvalue Problem, is derived, and its refinement with Gaussian Belief Propagation is introduced. Experiments validate the presented approach and show its high practical potential.
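
The abstract does not give the matrices of the minimal solver, so the following is only a generic sketch of how a Quadratic Eigenvalue Problem of the form (λ²M + λC + K)x = 0 can be solved numerically, not the paper's solver. The function name `solve_qep`, the matrix names `M`, `C`, `K`, and the assumption that `M` is invertible are all illustrative choices made here. The sketch uses the standard companion linearization, which turns the n-dimensional quadratic problem into a 2n-dimensional ordinary eigenvalue problem.

```python
import numpy as np


def solve_qep(M, C, K):
    """Solve (lam^2 * M + lam * C + K) x = 0 by companion linearization.

    Illustrative assumption: M is square and invertible. With z = [x, lam * x],
    the quadratic problem becomes the standard eigenproblem A z = lam * z.
    """
    n = M.shape[0]
    Minv_K = np.linalg.solve(M, K)  # M^{-1} K without forming an explicit inverse
    Minv_C = np.linalg.solve(M, C)  # M^{-1} C
    A = np.block([
        [np.zeros((n, n)), np.eye(n)],
        [-Minv_K, -Minv_C],
    ])
    lams, Z = np.linalg.eig(A)  # 2n eigenvalues, eigenvectors as columns
    X = Z[:n, :]                # top block of each z is the QEP eigenvector x
    return lams, X


if __name__ == "__main__":
    # Scalar example: lam^2 - 3*lam + 2 = 0
    M = np.array([[1.0]])
    C = np.array([[-3.0]])
    K = np.array([[2.0]])
    lams, X = solve_qep(M, C, K)
    print(np.sort(lams.real))
```

In a minimal-solver setting, one would typically keep only the real eigenvalues and pick the candidate that best agrees with the measurements; how the paper selects its solution is not stated in the abstract.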
