Efficient Transfer Learning Schemes for Personalized Language Modeling using Recurrent Neural Network

Paper and Code

Jan 13, 2017

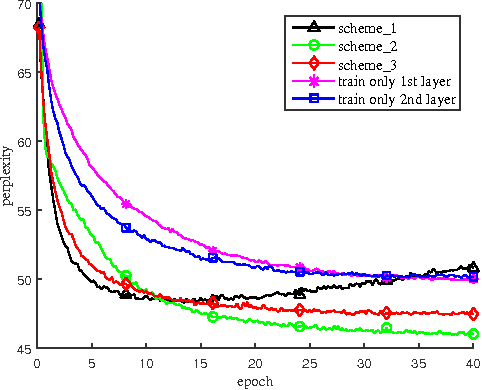

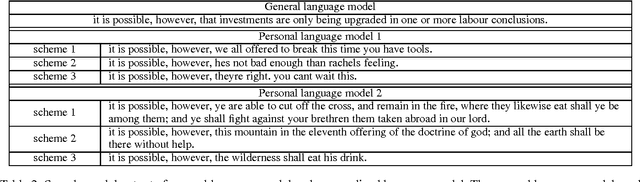

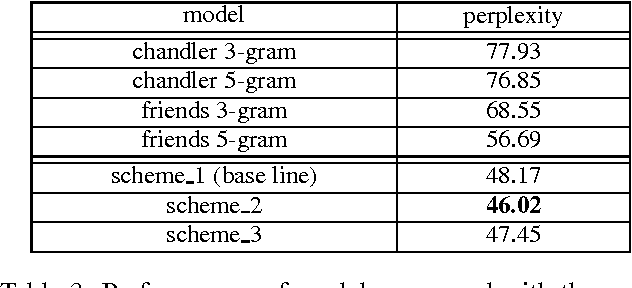

In this paper, we propose efficient transfer learning methods for training a personalized language model using a recurrent neural network with long short-term memory (LSTM) architecture. With the proposed fast transfer learning schemes, a general language model is updated into a personalized language model using a small amount of user data and limited computing resources. These methods are especially useful in a mobile device environment, where user data is prevented from leaving the device for privacy reasons. Experiments on dialogue data from a drama verify that our transfer learning methods successfully generate a personalized language model whose output is more similar to the individual's language style, both qualitatively and quantitatively.
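
The abstract does not spell out the individual schemes, so the following is only a minimal sketch of one common way to update a general model cheaply: keep the lower parameters frozen and fine-tune only the output layer on a small amount of user data. It is not the authors' implementation. For brevity, a fixed embedding table stands in for the frozen layers of a general LSTM model, and the names `TinyLM`, `fine_tune_output`, and `user_pairs` are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class TinyLM:
    """Toy next-token model (assumption: a stand-in, not an LSTM).

    `embed` plays the role of the frozen general-model layers;
    `out` is the only part updated during personalization.
    """

    def __init__(self, vocab_size, dim, seed=0):
        rng = random.Random(seed)
        # Frozen parameters: never modified after construction.
        self.embed = [[rng.uniform(-1, 1) for _ in range(dim)]
                      for _ in range(vocab_size)]
        # Trainable output layer, initialized to zeros (uniform predictions).
        self.out = [[0.0] * dim for _ in range(vocab_size)]

    def probs(self, token):
        h = self.embed[token]
        logits = [sum(w * x for w, x in zip(row, h)) for row in self.out]
        return softmax(logits)

    def loss(self, pairs):
        """Average cross-entropy over (current_token, next_token) pairs."""
        return -sum(math.log(self.probs(a)[b]) for a, b in pairs) / len(pairs)

    def fine_tune_output(self, pairs, lr=0.1, epochs=200):
        """Plain SGD on the output layer only; `embed` stays untouched."""
        for _ in range(epochs):
            for a, b in pairs:
                p = self.probs(a)
                h = self.embed[a]
                for k, row in enumerate(self.out):
                    grad = p[k] - (1.0 if k == b else 0.0)
                    for j in range(len(row)):
                        row[j] -= lr * grad * h[j]


# Hypothetical "user data": a handful of (token, next_token) id pairs.
user_pairs = [(0, 1), (1, 2), (2, 0), (0, 1)]

model = TinyLM(vocab_size=3, dim=4)
frozen_before = [row[:] for row in model.embed]
loss_before = model.loss(user_pairs)
model.fine_tune_output(user_pairs)
loss_after = model.loss(user_pairs)
print(loss_before, loss_after)
```

Restricting the update to the output layer keeps the number of trained parameters small, which is one plausible reading of "limited computing resources" on a mobile device. The average cross-entropy computed by `loss` is also one possible quantitative measure of how closely a model matches the user's text.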
