Efficient Image Compression Using Advanced State Space Models

Sep 04, 2024

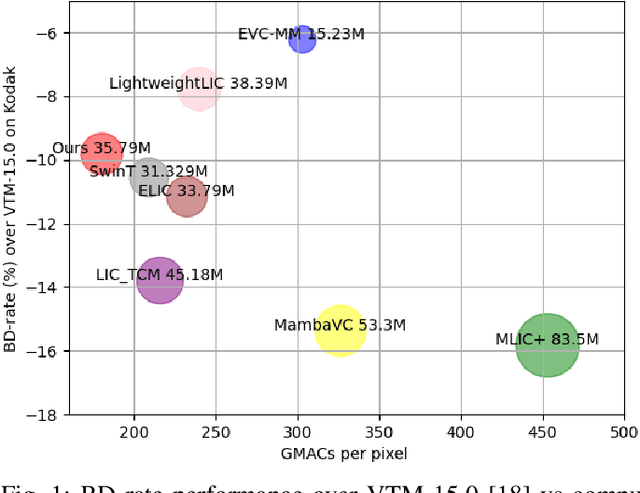

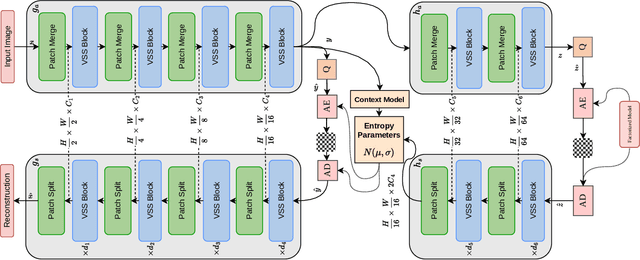

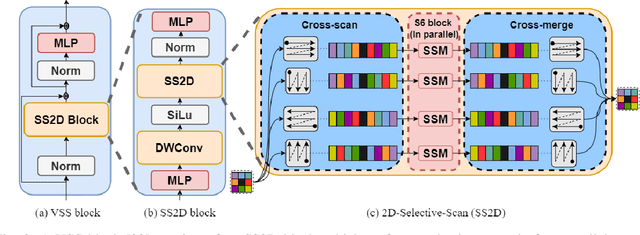

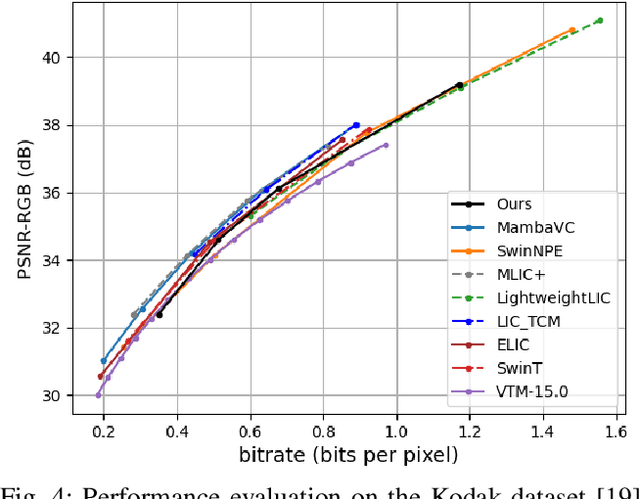

Transformers have led to learning-based image compression methods that outperform traditional approaches. However, these methods often suffer from high complexity, limiting their practical application. To address this, various strategies such as knowledge distillation and lightweight architectures have been explored, aiming to enhance efficiency without significantly sacrificing performance. This paper proposes a State Space Model-based Image Compression (SSMIC) architecture. This novel architecture balances performance and computational efficiency, making it suitable for real-world applications. Experimental evaluations confirm the effectiveness of our model in achieving a superior BD-rate while significantly reducing computational complexity and latency compared to competitive learning-based image compression methods.
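The abstract reports results in terms of BD-rate (Bjøntegaard delta rate), which summarizes the average bitrate difference between two codecs at equal quality. As a rough illustration of how such a figure is commonly computed, the sketch below fits a cubic polynomial to log-rate as a function of PSNR for each codec and averages the gap over the overlapping PSNR range. The function name `bd_rate` and the sample rate/PSNR points are hypothetical and are not taken from the paper; the authors' exact evaluation procedure may differ.

```python
import numpy as np

def bd_rate(rate_anchor, psnr_anchor, rate_test, psnr_test):
    """Average bitrate change (%) of the test codec relative to the anchor.

    Negative values mean the test codec needs fewer bits at equal PSNR.
    Classic cubic-fit variant of the Bjontegaard metric (an assumption;
    other interpolation schemes exist).
    """
    log_ra = np.log(np.asarray(rate_anchor, dtype=float))
    log_rt = np.log(np.asarray(rate_test, dtype=float))
    pa = np.asarray(psnr_anchor, dtype=float)
    pt = np.asarray(psnr_test, dtype=float)

    # Only the PSNR interval covered by both curves is compared.
    lo = max(pa.min(), pt.min())
    hi = min(pa.max(), pt.max())

    # Shift PSNR by a common offset to keep the cubic fit well conditioned.
    fit_a = np.polyfit(pa - lo, log_ra, 3)
    fit_t = np.polyfit(pt - lo, log_rt, 3)

    # Integrate each fitted log-rate curve over [lo, hi] and take the mean.
    int_a = np.polyint(fit_a)
    int_t = np.polyint(fit_t)
    span = hi - lo
    avg_a = (np.polyval(int_a, span) - np.polyval(int_a, 0.0)) / span
    avg_t = (np.polyval(int_t, span) - np.polyval(int_t, 0.0)) / span

    # Convert the mean log-rate difference back to a percentage.
    return (np.exp(avg_t - avg_a) - 1.0) * 100.0

# Hypothetical rate (bpp) / PSNR (dB) points, for illustration only.
psnr = [30.0, 33.0, 36.0, 39.0]
anchor_bpp = [0.20, 0.40, 0.80, 1.60]
test_bpp = [0.10, 0.20, 0.40, 0.80]  # half the anchor rate at each PSNR

print(f"BD-rate: {bd_rate(anchor_bpp, psnr, test_bpp, psnr):.2f}%")  # approximately -50
```

A negative BD-rate therefore indicates bitrate savings over the anchor codec, which is the sense in which a lower value is "superior".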