Efficient Federated Learning over Multiple Access Channel with Differential Privacy Constraints


May 15, 2020

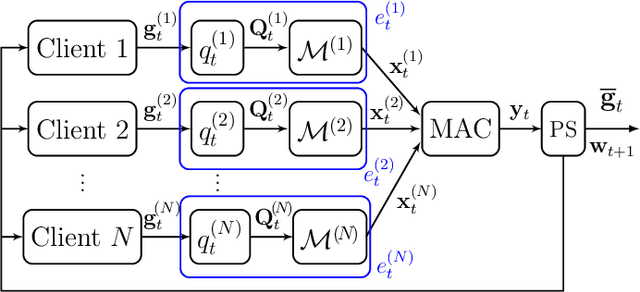

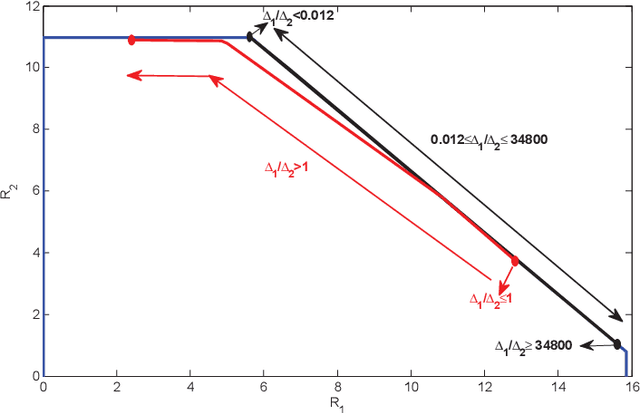

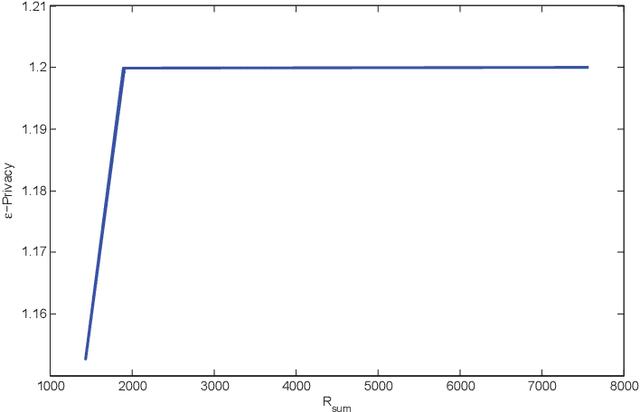

This paper considers federated learning (FL) over a multiple access channel (MAC). More precisely, we consider the FL setting in which clients train a machine learning model by communicating simultaneously with a parameter server (PS), with the aim of better utilizing the computational resources available in the network. We further require that the communication between the clients and the PS satisfy a differential privacy constraint. To minimize the training loss while satisfying the privacy constraint over the MAC, each client transmits a digital (quantized) variant of distributed stochastic gradient descent (D-DSGD). In addition, each client adds binomial noise to its transmission to preserve privacy. We investigate the quantization levels in D-DSGD and the binomial noise parameters that give the most efficient convergence, subject to the privacy constraint and the capacity limit of the MAC.
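The client-side step described in the abstract (quantize the gradient, then add binomial noise before sending) can be illustrated with a minimal sketch. This is not the paper's D-DSGD scheme: the function names `client_encode` and `server_decode`, the shared clipping bound `clip`, the uniform stochastic quantizer, and all parameter values are assumptions chosen for illustration, and neither the privacy accounting nor the MAC capacity limit is modeled.

```python
import numpy as np

def client_encode(grad, clip, levels, n_trials, p, rng):
    """Hypothetical client step: clip, stochastically quantize, add binomial noise."""
    g = np.clip(grad, -clip, clip)
    step = 2.0 * clip / (levels - 1)           # spacing between quantization points
    scaled = (g + clip) / step                 # position in [0, levels - 1]
    lower = np.floor(scaled)
    # Round up with probability equal to the fractional part (unbiased rounding).
    round_up = rng.random(g.shape) < (scaled - lower)
    idx = np.clip(lower + round_up, 0, levels - 1).astype(np.int64)
    # Binomial(n_trials, p) noise keeps the transmitted symbols integer valued.
    noise = rng.binomial(n_trials, p, size=g.shape)
    return idx + noise

def server_decode(symbol_sum, n_clients, clip, levels, n_trials, p):
    """Hypothetical server step: remove the noise mean and undo the quantizer scaling."""
    step = 2.0 * clip / (levels - 1)
    mean_idx = symbol_sum / n_clients - n_trials * p
    return mean_idx * step - clip

# Illustrative values only (assumptions, not taken from the paper).
rng = np.random.default_rng(0)
n_clients, dim = 200, 5
clip, levels, n_trials, p = 1.0, 16, 8, 0.5

grads = rng.normal(0.0, 0.3, size=(n_clients, dim))   # synthetic client gradients
symbols = np.array([client_encode(g, clip, levels, n_trials, p, rng) for g in grads])
estimate = server_decode(symbols.sum(axis=0), n_clients, clip, levels, n_trials, p)
target = np.clip(grads, -clip, clip).mean(axis=0)
print("max abs error:", np.abs(estimate - target).max())
```

In this sketch, increasing `levels` lowers the quantization error but costs more bits per coordinate, while increasing `n_trials` adds more noise variance (`n_trials * p * (1 - p)` per symbol), which is the kind of trade-off the abstract says is optimized.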
