Efficient Exploration in Binary and Preferential Bayesian Optimization


Oct 18, 2021

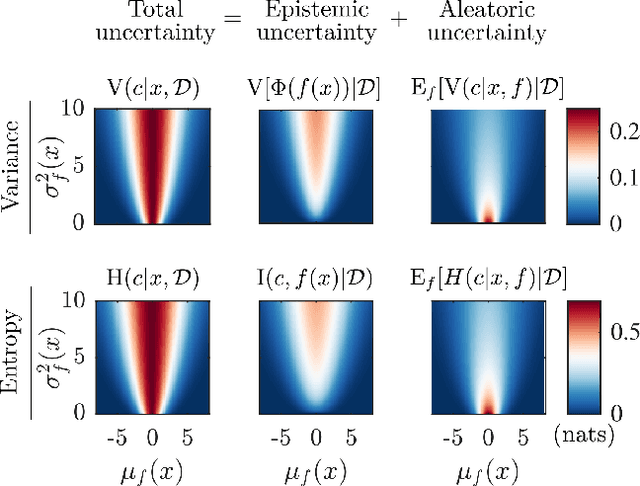

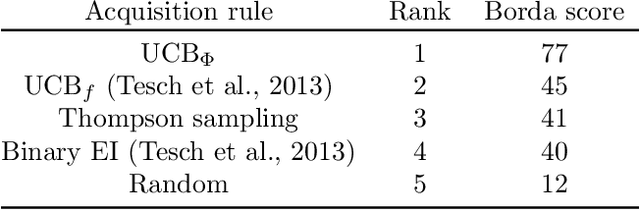

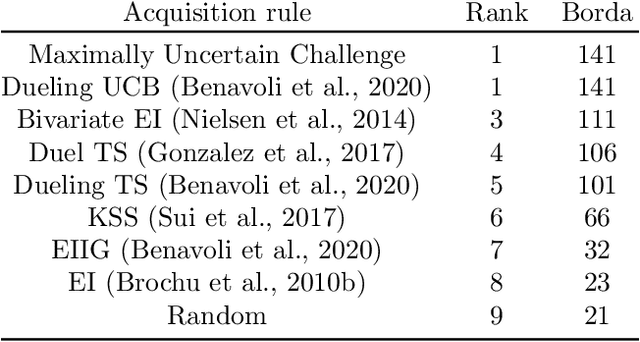

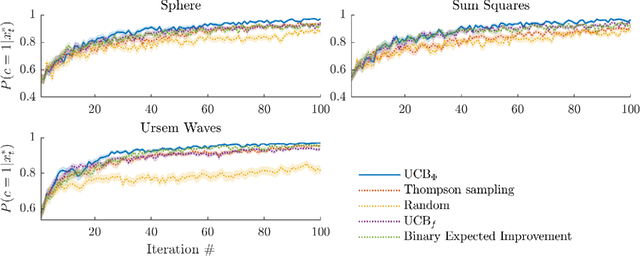

Bayesian optimization (BO) is an effective approach to optimizing expensive black-box functions. It seeks to trade off exploitation (selecting parameters where the maximum is likely) against exploration (selecting parameters where we are uncertain about the objective function). In many real-world situations, direct measurements of the objective function are not possible, and only binary measurements such as success/failure or pairwise comparisons are available. To perform efficient exploration in this setting, we show that it is important for BO algorithms to distinguish between two types of uncertainty: epistemic uncertainty, which concerns the unknown objective function, and aleatoric uncertainty, which comes from noisy observations and cannot be reduced. In effect, only the former is important for efficient exploration. Based on this, we propose several new acquisition functions that outperform state-of-the-art heuristics in binary and preferential BO, while being fast to compute and easy to implement. We then generalize these acquisition rules to batch learning, where multiple queries are performed simultaneously.
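The distinction between the two types of uncertainty can be illustrated with a small numerical sketch. It is not taken from the paper and is not presented as one of its acquisition functions. It assumes a probit observation model, y ~ Bernoulli(Φ(f)), with a Gaussian posterior f ~ N(mu, sigma²) at a candidate point, and applies the law of total variance to split the variance of y into an epistemic part, Var[Φ(f)], and an aleatoric part, E[Φ(f)(1 − Φ(f))]. The function name `decompose_uncertainty` and its parameters are hypothetical.

```python
import math


def normal_cdf(z):
    """Standard normal CDF, used here as the assumed probit link."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def decompose_uncertainty(mu, sigma, n_grid=2001, z_max=8.0):
    """Split Var[y] for y ~ Bernoulli(Phi(f)), f ~ N(mu, sigma^2).

    Returns (total, epistemic, aleatoric), where
      total     = E[p] * (1 - E[p])   variance of the binary outcome
      epistemic = Var[p]              reducible by learning about f
      aleatoric = E[p * (1 - p)]      irreducible observation noise
    and p = Phi(f). Expectations over f are approximated on a fixed
    grid of standard-normal quantiles (an illustrative choice).
    """
    step = 2.0 * z_max / (n_grid - 1)
    zs = [-z_max + i * step for i in range(n_grid)]
    weights = [math.exp(-0.5 * z * z) for z in zs]
    norm = sum(weights)

    ps = [normal_cdf(mu + sigma * z) for z in zs]
    mean_p = sum(w * p for w, p in zip(weights, ps)) / norm
    aleatoric = sum(w * p * (1.0 - p) for w, p in zip(weights, ps)) / norm
    epistemic = sum(w * (p - mean_p) ** 2 for w, p in zip(weights, ps)) / norm
    total = mean_p * (1.0 - mean_p)
    return total, epistemic, aleatoric


if __name__ == "__main__":
    # Two candidates with the same predicted success probability (0.5),
    # hence the same total variance, but very different epistemic parts.
    for sigma in (0.1, 3.0):
        total, epi, alea = decompose_uncertainty(mu=0.0, sigma=sigma)
        print(f"sigma={sigma}: total={total:.4f} "
              f"epistemic={epi:.4f} aleatoric={alea:.4f}")
```

In this sketch, both candidates have a predicted success probability of one half, so a rule based on total predictive variance cannot tell them apart. The epistemic term is larger for the candidate whose latent value is poorly known, which is the quantity the abstract identifies as relevant for exploration.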
