Efficient estimation of the ANOVA mean dimension, with an application to neural net classification

Paper and Code

Jul 02, 2020

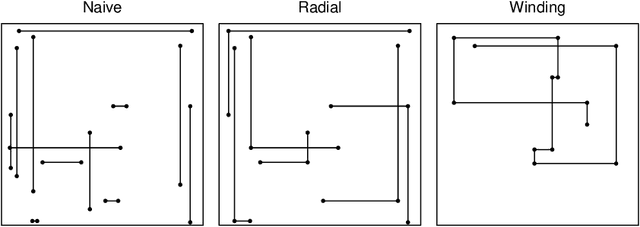

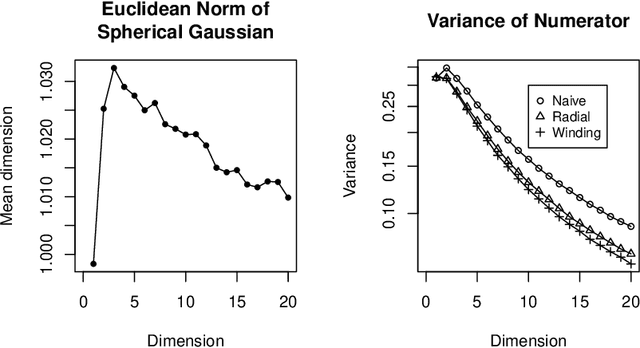

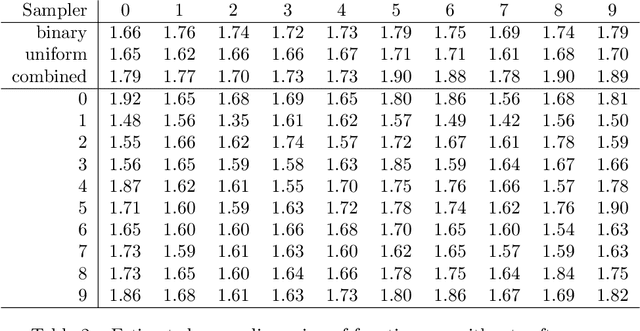

The mean dimension of a black box function of $d$ variables is a convenient way to summarize the extent to which it is dominated by high or low order interactions. It is expressed in terms of $2^d-1$ variance components but it can be written as the sum of $d$ Sobol' indices that can be estimated by leave one out methods. We compare the variance of these leave one out methods: a Gibbs sampler called winding stairs, a radial sampler that changes each variable one at a time from a baseline, and a naive sampler that never reuses function evaluations and so costs about double the other methods. For an additive function the radial and winding stairs are most efficient. For a multiplicative function the naive method can easily be most efficient if the factors have high kurtosis. As an illustration we consider the mean dimension of a neural network classifier of digits from the MNIST data set. The classifier is a function of $784$ pixels. For that problem, winding stairs is the best algorithm. We find that inputs to the final softmax layer have mean dimensions ranging from $1.35$ to $2.0$.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge