Efficient Convolutional Neural Networks for Pixelwise Classification on Heterogeneous Hardware Systems

Paper and Code

Sep 11, 2015

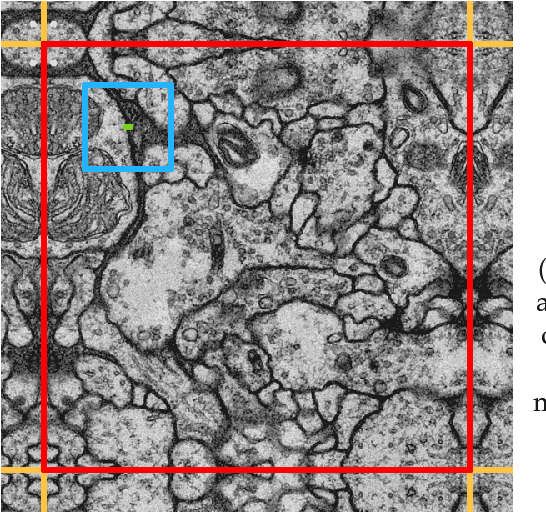

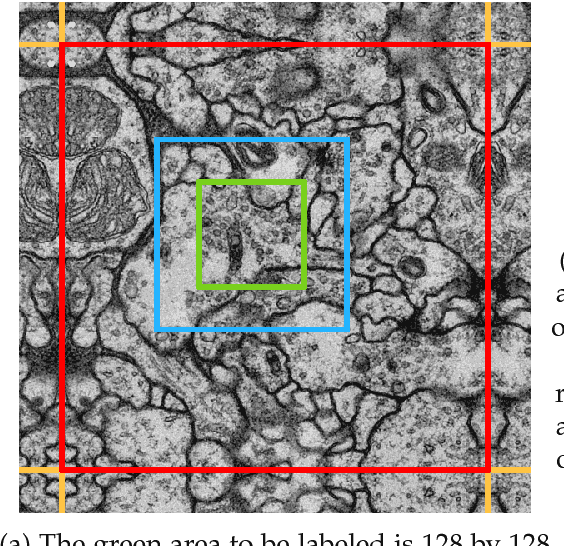

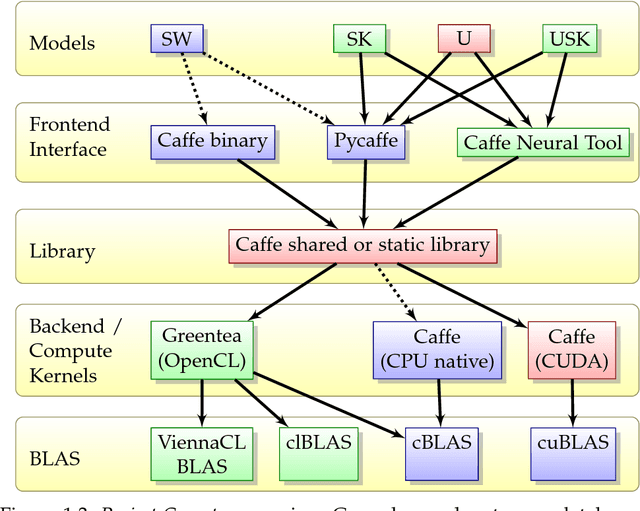

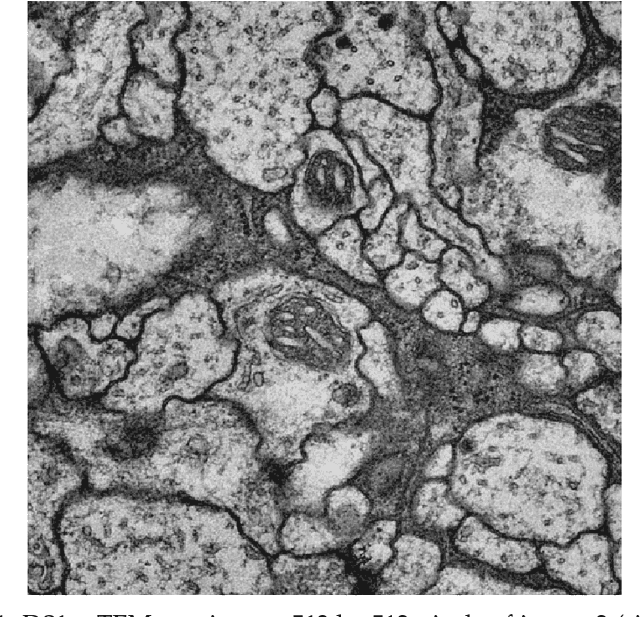

This work presents and analyzes three convolutional neural network (CNN) models for efficient pixelwise classification of images. A sliding window network classifies a single pixel per image patch, so applying it to every pixel of a whole image repeats many computations on overlapping patches. The new architectures solve this issue either by removing the redundant computations or by using fully convolutional architectures that inherently predict many pixels at once. The implementations of the three models are accessible through a new utility built on top of the Caffe library. The utility supports a wide range of image input and output formats, pre-processing parameters, and methods to equalize the label histogram during training. The Caffe library has been extended with new layers and a new OpenCL backend, which makes it available on a wider range of hardware such as CPUs and GPUs. On AMD GPUs, speedups of $54\times$ (SK-Net), $437\times$ (U-Net) and $320\times$ (USK-Net) have been observed, taking the SK-equivalent SW (sliding window) network as the baseline. The label throughput is up to one megapixel per second. The analyzed neural networks have distinctive characteristics that apply during training or processing, and not every data set is suitable for every architecture. The quality of the predictions is assessed on two neural tissue data sets, one of which is the ISBI 2012 challenge data set. Two different loss functions, Malis loss and Softmax loss, were used during training. The whole pipeline, consisting of the models, the interface and the modified Caffe library, is available as open source software under the working title Project Greentea.
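The redundancy of sliding window classification can be illustrated with a minimal sketch. It is not one of the three models and does not use the Caffe utility: it assumes a toy network of two 3×3 "valid" convolutions with a ReLU in between, no pooling, and made-up integer weights. All function and variable names (`conv2d_valid`, `two_layer_net`, `sliding_window`, `macs`) are hypothetical. The sketch classifies every pixel once patch by patch and once by running the same layers over the whole image, and counts the multiply-accumulate operations in each case.

```python
def conv2d_valid(img, kernel, macs):
    """Plain 'valid' 2D convolution (cross-correlation); macs[0] counts multiply-adds."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for y in range(len(img) - kh + 1):
        row = []
        for x in range(len(img[0]) - kw + 1):
            acc = 0
            for dy in range(kh):
                for dx in range(kw):
                    acc += img[y + dy][x + dx] * kernel[dy][dx]
                    macs[0] += 1
            row.append(acc)
        out.append(row)
    return out


def two_layer_net(img, k1, k2, macs):
    """Toy network: conv -> ReLU -> conv (assumed for illustration only)."""
    hidden = [[max(0, v) for v in row] for row in conv2d_valid(img, k1, macs)]
    return conv2d_valid(hidden, k2, macs)


def sliding_window(img, k1, k2, macs):
    """Classify each pixel separately from the patch covering its receptive field."""
    patch = len(k1) + len(k2) - 1  # receptive field of the two stacked kernels
    out = []
    for y in range(len(img) - patch + 1):
        row = []
        for x in range(len(img[0]) - patch + 1):
            window = [r[x:x + patch] for r in img[y:y + patch]]
            # One patch yields exactly one output value.
            row.append(two_layer_net(window, k1, k2, macs)[0][0])
        out.append(row)
    return out


# Made-up integer image and weights so both results can be compared exactly.
image = [[(3 * y + 5 * x) % 7 for x in range(12)] for y in range(12)]
k1 = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]
k2 = [[0, 1, 0], [1, -3, 1], [0, 1, 0]]

sw_macs, dense_macs = [0], [0]
sw_out = sliding_window(image, k1, k2, sw_macs)
dense_out = two_layer_net(image, k1, k2, dense_macs)

print("identical predictions:", sw_out == dense_out)
print("sliding window multiply-adds:", sw_macs[0])
print("whole-image multiply-adds:", dense_macs[0])
```

Both variants produce the same output map, but the sliding window version recomputes the first-layer activations for every overlapping patch. Under these assumptions the gap grows with the depth of the network and the size of the receptive field. Networks with pooling layers need additional care to stay equivalent, which this sketch does not cover.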
